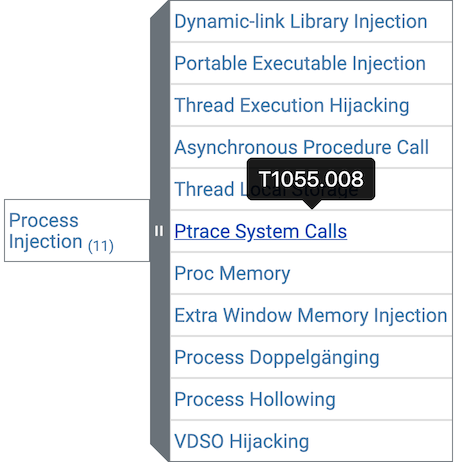

Earlier this summer, MITRE released a new version of the ATT&CK framework, reorganizing the matrix to include sub-techniques. The move solved a growing problem: as the framework had expanded over the years, there were major discrepancies in the scope of certain techniques. Some techniques, like DLL Search Order Hijacking, were specific and referred to a single, narrowly defined behavior. Others, like Process Injection, were broad and accounted for many different kinds of behaviors.

If you think about ATT&CK from the perspective of detection engineering and coverage, scoping is very important. To that point, sub-techniques alleviate ambiguity around the scope of detection for a given technique and allow us to more confidently assess detection coverage for that technique. They also allow us to better scope our research, hypotheses about detection analytics, and test development.

While transitioning to sub-techniques solves a lot of problems in the long term, it can create many challenges to overcome in the short term. In this blog, we’re going to offer some high-level advice that should be useful for anyone considering a transition to sub-techniques, before describing in detail how we managed this process on our end.

There was a significant amount of work involved in the transition, but the challenges were far from insurmountable and only increased our already deep appreciation for MITRE ATT&CK.

The new and improved ATT&CK matrix can be found at https://attack.mitre.org/

Our advice for mapping to sub-techniques

You can read about the context around our full process below, but here’s the TL;DR if you’re tackling a sub-technique remapping yourself:

- Figure out everywhere that you use ATT&CK—from reports to analytics to visualizations—and decide who’s in charge of remapping each piece.

- Determine if you want to fundamentally reimagine the way you’re mapping techniques. Are you optimizing for speed or accuracy? Can you add more automation? Should there be more human reviews?

- Determine if you want to take a “lean tiger team” approach and have a few people do the remapping, or if you want to divide and conquer among more people. It’s easier to maintain consistency with a small team but requires more time, whereas a larger team might move faster but with less consistency. If you take the latter approach, we recommend creating a style guide and having a secondary review process to ensure consistency.

- Take this opportunity to clean up any legacy issues you have with mapping to ATT&CK: do you have deprecated techniques? Are there other inconsistencies you can improve upon? While you’re doing the work, you might as well tackle these too.

- Set realistic deadlines. Remember that whoever is doing this remapping probably has other work too, so set a schedule and decide which check-in points you need along the way to stay on track.

First things first: how do we use ATT&CK?

The very first thing we had to do was figure out all of the different ways we use ATT&CK at Red Canary.

Red Canary provides managed detection and response (MDR), open source tools, and educational resources—all of which depend on ATT&CK in one way or another.

Red Canary MDR

The thousands of behavioral analytics we’ve developed to surface potential threats in our customers’ environments are all mapped to ATT&CK. This allows us to communicate more effectively with our customers and the community about detection coverage and the techniques that adversaries are leveraging in the wild.

Atomic Red Team

The library of tests that makes up our open source platform for simulating adversary techniques, Atomic Red Team, is also organized to align with ATT&CK. If ATT&CK is a taxonomy of threats that categorizes and describes the techniques an adversary might leverage in an attack, then Atomic Red Team is a schema and collection of tests for defining and exercising those techniques, respectively. In this way, Atomic Red Team is intrinsically bound to techniques (or sub-techniques) in the ATT&CK matrix.

Educational resources

Since much of the content we produce is based on the things we detect and our open source tooling, ATT&CK has also framed the way we talk about threats in the educational content and research we produce, whether it’s an article on our blog, our quarterly ATT&CK webinar series, or our annual Threat Detection Report.

Detection analytics and atomic tests

As you can see, a lot of what we do at Red Canary has some relation to ATT&CK, and so our transition to sub-techniques affects everyone from our customers to the Atomic Red Team community to the people who read our research. Once we’d accounted for everything that would be affected by this change, it was time to divide and conquer and get the actual work done.

As it turns out, the process for transitioning detection analytics to sub-techniques was fundamentally similar to the process of transitioning atomic tests. This makes perfect sense when you consider that: a) an atomic test is effectively a self-contained script designed to exercise a malicious behavior and b) a detection analytic is similarly self-contained Ruby code designed to catch a malicious behavior. As such, we needed to review the code or scripts, examine the mapping decisions we’d previously made, and realign them to sub-techniques as needed.

On background

Analytics

To provide a bit of background, our MDR platform gathers telemetry from customer endpoints and parses it against a set of behavioral detection analytics. We have more than 1,000 of them that are currently active, and here’s a simplified example of what one of them might look like:

- A process that resembles winword.exe spawning a process that resembles powershell.exe and a command line that includes an external network connection

When a telemetry pattern matches a detection analytic, our product creates an event that is analyzed by our detection engineering team. The above analytic, for example, might trigger on a malicious macro in a Word document spawning PowerShell and downloading malware from the internet. If the detection engineering team determines that a given event is malicious, we inform our customers that we’ve found a confirmed threat on one or more of their endpoints.

Whenever possible, these confirmed threats are mapped to an ATT&CK technique. The above analytic would probably map to both PowerShell and Spearphishing Attachment. This is important to understand: our ATT&CK mapping happens at the analytic level. In other words, our detection engineering and intel teams make technique mapping decisions while they are developing an analytic. This saves tremendous amounts of time by preventing our detection engineers from having to select a technique every time they ship a confirmed threat to a customer.
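To make the idea of mapping at the analytic level concrete, here is a minimal sketch in Python. It is not our production code (our analytics are written in Ruby), and every name in it (`ProcessEvent`, `Analytic`, `techniques_for`) is hypothetical. The string checks are a deliberately crude stand-in for "resembles", and the technique IDs are the post-sub-technique IDs for PowerShell and Spearphishing Attachment.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class ProcessEvent:
    """Hypothetical, heavily simplified slice of endpoint telemetry."""
    parent_name: str
    process_name: str
    command_line: str


@dataclass(frozen=True)
class Analytic:
    """A detection analytic that carries its ATT&CK mapping with it."""
    name: str
    matches: Callable[[ProcessEvent], bool]
    techniques: Tuple[str, ...]  # chosen once, when the analytic is written


def word_spawns_powershell_with_url(event: ProcessEvent) -> bool:
    # Crude approximation: substring checks instead of real process matching.
    cmd = event.command_line.lower()
    return (
        "winword" in event.parent_name.lower()
        and "powershell" in event.process_name.lower()
        and ("http://" in cmd or "https://" in cmd)
    )


WORD_POWERSHELL = Analytic(
    name="winword-spawns-powershell-with-url",
    matches=word_spawns_powershell_with_url,
    techniques=("T1059.001", "T1566.001"),  # PowerShell, Spearphishing Attachment
)


def techniques_for(event: ProcessEvent, analytics: List[Analytic]) -> List[str]:
    """Collect the pre-assigned techniques of every analytic that fires."""
    found: List[str] = []
    for analytic in analytics:
        if analytic.matches(event):
            found.extend(analytic.techniques)
    return found
```

Because the techniques travel with the analytic, nobody has to pick them again when an event is confirmed as a threat.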

Atomics

Atomic Red Team tests are organized by ATT&CK techniques in the Atomics directory of the Atomic Red Team repository. Each technique has a folder that contains YAML and Markdown files with all of the tests for that technique. Organizing tests in this way promotes consistency and enables users to easily execute tests based on their respective technique. With the introduction of sub-techniques, we needed to come to an agreement about what would be the best way to organize a combination of sub-techniques and their overarching parent techniques, when applicable.

Ultimately, we decided that we would not treat sub-techniques any differently from parent techniques, organizing tests into parent or sub-technique folders as needed. The only meaningful difference between the two categories is that sub-techniques have a three-digit numeric suffix and a more tightly constrained scope. Thinking about it in this way led us to agree that it would be best to add sub-technique folders to the atomics root directory rather than creating sub-directories within the parent technique folders. This was an intuitive decision that ultimately didn’t create any additional complexity.
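As a rough illustration of that flat layout, the Python sketch below resolves both parent and sub-technique IDs to sibling folders under the same root. The helper names and the default root name are assumptions made for this example, not part of the Atomic Red Team tooling.

```python
import re
from pathlib import PurePosixPath

# "T" plus four digits, optionally followed by a three-digit sub-technique suffix.
TECHNIQUE_ID = re.compile(r"T\d{4}(?:\.\d{3})?")


def atomic_folder(technique_id: str, root: str = "atomics") -> PurePosixPath:
    """Return the folder for a technique or sub-technique (hypothetical helper).

    Sub-techniques sit directly under the root, next to their parent,
    rather than nested inside the parent's folder.
    """
    if not TECHNIQUE_ID.fullmatch(technique_id):
        raise ValueError(f"not an ATT&CK technique ID: {technique_id!r}")
    return PurePosixPath(root) / technique_id


def parent_of(technique_id: str) -> str:
    """Strip the sub-technique suffix, if any."""
    return technique_id.split(".")[0]
```

With this layout, `atomic_folder("T1055")` and `atomic_folder("T1055.012")` are siblings, and the parent relationship is still recoverable from the ID itself via `parent_of`.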

Divvying up the work

Once MITRE released sub-techniques, we knew that we’d have to re-examine all of our analytics and atomics and refactor them to account for this change.

We considered assigning the monumental task of re-mapping our library of more than 1,000 behavioral analytics and hundreds of atomics to just a few people. The benefit of limiting this work to a small group is that it could result in a higher level of consistency. However, given the sheer volume of detection rules and tests to refactor, we decided to spread the work out across Red Canary’s entire detection engineering, intel, and research teams.

While this decision saved time, possibly at the expense of consistency, it also increased the team’s collective familiarity with common software engineering practices and, importantly, sub-techniques. That said, if you’re re-mapping analytics and you’ve got a more manageable number of them, it might be a good idea to assign that task to a single person.

Refactoring

Naturally, we wanted code to do most of the work for us. To get an initial sense of the work required to complete the transition, we used MITRE’s crosswalk JSON document and wrote a PowerShell script to report how many techniques were changing. We then built upon the script to automatically update existing technique mappings wherever a one-to-one mapping to a new ID existed. However, we quickly realized that transitioning to sub-techniques wasn’t going to be as easy as we had naively thought.
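The split between "remap automatically" and "needs a human" can be sketched as follows. This is Python rather than the PowerShell we actually used, and the `CROSSWALK` dictionary is a hypothetical, simplified stand-in for illustration only; it does not reproduce the schema of MITRE’s crosswalk JSON.

```python
from typing import Dict, List, Tuple

# Hypothetical, simplified crosswalk: legacy ID -> candidate new IDs.
# The real crosswalk document is structured differently.
CROSSWALK: Dict[str, List[str]] = {
    "T1086": ["T1059.001"],                           # PowerShell
    "T1193": ["T1566.001"],                           # Spearphishing Attachment
    "T1055": ["T1055.001", "T1055.002", "T1055.012"], # Process Injection (subset)
}


def plan_remap(
    current_ids: List[str], crosswalk: Dict[str, List[str]]
) -> Tuple[Dict[str, str], List[str]]:
    """Separate IDs that can be remapped by script from those needing review."""
    automatic: Dict[str, str] = {}
    needs_review: List[str] = []
    for technique_id in current_ids:
        candidates = crosswalk.get(technique_id, [])
        if len(candidates) == 1:
            # One-to-one mapping: safe to rewrite without a human.
            automatic[technique_id] = candidates[0]
        else:
            # Several candidates, or an ID the crosswalk does not know about.
            needs_review.append(technique_id)
    return automatic, needs_review
```

The size of `needs_review` relative to `automatic` gives a first estimate of how much manual work remains.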

Atomics

The two primary tasks that remained were the following:

- Tests that mapped to a parent technique for which multiple sub-techniques were defined needed to be manually reviewed and placed into the proper sub-technique, when applicable. For example, many Process Injection sub-techniques were added, so every process injection-related test had to be reviewed to determine which specific injection technique it used and to move it into the appropriate sub-technique folder.

- Many Atomic tests contained MITRE technique ID references scattered throughout their content, and these did not lend themselves to a scripted transition. Ultimately, we decided that a full, manual review of Atomic tests was necessary, so we portioned out chunks of tests among the Atomic Red Team maintainers.
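A script can at least point reviewers at the places where old IDs linger, even if it cannot decide what to replace them with. The following Python sketch, with a hypothetical `stale_references` helper and made-up sample input, lists every line of a test’s text that still mentions a retired ID:

```python
import re
from typing import List, Set, Tuple

# Matches IDs such as T1086 or T1059.001 as whole tokens.
ID_PATTERN = re.compile(r"\bT\d{4}(?:\.\d{3})?\b")


def stale_references(text: str, retired_ids: Set[str]) -> List[Tuple[int, str]]:
    """Return (line number, ID) pairs for every retired ID found in the text."""
    hits: List[Tuple[int, str]] = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for match in ID_PATTERN.finditer(line):
            if match.group() in retired_ids:
                hits.append((line_no, match.group()))
    return hits
```

A report like this narrows down where to look; deciding which sub-technique each reference should become remains a manual call.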

Interestingly, as was the case with remapping detection analytics, the manual review was actually a fantastic forcing function. It gave us the opportunity to improve test consistency, fix typos, identify and remove duplicate tests, and more. A little spring cleaning never hurts and the sub-technique transition offered the ideal opportunity to do some housekeeping and to refamiliarize ourselves with the extensive suite of tests.

Analytics

In essence, we remapped everything we could automatically, and then we had our detection engineers, intel analysts, and researchers review everything. This manual review served two purposes:

- The obvious one: to make sure that all the scripts worked correctly

- The less obvious one: to reconsider how we’d mapped everything in the first place

The importance of the second purpose can’t be overstated. Sub-techniques offered us a great opportunity to examine our hypotheses about what each detection analytic would catch in the wild. It’s all well and good to think that an analytic might alert on a certain type of behavior, but the only way to know for certain is to examine the things it actually caught in practice.

Threat Detection Report and other educational content

Now that we’ve fully updated our analytics and atomics, you should expect to see more of our educational and research content align with sub-techniques as well. Most notably, the 2021 Threat Detection Report, which is due out sometime early next year, will likely list the prevalence of sub-techniques, though we may also examine the prevalence of parent techniques. If you have strong feelings one way or the other, we’d love to hear from you.

This might go without saying, but we don’t have any plans for retroactively transitioning content we’ve already produced so that it aligns with sub-techniques. As such, there may be some confusion in the coming weeks and months with some content making reference to the pre-sub-techniques ATT&CK matrix and other content referencing sub-techniques. Over time, you can expect to see more of the latter and less of the former.

What we learned: beyond the TL;DR

ATT&CK mapping is an art, not a science. People interpret techniques and how they should be mapped in different ways. Sometimes you design an analytic thinking it will catch one behavior but it ends up catching something completely different.

Our mapping was pretty good to begin with, but the refactor let us take a step back, reconsider earlier decisions, and get everyone on the same page. As a result, our behavioral analytics are much more consistently mapped than they were previously. One other realization that we’re still working on is that these mapping decisions should ideally be made after a threat is investigated and confirmed.

At present, we map our threats to ATT&CK at the analytic level. To reiterate, we make decisions about what behaviors a detection analytic will catch before it ever goes into production. It works pretty well because, most of the time, we design analytics to catch a specific thing in theory and they catch that same specific thing in practice. However, a system of conditional mapping, one where you flag the more ambiguous detection analytics and require that a human review the mapping decisions AFTER the threat has been investigated and confirmed in its full context, could go a long way toward combating the inaccuracies that might emerge from mapping at the analytic level.
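One way such conditional mapping could work is sketched below in Python. This is an illustration of the idea only, not something we have built; `AnalyticMapping`, its `ambiguous` flag, and `resolve_mapping` are all hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class AnalyticMapping:
    """Techniques assigned when the analytic is written, plus an ambiguity flag."""
    techniques: Tuple[str, ...]
    ambiguous: bool = False  # True when the analytic can catch several behaviors


def resolve_mapping(
    mapping: AnalyticMapping,
    analyst_choice: Optional[Tuple[str, ...]] = None,
) -> Tuple[str, ...]:
    """Pick the techniques to report for a confirmed threat.

    Unambiguous analytics keep their pre-assigned mapping. Ambiguous ones
    require a choice made by a human after the investigation is complete.
    """
    if not mapping.ambiguous:
        return mapping.techniques
    if analyst_choice is None:
        raise ValueError("ambiguous analytic: mapping needs human review")
    return analyst_choice
```

The design keeps the time savings of analytic-level mapping for the common case and only spends analyst attention on the analytics flagged as ambiguous.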

Less intuitively, the refactor also gave us an excellent opportunity to provide feedback to MITRE. We submitted a pair of new techniques to the framework, and we also shared some of what we learned in putting sub-techniques into practice. The MITRE ATT&CK team wants and needs feedback so they can keep improving the framework.

To that point, sub-techniques are a much-needed improvement to ATT&CK that will allow us to more confidently assess detection coverage and describe threats. Huge thanks to MITRE for all the hard work they put into this!