“Do we detect this technique?” It’s a common question for defenders and detection engineers, but it’s also a loaded one that expects a binary “yes” or “no” response—and one in which “no” is implicitly understood to be an unacceptable answer.

As defenders and detection engineers, we know the nuance that lies beneath such an overly simplistic response, but it’s nonetheless our responsibility to answer that question confidently in the affirmative or negative. Confidence can be hard to obtain, though, given the following ambiguities:

- What optics are associated with this technique?

- How resilient are my detection hypotheses to evasion?

- What does this technique actually comprise? What technical components are in play that make up the technique?

- How do we define “sufficient” coverage?

Without sugarcoating anything, answering those questions can be extremely challenging, and reducing everything to a “yes” or “no” is a recipe for anxiety.

One of the ways that we’ve tried to tackle such ambiguous questions is to start by considering what it is exactly that we’re detecting. At Red Canary, what we’re detecting can often be reduced to specific instances or sets of behaviors. One definition of a behavior in the context of attacker tradecraft is a deliberate selection of procedures with the goal of achieving an objective. A procedure, as we define it, is an instance or example of a technique.

For example, if the technique is Mshta, the procedure could be mshta.exe downloading and executing HTA script code from a specific URI. And a behavior would be HTA script code that spawns a child process of powershell.exe. Beyond these (and this will become important in the coming paragraphs), there may also be an unknown number of variations on any given technique. Sticking with Mshta as an example, one such variation might include the execution of HTA script content inline in the command line rather than downloading it from a URI or dropping an HTA file to disk.

All of the HTA script code might eventually do the same thing: spawn a child powershell.exe process to execute additional malicious actions. However, an adversary can choose from a wide variety of technique variations to execute the same HTA script code, each of which may require slightly different detection logic.

Considering all of this, it seems that the foundation of a detection is made up of our ability to observe a technique, which may be composed of one or more variations. Going through this thought exercise, we arrive at a foundational question: Without regard for detecting suspicious or malicious behavior, can we observe a technique independent of its intent in the first place?

AtomicTestHarnesses is a free, open source tool that we’ve developed to help answer all of these questions.

What is AtomicTestHarnesses?

AtomicTestHarnesses is a PowerShell module that reduces the complexity required to understand and interact with attack techniques. It consists of PowerShell functions that execute specific attack techniques on a host and generate output designed to: 1) validate that the technique executed successfully and 2) surface relevant detection-specific telemetry.

Through our research process, we also go to painstaking lengths to identify all possible variations of an attacker technique (within reasonable and ultimately subjective bounds), and then implement them as well-documented parameters for each function. AtomicTestHarnesses is designed to run without external dependencies but can also serve as a dependency for other tools or frameworks like Atomic Red Team.

In the simplest terms, AtomicTestHarnesses streamlines the execution of attack technique variations and validates that the expected telemetry surfaces in the process.

Accompanying our initial release are three functions designed to abstract away the complexities of, and deliver contextual output for, the following attack techniques:

- T1134.004: Access Token Manipulation: Parent PID Spoofing

- T1218.001: Signed Binary Proxy Execution: Compiled HTML File

- T1218.005: Signed Binary Proxy Execution: Mshta

While the initial array of attack techniques is small, we will gradually add new techniques over time as we research them and enumerate as many variations as possible. Prior to releasing additional technique functions, there are many boxes we’ll want to check internally to ensure, among other things, that relevant vendors are aware of potentially unique variations or vulnerabilities in their code.

Instructions for installing and using AtomicTestHarnesses can be found here.

So I’ve loaded the AtomicTestHarnesses module. Now what?

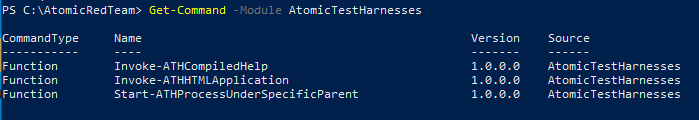

As is the case with any new PowerShell module, you’ll want to see what functions are available. To do that, run the following:

Get-Command -Module AtomicTestHarnesses

This will list out all of the available functions that you can interact with. You may notice the naming consistency. Each function noun is prefixed with ATH (the abbreviation for AtomicTestHarnesses) so that each function is clearly associated with the module. The noun that follows will then be a descriptive representation of the technique that is automated. Supposing we want to invoke HTA functionality, we’ll want to read up on its documentation. The Get-Help command will provide that documentation:

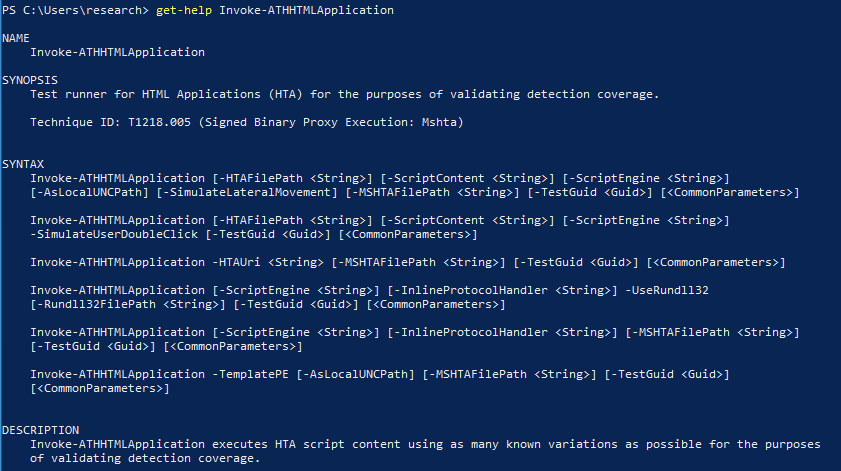

Get-Help Invoke-ATHHTMLApplication -Full

Every function is thoroughly documented and will include, at a minimum, the following information:

- A brief description of what the function does

- The relevant MITRE ATT&CK ID

- Documentation of all supported parameters

- A description of the object the function outputs

- Relevant usage examples

Even without diving into the documentation, our goal as maintainers is to make getting up and running as easy as possible. As such, we will always strive to incorporate default behavior when no arguments are supplied. For example, running Invoke-ATHHTMLApplication without arguments might yield the following output:

| Property | Value |
| --- | --- |
| TechniqueID | T1218.005 |
| TestSuccess | True |
| TestGuid | 7f6921aa-a5fb-483e-91a3-4160e5e695e6 |
| ExecutionType | File |
| ScriptEngine | JScript |
| HTAFilePath | `C:\Users\TestUser\Desktop\Test.hta` |
| HTAFileHashSHA256 | 426A0ABF072110B1277B20C0F8BEDA7DC35AFFA2376E4F52CD35DF75CF90987A |
| RunnerFilePath | `C:\WINDOWS\System32\mshta.exe` |
| RunnerProcessId | 6716 |
| RunnerCommandLine | `"C:\WINDOWS\System32\mshta.exe" "C:\Users\TestUser\Desktop\Test.hta"` |
| RunnerChildProcessId | 328 |
| RunnerChildProcessCommandLine | `"C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe" -nop -Command Write-Host` |

Our goal is to present enough context that you will understand what’s happened, even if you lack expertise in a particular technique, and also to ensure that there’s a consistent experience across all exported functions. You can expect to see the following object outputs for any function:

- TechniqueID: The MITRE ATT&CK technique ID that corresponds with the function. Stating the specific technique explicitly removes any ambiguity about what the test exercised.

- TestSuccess: A value of `true` will indicate that the specific technique executed without issue. Most AtomicTestHarnesses functions will include benign template “payloads” that execute the technique-specific functionality and report back to the test code that they executed successfully. This way, you can trust that if `TestSuccess` is `true`, then any subsequent component in your detection validation pipeline can safely assume that the technique actually executed successfully. Having such confidence can dramatically reduce root cause analysis time when diagnosing test versus detection failures.

- TestGuid: This identifier is unique to every test execution. We feel that it’s important to be able to associate a unique identifier with each test run so that it is possible to link the test you ran to subsequent components of a testing/validation pipeline. Every function implements a -TestGuid parameter and you can manually input your own GUID if you want to be consistent about the output.
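As a minimal sketch of how these three common properties might be consumed, the snippet below supplies a caller-chosen GUID and turns the result into a one-line status. `Get-TestStatus` is a hypothetical helper, not part of AtomicTestHarnesses, and the result object is a stand-in that carries only the three properties described above so that the sketch runs without the module installed.

```powershell
# Hypothetical helper (not part of AtomicTestHarnesses): turn a test result
# into a one-line status that later pipeline stages can log or act on.
function Get-TestStatus {
    param(
        [Parameter(Mandatory, ValueFromPipeline)]
        [psobject] $Result
    )
    process {
        if ($Result.TestSuccess) {
            # The technique ran; missing telemetry would point to a visibility gap.
            "EXECUTED $($Result.TechniqueID) $($Result.TestGuid)"
        } else {
            # The technique did not run; fix the test before judging detections.
            "FAILED $($Result.TechniqueID) $($Result.TestGuid)"
        }
    }
}

# Choose the GUID up front so it can be recorded before the test runs.
$testGuid = [Guid]::NewGuid()

# On a Windows test host with the module loaded, the result would come from:
#   $result = Invoke-ATHHTMLApplication -TestGuid $testGuid
# A stand-in object with the three common properties keeps this sketch self-contained.
$result = [PSCustomObject]@{
    TechniqueID = 'T1218.005'
    TestSuccess = $true
    TestGuid    = $testGuid
}

$status = $result | Get-TestStatus
$status
```

Because the GUID is chosen before execution, the same value can be written to a test log and later searched for in whatever telemetry store sits downstream.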

All remaining object output will pertain to the specific technique. Our goal with object output is to supply defenders, detection engineers, and test engineers with all of the relevant information they would require to both confirm event telemetry and validate detection logic. Generally, we strive to capture the “what” and “how” behind each test run. In the case of HTA execution, HTA content can contain and execute embedded Windows Script Host (WSH) script code (e.g., VBScript or JScript). In designing a function that automates this process, we would ideally want to capture the following “what” and “how”:

- What did the HTA execute? (i.e., the WSH script code)

- What was the HTA runner? (i.e., the host process that executed the HTA)

- What relevant operating system artifacts were introduced?

- How did the HTA execute? (i.e., there are many different ways to invoke HTA content. Which variation was used?)

After running one of the AtomicTestHarnesses functions without arguments and reading through the contextual object output, you may then want to investigate the suite of parameters that are supported to invoke all identified variations of the technique. You can begin to get a sense of the possible test variations by running Get-Help and reviewing the syntax options it lists for the function.

When Get-Help lists multiple syntax options for a function, it means that the PowerShell function/cmdlet has multiple parameter sets implemented. A parameter set is a group of parameters that can be used together in a single invocation; only one parameter set can be in effect at a time. We try to design each parameter set to comprise all available technique inputs for every identified technique variation. Based on open source and internal research, we identified the following technique variations for HTA execution (note that six are listed, in line with the implemented parameter sets):

- HTA execution from a file using `mshta.exe` as the host runner

- HTA execution from a file simulating a double click. HTA files are a common phishing vector so we wanted to replicate invoking the default file handler for HTA files

- HTA execution by downloading from a URI directly using `mshta.exe` as the host runner. This variation is very commonly used in the wild.

- Inline execution using protocol handlers using `rundll32.exe` as the host runner

- Inline execution using protocol handlers using `mshta.exe` as the host runner

- HTA execution from a file embedded within an executable (i.e., polyglot) using `mshta.exe` as the host runner
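The parameter sets behind variations like these can also be listed programmatically with the metadata that `Get-Command` returns. The sketch below is illustrative only: `Get-ParameterSetSummary` and `Invoke-ExampleHarness` are hypothetical names, and the stand-in function exists purely so the example runs without the module. With AtomicTestHarnesses loaded, the same helper could be pointed at `Invoke-ATHHTMLApplication` instead.

```powershell
# Hypothetical helper: list each parameter set of a command along with
# its parameters, leaving out the common parameters PowerShell adds.
function Get-ParameterSetSummary {
    param(
        [Parameter(Mandatory)]
        [string] $CommandName
    )

    $common  = [System.Management.Automation.Cmdlet]::CommonParameters
    $command = Get-Command -Name $CommandName -ErrorAction Stop

    foreach ($set in $command.ParameterSets) {
        [PSCustomObject]@{
            ParameterSet = $set.Name
            IsDefault    = $set.IsDefault
            Parameters   = @($set.Parameters.Name | Where-Object { -not $common.Contains($_) })
        }
    }
}

# Stand-in function with two parameter sets (hypothetical, for illustration only).
function Invoke-ExampleHarness {
    [CmdletBinding(DefaultParameterSetName = 'File')]
    param(
        [Parameter(ParameterSetName = 'File')]
        [string] $FilePath,

        [Parameter(Mandatory, ParameterSetName = 'Uri')]
        [string] $Uri,

        # No ParameterSetName, so this parameter belongs to every set.
        [Guid] $TestGuid = [Guid]::NewGuid()
    )
}

$summary = Get-ParameterSetSummary -CommandName Invoke-ExampleHarness
$summary | Format-Table -AutoSize
```

Each row corresponds to one way of invoking the function, which is a quick way to see how many variations a harness exposes before reading the full help text.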

Considering all the implemented technique variations, you could spend a while manually experimenting with all the different options, and we certainly encourage you to do so. However, what if you wanted to test all supported variations in order to validate visibility across your detection pipeline? Keeping track of all possible permutations is challenging, and executing everything manually is time-consuming and error-prone. Fortunately, with each new AtomicTestHarnesses release, we will supply Pester tests that automate and validate the process of executing all implemented variations.

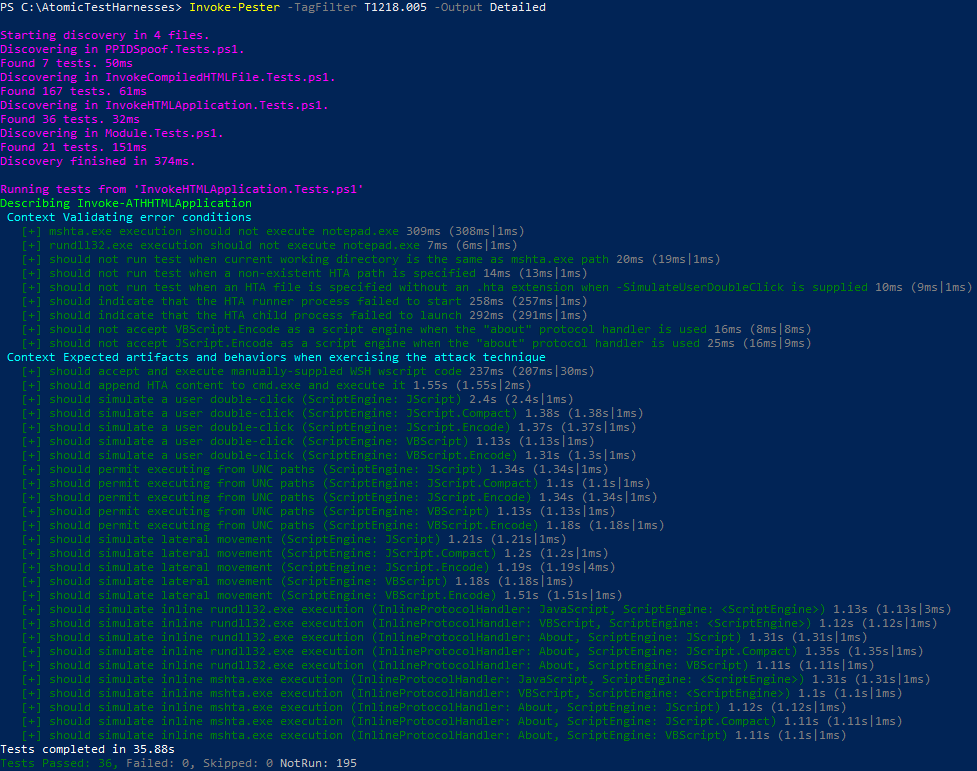

Automatically validating technique execution

Pester is the official testing framework for PowerShell, which made it a natural choice for both testing and validation of AtomicTestHarnesses functionality. What’s great about Pester is its ability to reason over expected behavior, something that Atomic Red Team and Invoke-Atomic were not designed to do.

Considering it may not always be obvious what the expected results for a specific test should be, it is our opinion that the developers of the test harness code should capture and validate expected results. All exposed functionality in AtomicTestHarnesses will come with Pester test code to automate the process of execution and validation. Each test suite is also tagged accordingly using the relevant MITRE ATT&CK technique ID. With our test code, we strive to implement at least one test for every parameter-set permutation. Think of this approach as attempting to maximize code coverage in the traditional unit-testing sense, but, in our case, we’re striving to maximize “detection coverage” by supplying the same level of modular input that an attacker would have when leveraging a technique in an evasive manner.

The above image shows the results of a Pester test for a single function. Given that green text is an indication of success, how do we interpret the results? For starters, a passing test will signify that the technique executed successfully and that the test generated the expected artifacts. With confidence that everything executed as expected, how you interpret the results will be entirely up to you.

If testing endpoint detection and response (EDR) sensor optics, you would likely want to take the output of each test and, through some sort of automated process, validate that your EDR platform observed (note: “observe” as opposed to “detect”) every instance executing. If, for example, an EDR platform does not have optics to support observing every test instance, then it can be inferred that it won’t have the ability to detect suspicious behaviors that run on top of the underlying technique.
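One way to picture that automated process is a small correlation step that takes the harness output and checks whether a matching process event appears in exported telemetry. The sketch below rests on assumptions: `Test-TechniqueObserved` is a hypothetical helper, the telemetry schema (`ProcessId`, `CommandLine`) is invented for illustration, and the second test result is made-up sample data. Only the harness property names are taken from the example output shown earlier.

```powershell
# Hypothetical helper: for each harness result, report whether a matching
# process event exists in the telemetry export.
function Test-TechniqueObserved {
    param(
        [Parameter(Mandatory)]
        [object[]] $TestResult,

        [Parameter(Mandatory)]
        [AllowEmptyCollection()]
        [object[]] $ProcessEvent
    )

    foreach ($test in $TestResult) {
        # Match on the runner's process ID and command line as reported by the harness.
        $match = $ProcessEvent | Where-Object {
            $_.ProcessId -eq $test.RunnerProcessId -and
            $_.CommandLine -eq $test.RunnerCommandLine
        }

        [PSCustomObject]@{
            TestGuid    = $test.TestGuid
            TechniqueID = $test.TechniqueID
            Executed    = [bool] $test.TestSuccess
            Observed    = [bool] $match
        }
    }
}

# Sample harness results; the second entry is made-up data for illustration.
$results = @(
    [PSCustomObject]@{
        TestGuid          = '7f6921aa-a5fb-483e-91a3-4160e5e695e6'
        TechniqueID       = 'T1218.005'
        TestSuccess       = $true
        RunnerProcessId   = 6716
        RunnerCommandLine = '"C:\WINDOWS\System32\mshta.exe" "C:\Users\TestUser\Desktop\Test.hta"'
    },
    [PSCustomObject]@{
        TestGuid          = '11111111-1111-1111-1111-111111111111'
        TechniqueID       = 'T1218.005'
        TestSuccess       = $true
        RunnerProcessId   = 7020
        RunnerCommandLine = '"C:\WINDOWS\System32\mshta.exe" "C:\Users\TestUser\Desktop\Test2.hta"'
    }
)

# Sample telemetry export (assumed schema): only the first test was seen.
$events = @(
    [PSCustomObject]@{
        ProcessId   = 6716
        CommandLine = '"C:\WINDOWS\System32\mshta.exe" "C:\Users\TestUser\Desktop\Test.hta"'
    }
)

$coverage = Test-TechniqueObserved -TestResult $results -ProcessEvent $events

# Tests that executed but were never observed are the visibility gaps.
$coverage | Where-Object { $_.Executed -and -not $_.Observed }
```

A real pipeline would likely also match on hostname and a time window, since process IDs are reused; this sketch keeps to two fields to show the observe-versus-detect distinction.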

For more information on running Pester tests with AtomicTestHarnesses, please refer to the documentation. There’s a lot more to cover about testing philosophy and methodology, but we’ll leave that further deep dive for another day.

AtomicTestHarnesses meets Invoke-Atomic

We have also committed the base parameter sets for each AtomicTestHarnesses function as Atomic tests that may be consumed by Invoke-Atomic. To get started, follow the standard procedure to install Invoke-Atomic and download the atomics directory. The following video demonstrates this process as well:

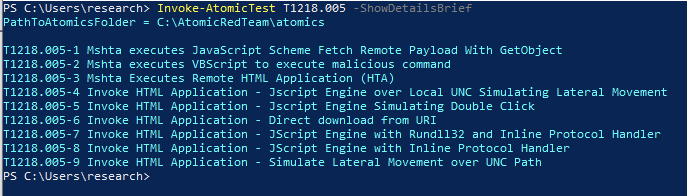

Continuing with our Mshta (T1218.005) example, let’s confirm that the new T1218.005 tests have downloaded by running the following:

Invoke-AtomicTest T1218.005 -ShowDetailsBrief

Test numbers four through nine will use the Invoke-ATHHTMLApplication function, and we can confirm that by reviewing the details of the test number itself with the following command:

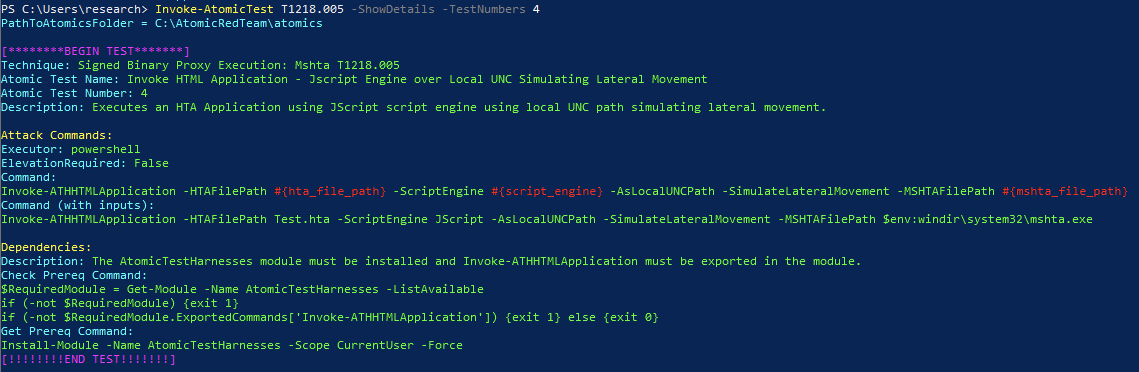

Invoke-AtomicTest T1218.005 -ShowDetails -TestNumbers 4

We see under “Dependencies” that Invoke-Atomic checks whether AtomicTestHarnesses is loaded. If AtomicTestHarnesses is not present, running Invoke-AtomicTest with -GetPrereqs for T1218.005, or for the specific test number, will finish the setup by installing the module from the PowerShell Gallery.

Now we can execute all or a single test. Here we’ll run a single test with Invoke-Atomic:

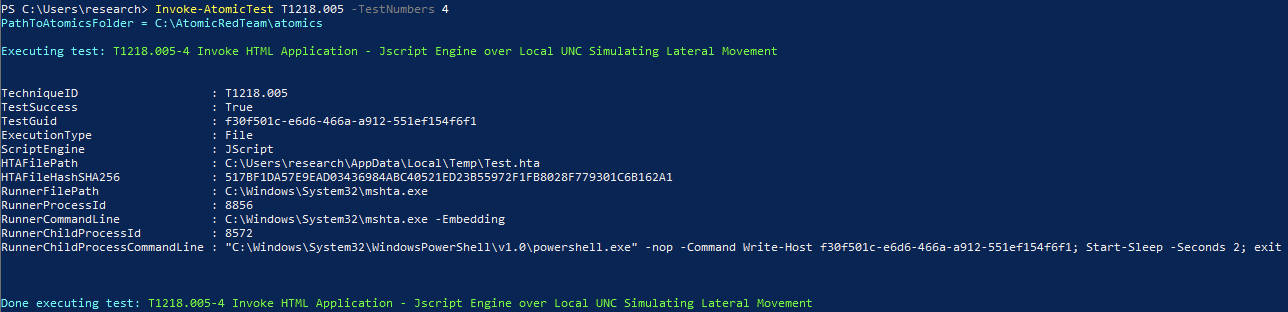

Invoke-AtomicTest T1218.005 -TestNumbers 4

If you’re running the test on your own, it should look like this:

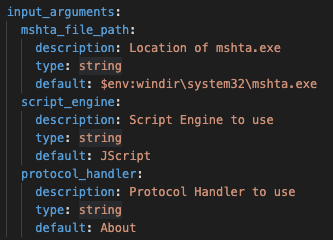

As testing commences and validation is being completed, we may want to create or modify current tests to extend our testing capabilities. Fortunately, it’s as simple as modifying the input arguments.

By modifying the input arguments on a single test, we are able to exhibit a new behavior.

Now it’s your turn

Now that you know what AtomicTestHarnesses is and how to use it, it’s time to take this tool into your environment and figure out if your detection analytics stand up to the many variations on the following techniques:

- T1134.004: Access Token Manipulation: Parent PID Spoofing

- T1218.001: Signed Binary Proxy Execution: Compiled HTML File

- T1218.005: Signed Binary Proxy Execution: Mshta

For our part, these tests have helped us develop more robust coverage against a wide array of threats while also validating the efficacy of our existing coverage.

This is just the beginning for AtomicTestHarnesses. We’ll be hosting a deep dive into the tool this coming Friday, October 30 at 11 am MT as part of our ongoing Atomic Friday webinar series.

While AtomicTestHarnesses isn’t currently accepting community-contributed test harness code, we hope to change that once we feel confident that we’ve educated the community about the design and implementation process. In the meantime, check out the repo, run some tests, and fire away with pull requests for any bugs you might find.

In part two of this blog series, we’ll explain in great depth our research methodology for identifying relevant variations on a given technique, and how that work ultimately led us to the creation of AtomicTestHarnesses.