Trend

Adversary emulation and testing

More than a quarter of Red Canary’s customers performed some kind of testing in 2023.

Threats attributed to testing represented approximately 24 percent of the threats classified as malicious or suspicious that our team detected in 2023, nearly 6,000 in all. These threats include purple or red team activity, adversary emulation tools and platforms, and more.

In this section, we’ll look closer at the types of organizations performing these tests, along with the threats, tools, and techniques that we detected.

How Red Canary identifies testing activity

Once we’ve detected, investigated, and alerted a customer to a threat, our platform lets them give feedback, including whether the threat has been remediated or will not be remediated. If a threat is not going to be remediated, it’s important that we know why:

- The activity and risk will be accepted

- The activity is authorized

- We incorrectly identified the activity as malicious (a false positive)

- The activity is attributable to some form of adversary emulation or testing

Of note, this data depends on a customer confirming that a threat was related to testing. So, we expect that more testing took place than was confirmed, meaning that many of these statistics should be looked at as minimums.
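To make the "minimum" framing concrete, here is a minimal sketch of how a confirmed-testing share could be computed from customer feedback. The `Feedback` labels, the `NO_FEEDBACK` value, and the sample records are hypothetical stand-ins for illustration; they are not Red Canary's actual data model or data.

```python
from collections import Counter
from enum import Enum


class Feedback(Enum):
    """Hypothetical feedback labels, loosely mirroring the reasons listed above."""
    REMEDIATED = "remediated"
    RISK_ACCEPTED = "risk_accepted"
    AUTHORIZED = "authorized"
    FALSE_POSITIVE = "false_positive"
    TESTING = "testing"
    NO_FEEDBACK = "no_feedback"  # assumption: some threats never receive feedback


def confirmed_testing_share(feedback):
    """Return the fraction of threats that customers confirmed as testing."""
    if not feedback:
        return 0.0
    counts = Counter(feedback)
    return counts[Feedback.TESTING] / len(feedback)


# Made-up sample: 2 of 8 threats were confirmed as testing.
sample = [
    Feedback.TESTING, Feedback.REMEDIATED, Feedback.NO_FEEDBACK, Feedback.TESTING,
    Feedback.AUTHORIZED, Feedback.REMEDIATED, Feedback.FALSE_POSITIVE, Feedback.REMEDIATED,
]
print(f"Confirmed testing share (lower bound): {confirmed_testing_share(sample):.0%}")
```

Any threat left as `NO_FEEDBACK` may still have been a test, which is why a figure computed this way is best read as a floor rather than an exact count.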

Who’s testing the most

In a promising trend, 27 percent of Red Canary customers engaged in some form of testing, including purple or red team activity, at some point during 2023. What’s more, we observed this activity across organizations of all sizes, ranging from those with hundreds of employees to those with many tens of thousands.

By industry, customers in financial services topped the leaderboard, accounting for 25 percent of all testing activity, followed by professional, scientific, and technical services with 12 percent and healthcare with 10 percent. That’s nearly 50 percent of testing activity across just three industries. The manufacturing industry just missed a podium appearance this year. Read the Industry and sector analysis section of this report for more insights related to customers by industry.

At the organization level, a dozen customers across industries performed over 100 tests distributed throughout the year, with a single customer performing over 500 distinct tests.

Top tested threats

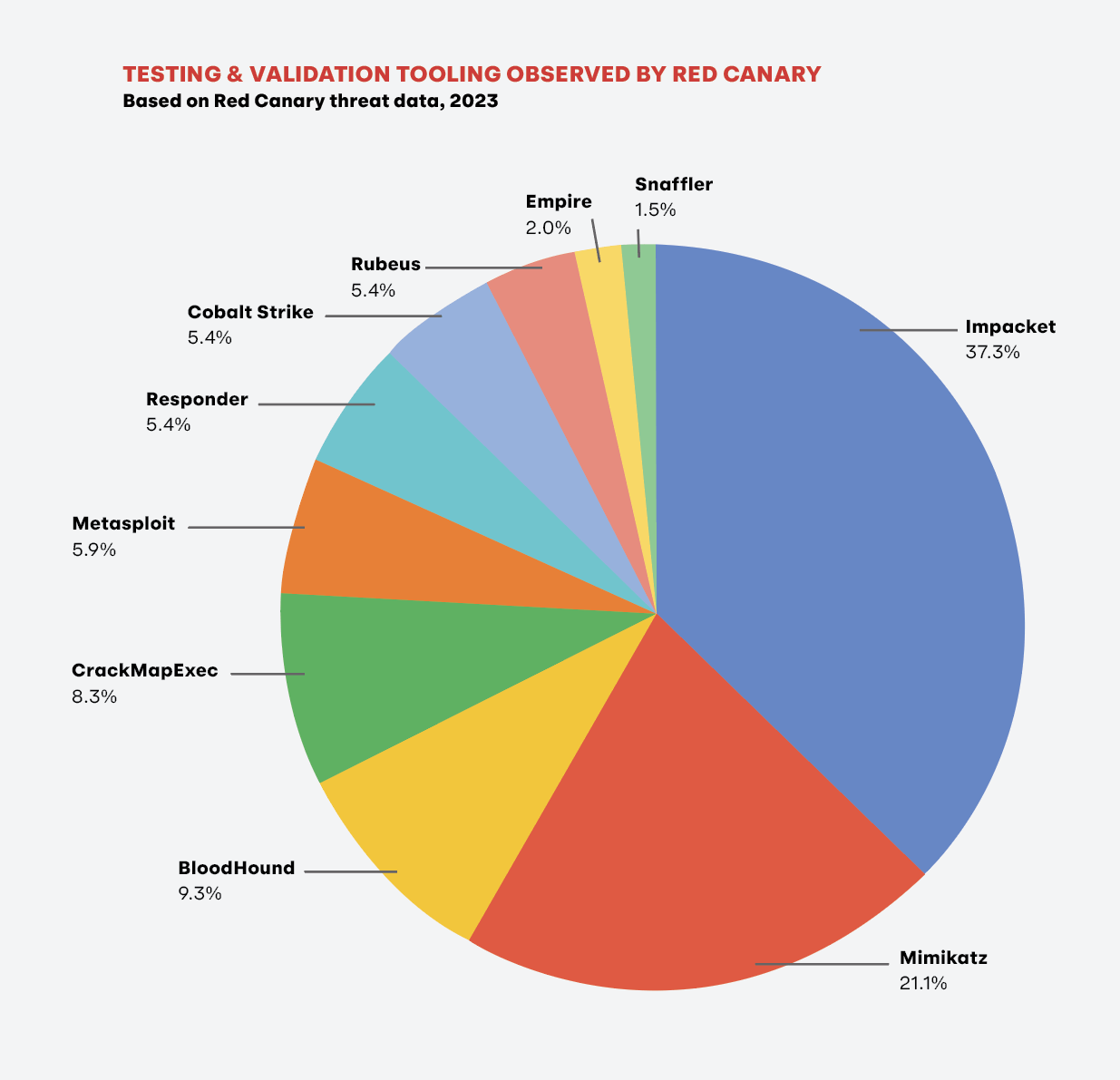

The top threats that customers and their teams tested are representative of the threats that we observe in the wild. In fact, there’s a close correlation not only between the sets of threats, but also between their rankings. Tools like Impacket, Mimikatz, BloodHound, and others are highly prevalent in real-world incidents and appear frequently atop our monthly Intelligence Insights. Our testing data shows that customers are paying attention and putting their technology, their teams, and our own security operations team through their paces.

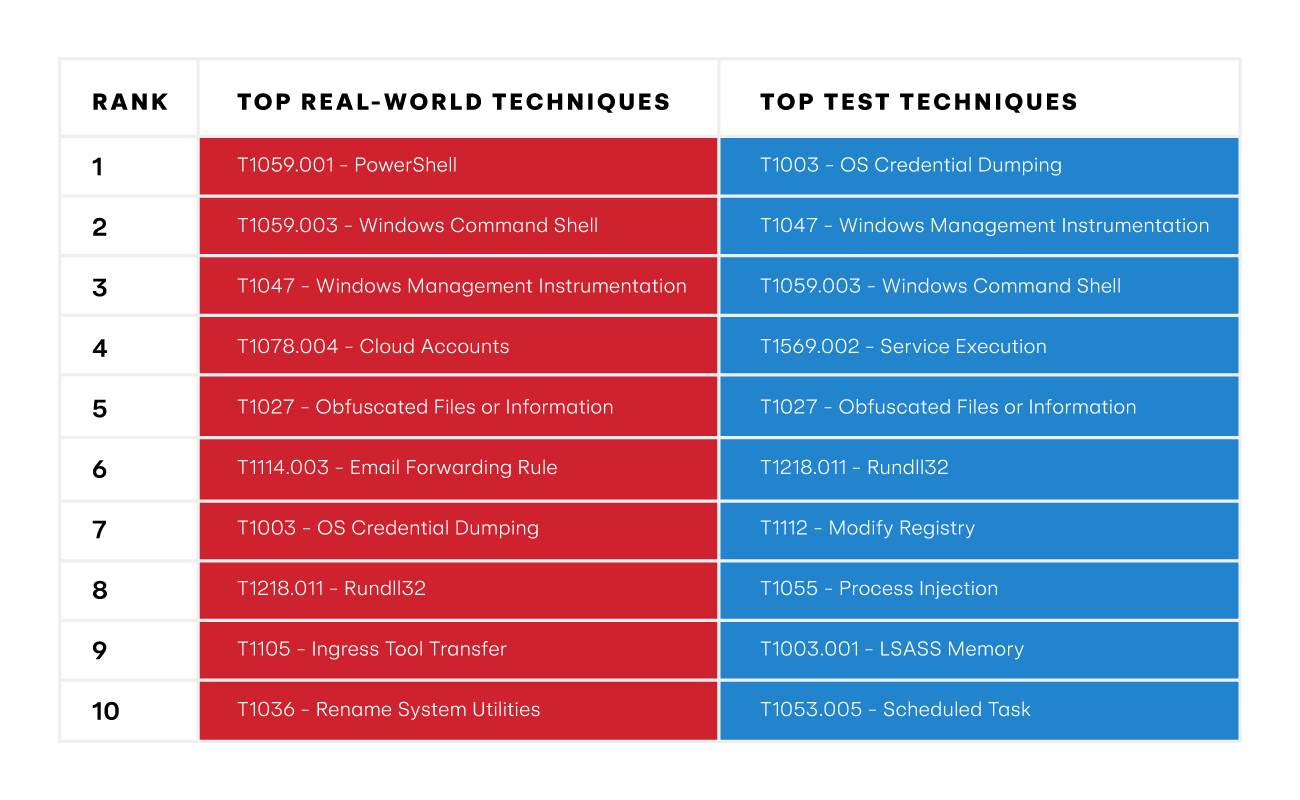

Top tested techniques

Take action

This analysis highlights the increased prevalence of testing across organizations, irrespective of size or industry. The quality of open source threat intelligence, coupled with increasingly capable tools for adversary emulation, means that every organization should be testing its defenses regularly, even in the absence of broader investments in cybersecurity and incident readiness.

A simple plan that organizations can adopt:

- Subscribe to sources of high-quality threat intelligence, such as the Red Canary blog.

- Identify prevalent threats, keeping an eye out for prominent initial access vectors in particular.

- Decompose threats into the component techniques and procedures that they leverage.

- Use Atomic Red Team, Invoke-Atomic, and other freely available tools to step through these techniques, or emulate more complete adversary behaviors.
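The last two steps of the plan above can be sketched in a few lines of Python: decompose each threat into ATT&CK technique IDs, then emit one Invoke-Atomic command per technique. The threat-to-technique mapping below is an illustrative assumption, not taken from this report, and `build_test_plan` is a hypothetical helper. The generated commands use `-ShowDetailsBrief`, which lists the available atomic tests for a technique without executing them.

```python
# Illustrative mapping only (an assumption, not from the report); build your
# own from the threat intelligence you follow.
THREAT_TECHNIQUES = {
    "Mimikatz": ["T1003.001"],                 # OS Credential Dumping: LSASS Memory
    "BloodHound": ["T1087.002", "T1069.002"],  # Domain account and group discovery
    "Impacket": ["T1047", "T1003.003"],        # WMI execution, NTDS credential dumping
}


def build_test_plan(threats, mapping=THREAT_TECHNIQUES):
    """Return de-duplicated Invoke-AtomicTest commands, in first-seen order."""
    seen = set()
    commands = []
    for threat in threats:
        # Threats missing from the mapping contribute no techniques.
        for technique in mapping.get(threat, []):
            if technique not in seen:
                seen.add(technique)
                commands.append(f"Invoke-AtomicTest {technique} -ShowDetailsBrief")
    return commands


if __name__ == "__main__":
    for command in build_test_plan(["Mimikatz", "BloodHound", "Impacket"]):
        print(command)
```

Reviewing the listed tests first, and only then running them in an environment where testing is authorized, keeps the exercise deliberate and makes it easier to confirm afterward that the resulting detections were attributable to testing.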