Our customers often ask us to help them understand trends, ranging from emerging threats to how we see the security product landscape evolving. A customer CISO recently asked how we envision artificial intelligence (AI) and technology like ChatGPT impacting the malware ecosystem and, by extension, our ability to keep pace as defenders. We decided to share the resulting discussion with a wider audience.

AI is changing how we build software (and malware is just software)

Much has been made of the impacts of AI on how we build, evolve, and understand software. And malware is just software, albeit with few (or no) redeeming qualities.

Here’s a non-exhaustive list of use cases for AI as it relates to software development, how it may relate specifically to malware, and the impact it might have on defenders:

Making software from scratch

Generate a Python script that listens for requests on TCP port 4000 and responds with the string redcanary.

This could expedite the production of malware, leading to a preponderance of new malware families. While this presents a challenge to defenders in that there could be more malware emerging more frequently, our experience with detection engineering is that the detection analytics that are effective at catching today’s threats are often effective at catching tomorrow’s threats as well.
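To make the prompt above concrete, here is a minimal sketch of the kind of script it might yield. This is our own illustration and not the output of any particular AI tool; the names `RedCanaryHandler`, `ReusableTCPServer`, and `make_server` are hypothetical, and binding to `127.0.0.1` is an assumption made to keep the example local.

```python
import socketserver

RESPONSE = b"redcanary"


class RedCanaryHandler(socketserver.BaseRequestHandler):
    """Replies to every connection with a fixed string."""

    def handle(self):
        # Read the client's request (up to 1 KiB); its content is ignored.
        self.request.recv(1024)
        # Respond with the fixed string from the prompt.
        self.request.sendall(RESPONSE)


class ReusableTCPServer(socketserver.TCPServer):
    # Allow quick restarts without waiting for the socket to leave TIME_WAIT.
    allow_reuse_address = True


def make_server(host="127.0.0.1", port=4000):
    """Build (but do not start) a TCP server listening on host:port."""
    return ReusableTCPServer((host, port), RedCanaryHandler)


# To run the listener: make_server().serve_forever()
```

Nothing here is novel, which is the point: a few lines of standard-library code are enough to satisfy the prompt, and the resulting network listener looks the same to a defender no matter who or what wrote it.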

Morphing existing software

Rewrite this Python program so that it is functionally the same but the code isn’t identical.

Ultimately, this capability isn’t fundamentally different from the crypters that malware creators have been leveraging for years to evade signature-based detection technologies and methods.
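A small sketch shows why rewritten code defeats a static fingerprint. The two `total` implementations, and the helpers `sha256_of` and `run_total`, are hypothetical examples of ours; hashing source text stands in, as a simplifying assumption, for hashing a compiled binary.

```python
import hashlib

# Two hypothetical implementations with identical behavior but different text.
ORIGINAL = '''
def total(values):
    result = 0
    for v in values:
        result += v
    return result
'''

MORPHED = '''
def total(items):
    return sum(item for item in items)
'''


def sha256_of(source: str) -> str:
    """Static fingerprint of the code, as a signature-based tool might compute."""
    return hashlib.sha256(source.encode("utf-8")).hexdigest()


def run_total(source: str, values):
    """Load the source into a fresh namespace and call its total() function."""
    namespace = {}
    exec(source, namespace)
    return namespace["total"](values)


if __name__ == "__main__":
    print("same fingerprint:", sha256_of(ORIGINAL) == sha256_of(MORPHED))
    print("same behavior:", run_total(ORIGINAL, [1, 2, 3]) == run_total(MORPHED, [1, 2, 3]))
```

The fingerprints differ while the results match, which is the same outcome a crypter produces without any AI involved.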

Understanding how an existing piece of software works

Describe what this Python method is doing in plain language.

This use case for AI may actually benefit defenders more than it would benefit adversaries, as it will allow them to better understand what a specific strain of malware is designed to do.

Changing the functionality of an existing piece of software

Add a method to this program that does X.

This could make malware more dynamic and modular, offering more flexibility to adversaries. This could present a challenge for defenders, particularly in incident handling and response, as they attempt to classify malware and understand its purpose and impact.

Porting an existing piece of software to a different language

Recreate this Python module and its functionality in Go.

Like the previous examples, being able to quickly translate malicious code across different programming languages will offer a new degree of flexibility to malware authors, potentially challenging defenders to create new compensatory security controls.

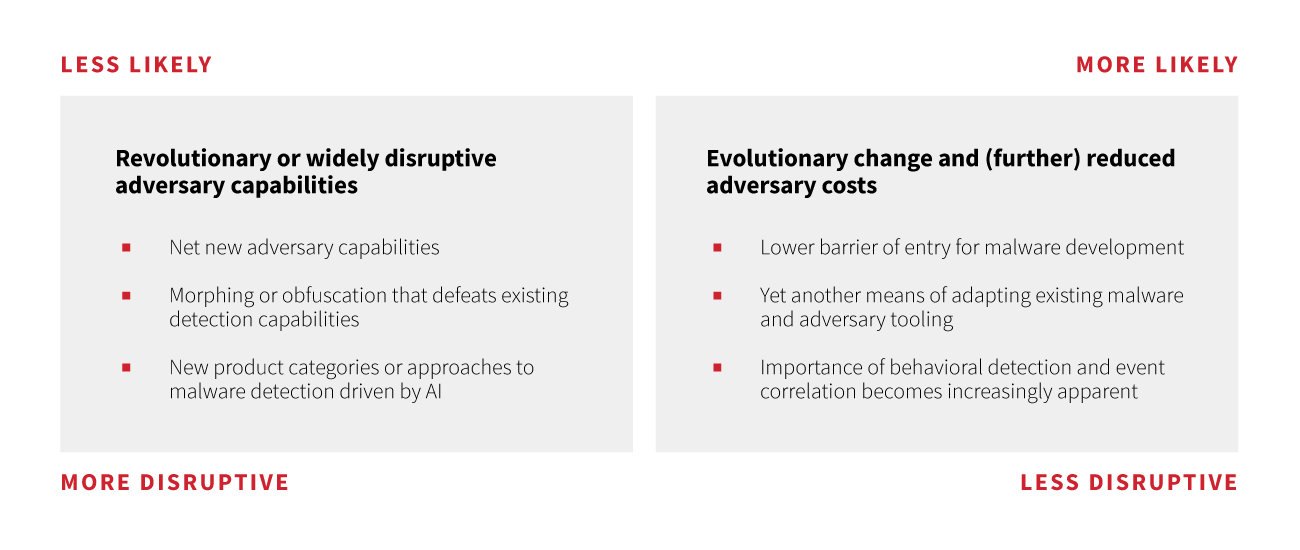

We’ll use these use cases to frame how we expect AI to affect the malware ecosystem, including some things that seem less likely to materialize soon (horizon concerns), but also some ways that we expect adversaries to leverage AI to further reduce their costs while increasing effectiveness.

The big worry: Using AI to develop net new malware capabilities

It seems unlikely that we will see AI used in the near future to create truly new or novel malware capabilities. When we talk about capabilities in this context, we mean the implementation of a feature or functionality that has not previously been observed (yes, there’s plenty of malware that the industry at large has not observed, but that is a different discussion unrelated to AI). The implication here is that AI would enable the creation of software that an unassisted human previously could not devise or implement on their own.

The justification for this thinking is two-fold: First, it is possible but not likely that AI will lead to discovery of new software attack paths. In the context of malware, this would mean identifying new means of gaining execution, moving laterally from one system or platform to another, and more. Second, malware generated or modified by AI cannot change the characteristics of target platforms any more than human adversaries. AI may change how malware works, but it can’t magically change what malware needs to do to help the adversary accomplish their objectives.

Of all of the potential use cases, the use of AI to devise new malware capabilities would be among the most concerning, but also the least likely. AI will absolutely be used to create new malware. AI is being used to create new malware today. But AI is not creating new malware that redefines the state of the art, or that renders existing defensive approaches ineffective.

The more likely impact: Reducing adversary costs

Adversaries’ modus operandi is trying a bunch of things, and where they are able to identify something that works for them (i.e., something that isn’t responded to effectively by defenders), they have found some runway and will capitalize on it. And because adversaries are running businesses, they don’t want to do things that are more costly when a less costly solution will work.

Everything from repurposing of offensive security tools to inefficient software update systems to poor communication of vulnerability information (and more) contributes to reduced costs to adversaries. The near-term effect of AI on the malware ecosystem will likely be the same: It will be yet another tool they use to tweak, experiment, and generally evolve malware with the goal of evading defenses.

AI-morphing malware

What it means to morph something is heavily contextual. For purposes of this discussion about malware, we take morphing to mean changing the character or attributes of a piece of malware but not altering its capabilities or functionality in any way. Malware is morphed, by whatever means, to make fingerprinting, blocking based on static indicators, and other types of traditional detection or mitigation difficult. A specific concern that we’ve been asked about is whether we expect to see AI-morphed malware and, in particular, whether we expect it to challenge existing defensive paradigms or detection capabilities.

The application of AI to morph malware is an unfortunate use case for the technology. However, as defenders we’ve long been accustomed to adversaries using a broad and ever-evolving set of tools and systems to take an existing malware payload and produce countless variations to complicate specific defense measures.

- Early implementations used compiler configurations or changes to produce unique outputs given the same code as input. These can be effective but don’t scale particularly well.

- Packers and crypters, which typically encrypt, compress, or otherwise obfuscate files, are regularly used to reproduce functionality while minimizing the signatures or indicators carried over between builds. Adversaries design build systems around them to produce many binary files with identical functionality but different signatures.

- More recently, we’ve seen things like the ransomware-as-a-service (RaaS) ecosystem implement systems that use a variety of techniques to produce custom malware, configurations, and other tooling that are unique to each victim, and they are doing it at significant scale.

Importantly, morphing malware is only intended to defeat superficial means of detection. The techniques that threats abuse, in aggregate, don’t change much. Anyone performing detection based on behaviors will easily detect one, one hundred, or thousands of pieces of malware that eventually exhibit one of a relatively limited number of observable events.
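The contrast between superficial and behavioral detection can be sketched in a few lines. Everything below is a hypothetical illustration under simplifying assumptions: the `ProcessEvent` fields, the placeholder hash strings, and the shadow-copy deletion rule are ours and do not represent any specific product's telemetry or analytics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessEvent:
    parent_sha256: str  # hash of the binary that launched the process; differs per morphed build
    command_line: str   # what was actually executed on the endpoint


# Signature-style detection: only builds we have already seen are known.
KNOWN_BAD_HASHES = {"hash-of-build-0"}


def matches_signature(event: ProcessEvent) -> bool:
    return event.parent_sha256 in KNOWN_BAD_HASHES


def matches_behavior(event: ProcessEvent) -> bool:
    # Hypothetical analytic: any process deleting volume shadow copies.
    cmd = event.command_line.lower()
    return "vssadmin" in cmd and "delete" in cmd and "shadows" in cmd


def count_detections(events):
    """Return (signature hits, behavior hits) for a list of events."""
    by_signature = sum(matches_signature(e) for e in events)
    by_behavior = sum(matches_behavior(e) for e in events)
    return by_signature, by_behavior


# One thousand morphed builds, each with a unique hash, all doing the same thing.
variants = [
    ProcessEvent(f"hash-of-build-{i}", "vssadmin.exe delete shadows /all /quiet")
    for i in range(1000)
]
```

Calling `count_detections(variants)` yields a single signature hit against one thousand behavior hits: every build carries a new hash, but each one eventually has to run the same observable command.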

So, while morphing malware is always somewhat of a concern, it is unlikely that AI will enable novel techniques for doing this that are more scalable, effective, or difficult to detect than any of the techniques that adversaries have been using for decades.

Using AI to understand and adapt malware

The difference between morphing malware and adapting it depends on whether the new piece of software has unique features or functionality. And the first step in adding new functionality is understanding the malware’s current state. One potential use for AI that is related to reducing adversary cost is that it may lower the barrier of entry for understanding, and then changing, the malware’s code and functionality.

We’re far past the point where adversaries need to be expert, or even experienced, computer scientists. The “as a service” ecosystem has enabled a generation of adversaries that need to understand business, customer service, and victims’ various points of vulnerability more than they understand computer science.

One thing to watch as it relates to AI is whether this new technology further enables adversaries to adapt or improve upon capabilities without any programming experience. Optimistically, AI tools may also be a boon to defenders, making it easier for analysts with less software development experience to understand new malware.

Porting software to new languages or platforms

A type of change that we expect to be less common but more impactful would be porting entire programs, large or small, to new languages or platforms.

As a brief aside, it’s helpful to understand aspects of how we as defenders gain leverage when it comes to threat detection, and a good part of this story is related to languages or runtimes. Take PowerShell for example: This is a powerful programming language, and arguably a platform unto itself. There are myriad legitimate uses for PowerShell, and conversely there are limitless ways to abuse PowerShell at virtually every stage of an intrusion. This may sound despairing, but whenever an adversary invests heavily in any given toolkit, defenders are presented with an opportunity. We can watch PowerShell closely, focus our efforts on improving our understanding of related tradecraft, build ever-improving baselines, and double down on detection coverage and testing. This takes time, but it’s a worthwhile investment that applies pressure to the adversary.

Should we see AI used to port any substantive malware or set of tooling to a new language, we would need to gain a deep understanding of a new set of interpreters and dependent subsystems, potentially on multiple platforms at once, and implement robust observability, detection, and mitigation for them. Even assuming that functionality has not changed at all, an entirely new and potentially expansive set of moving parts, artifacts, and adversary opportunities must now be understood and accounted for.

This portability concern surely exists today, although we’re not yet aware of any of the largest, most complex, or most impactful adversary tools being re-implemented in this manner. Whether this comes to pass in a manner that goes beyond porting of individual or standalone tools remains to be seen, but this particular “misuse case” for AI is a worthy thought experiment for mature detection systems and teams:

- Could you respond to this today?

- What changes to your existing systems would be required to respond to this type of change, and how much time would be required to react?

- Can you take proactive, cost-effective steps to prepare for this eventuality today?

Key takeaways and open questions

The most apparent and likely impact of AI on the malware ecosystem is centered around reduced adversary costs, as this new technology is leveraged to further increase the pace of incremental or evolutionary changes to malicious software. However, this is not to say that we’re out of the woods. There are absolutely a number of open questions, emerging use cases, and clever implementations being considered.

As defenders, AI will present us with some challenges, but it will also present us with opportunities. Some potential opportunities include:

- AI could lead to a rise in lower quality malware that doesn’t work properly, because the adversary wasn’t smart enough to notice or is leveraging AI in a manner that doesn’t optimize for quality.

- Artifacts introduced by specific AI technologies or workflows could result in detection, attribution, and mitigation opportunities.

- The same aspects of AI used by adversaries to reduce their costs are, of course, available to defenders!

From a defender’s perspective, the single most important thing to keep in mind is that while AI affords the adversary many advantages, AI is still subject to most or all of the same constraints as a human adversary. Importantly, AI can’t change how targeted platforms work and the primitives that dictate how software behaves and can be observed. And the stages of an intrusion from initial access and beyond, including identified tactics and techniques, are still required for the adversary’s success. Thus, our investments in observability, deep behavioral detection, and detection opportunities related to highly prevalent techniques will continue to effectively defend against humans or bots.

In terms of challenges that we can expect, there are a wide variety of targeting, reconnaissance, and early-stage adversary activities that make the eventual delivery of malware more effective, irrespective of whether AI is leveraged in the creation or modification of the malware itself.

- Synthesizing target research, for instance automatically correlating employers, colleagues, projects, and other public research

- Generating highly compelling social engineering lures that could improve click-through rates on phishing and website lures (see WormGPT)

- Using voice-based AI to scale fraud as well as early-stage attack tactics that require some level of “human” interaction, such as phone calls that convince victims to provide sensitive information, either on their own or in conjunction with text-based lures

Lastly, and perhaps most importantly, we should expect both the cybersecurity and criminal communities to continue to cobble together components of these and other use cases in novel ways, with the goal of vertically integrating the various phases of an attack into comprehensive attack frameworks.