Cloud applications are essential for modern productivity, but how today’s employees access them can introduce subtle security risks. One of the more significant yet often overlooked threat vectors involves OAuth, the industry standard for authorization that grants apps access to your data without sharing your password.

While it seems convenient, a malicious OAuth application can become a persistent backdoor into your organization’s most sensitive information.

OAuth stands for open authorization, not authentication; this distinction is important. Authentication verifies who you are, while authorization determines what you’re allowed to do. When you consent to an OAuth app, you’re not just logging in; you’re handing over a set of keys, in the form of tokens, which prove your identity and grant the application specific permissions to act on your behalf.

In Azure, users and administrators can grant OAuth applications access to resources managed by or protected by Microsoft Entra ID. Entra ID is the authentication server that handles users’ information and issues tokens to grant and revoke access. However, if a user is ever tricked into authorizing a malicious app, adversaries could maintain that access even if the user’s password is changed.

Let’s break down how these attacks work in the real world and what you can do to stop them.

Anatomy of a real-world Azure OAuth attack

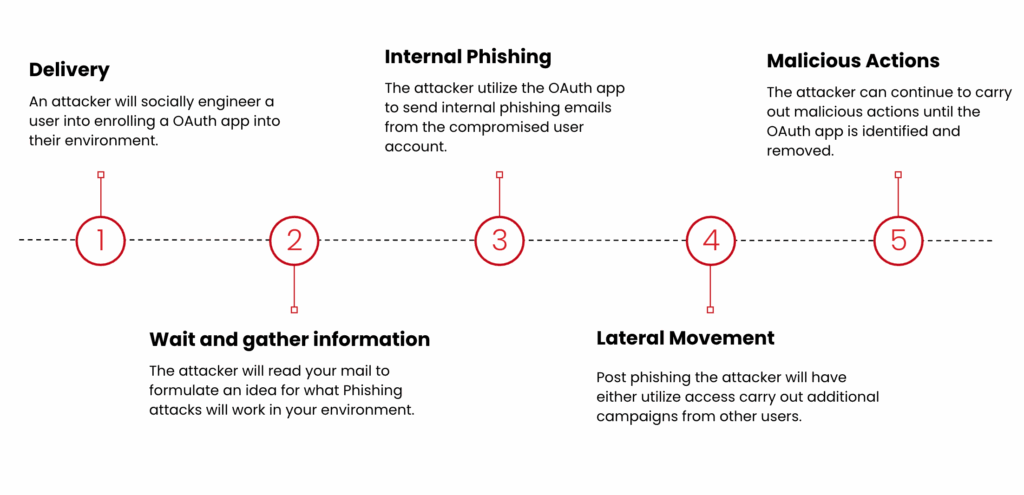

Red Canary’s Threat Hunting team recently investigated an incident that illustrates how stealthy and patient an OAuth application attack can be. The campaign unfolded as follows:

Delivery

In this particular incident, the organization onboarded with Red Canary after the malicious application had already been deployed, so the earliest stage has to be reconstructed. Even so, the attack appears to have started with a classic social engineering lure. Our analysis suggests that an employee likely received a phishing email promoting a new AI application designed to improve their workflow. The user clicked the link and was likely presented with a legitimate-looking Microsoft consent screen asking for permission to access their account. They accepted.

Dormancy and reconnaissance

For the next 90 days, the malicious application did nothing overtly harmful. Instead, it sat dormant, using granted permissions (like Mail.Read) to learn. It analyzed the user’s mailbox, studying communication patterns, common subject lines, and internal conversations to understand the business’s rhythm and the user’s language.

Internal phishing

After its reconnaissance phase, the app launched a highly targeted internal phishing campaign. Because the emails were sent from a trusted, compromised internal account and mirrored the language and topics employees were used to seeing, the campaign was incredibly successful.

Lateral movement

The internal phishing wave led to a number of compromised user accounts, allowing the adversary to move laterally throughout the environment.

Following Red Canary’s analysis, the most critical takeaway from the attack is that traditional remediation steps like resetting user passwords and revoking active sessions are ineffective against this threat. The adversary’s access is tied to the OAuth application’s permissions, not the user’s password. Until the root cause—in this case the malicious OAuth application itself—is identified and its permissions are revoked, the attack chain remains unbroken.
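
To make that takeaway concrete, here is a deliberately simplified toy model in Python. It is not how Entra ID is implemented and it ignores token lifetimes entirely; the `ToyTenant` class, its methods, and the `hypothetical-ai-helper` app ID are all invented for illustration. It only mirrors the point above: the consent grant is a separate record from the password and the user's sessions, so it survives until someone removes it.

```python
from dataclasses import dataclass, field


@dataclass
class ToyTenant:
    """Toy model only: passwords, sessions, and consent grants live in separate stores."""
    passwords: dict[str, str] = field(default_factory=dict)
    sessions: set[str] = field(default_factory=set)             # users with an active session
    grants: set[tuple[str, str]] = field(default_factory=set)   # (user, app_id) consent grants

    def consent(self, user: str, app_id: str) -> None:
        self.grants.add((user, app_id))

    def reset_password(self, user: str, new_password: str) -> None:
        self.passwords[user] = new_password   # does not touch grants

    def revoke_sessions(self, user: str) -> None:
        self.sessions.discard(user)           # does not touch grants

    def revoke_grant(self, user: str, app_id: str) -> None:
        self.grants.discard((user, app_id))

    def app_can_act_for(self, app_id: str, user: str) -> bool:
        return (user, app_id) in self.grants


tenant = ToyTenant(passwords={"alice": "old"}, sessions={"alice"})
tenant.consent("alice", "hypothetical-ai-helper")

# Traditional remediation: the grant is still there afterwards.
tenant.reset_password("alice", "new")
tenant.revoke_sessions("alice")
print(tenant.app_can_act_for("hypothetical-ai-helper", "alice"))  # True

# Removing the app's grant is what breaks the chain in this model.
tenant.revoke_grant("alice", "hypothetical-ai-helper")
print(tenant.app_can_act_for("hypothetical-ai-helper", "alice"))  # False
```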

How to hunt for malicious OAuth apps

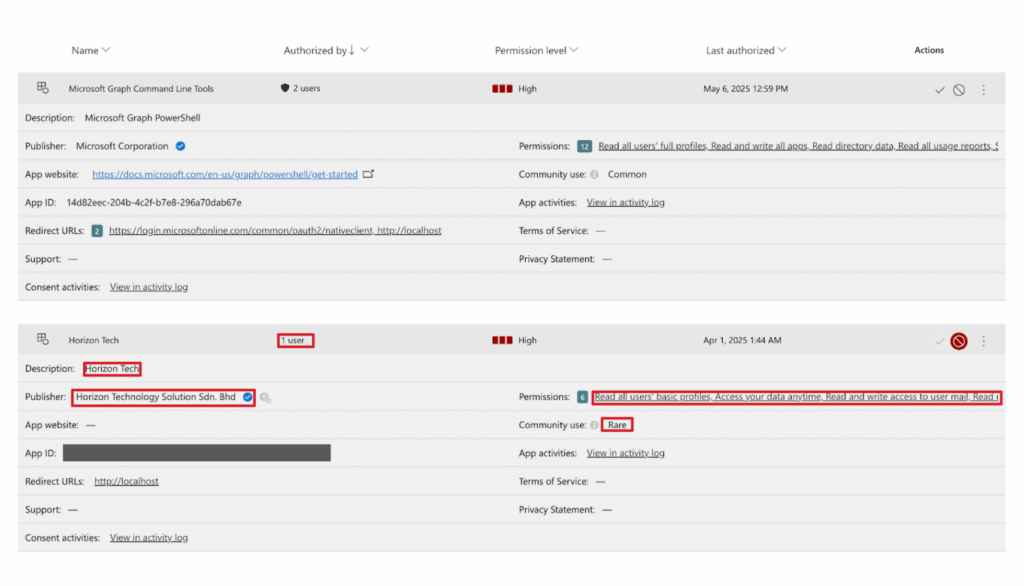

Distinguishing a malicious OAuth app from a legitimate one requires an eye for detail. If you have Microsoft Defender, you can start by auditing applications in your Defender portal.

You’ll want to review the app’s name (which can always be spoofed or trojanized), its publisher, and its permissions. You’ll also want to pay attention to the app ID and who authorized the app so you can track its use through your environment.

In the incident that Red Canary’s Threat Hunting team uncovered, the malicious app’s publisher, Horizon Technology Solution Sdn. Bhd, had a blue checkmark, as seen in the image above. That suggests either that the publisher was compromised from the get-go or that it managed to slip through the verification process.

A “rare” community use is one of the strongest signals that an app may be custom-built for a targeted attack. While new versions of legitimate apps can sometimes appear as “rare” initially, this status combined with high-level permissions and a low user count in your organization warrants an immediate investigation.

When it comes to differentiating between a legitimate app and a suspicious app, here are some other key indicators to look for:

| Indicator | Legitimate app ✅ | Suspicious app 🚩 |
|---|---|---|
| Publisher | Usually verified with a blue checkmark (e.g., Microsoft Corp.) | Unverified, or verified but a compromised/spoofed name |
| Community use | Common across other Azure tenants | Rare. This is a major red flag |
| Permissions | Scoped to what is necessary for it to function | Overly broad and high-risk (e.g., |
| Authorization | Used by many users across the enterprise | Authorized by only one or a small number of users |
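
If you export your app inventory, the indicators in the table can be turned into a quick triage pass. The sketch below is one way to do that in Python; the `AppRecord` fields, the `red_flags` function, and the `few_users` threshold of 10 are assumptions made for illustration, not fields of any Microsoft export. The `high_privilege` flag is left as a reviewer's judgment rather than derived from a permission list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppRecord:
    """Hypothetical shape for one exported app; adapt to your own inventory."""
    name: str
    publisher_verified: bool
    community_use: str       # e.g. "common", "uncommon", "rare"
    high_privilege: bool     # reviewer's call: broad or high-risk permissions
    consenting_users: int


def red_flags(app: AppRecord, few_users: int = 10) -> list[str]:
    """Return the table's suspicious indicators that apply to this app."""
    flags = []
    if not app.publisher_verified:
        flags.append("unverified publisher")
    if app.community_use.lower() == "rare":
        flags.append("rare community use")
    if app.high_privilege:
        flags.append("broad or high-risk permissions")
    if 0 < app.consenting_users < few_users:
        flags.append("authorized by few users")
    return flags


# A verified publisher does not clear an app on its own, as the incident showed.
suspect = AppRecord("hypothetical-ai-helper", True, "rare", True, 1)
print(red_flags(suspect))
```

An app that collects several flags at once, like the example here, is the combination worth investigating first.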

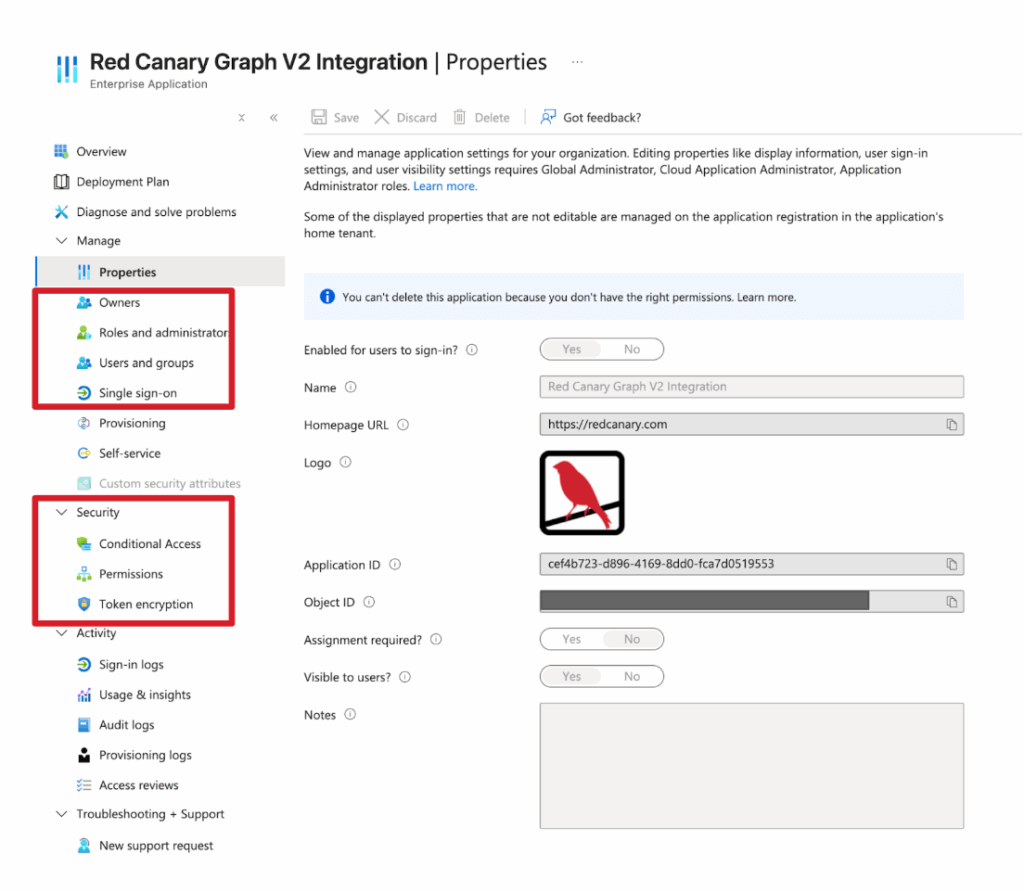

If you’re inspecting an OAuth application, its permissions and logs can be found within your Entra Application portal. Specifically, be sure to review the following:

- users and groups attributed to the app

- single sign-on status

- owners

- permissions

- conditional access

Double check what the app has access to and what it can do on behalf of users to get an idea of its scope. Look for anomalies, such as an application that has been dormant suddenly sending emails or accessing files. This behavioral change can be the tip-off that a dormant app has been activated for a malicious purpose like sending phishing emails.
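
One way to look for that behavioral change is to check for long gaps in an app's activity timeline. The Python sketch below assumes you can pull a list of timestamps for a given app (for example, the consent time followed by each mail send); the `woke_after_dormancy` function name and the 30-day default are illustrative choices, not a recommendation from any vendor.

```python
from datetime import datetime, timedelta


def woke_after_dormancy(
    events: list[datetime],
    dormant_for: timedelta = timedelta(days=30),
) -> list[datetime]:
    """Return each event that follows a quiet period of at least `dormant_for`."""
    ordered = sorted(events)
    return [
        later
        for earlier, later in zip(ordered, ordered[1:])
        if later - earlier >= dormant_for
    ]


# Hypothetical timeline: consent on January 1, then mail sends starting 90 days later.
timeline = [
    datetime(2025, 1, 1),   # consent granted
    datetime(2025, 4, 1),   # first mail send after the quiet period
    datetime(2025, 4, 2),   # follow-up activity, not flagged
]
print(woke_after_dormancy(timeline))
```

Only the first event after the gap is returned, which gives you a starting point for reviewing what the app did next.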

Give extra scrutiny to artificial intelligence (AI) apps, many of which claim to help users write and send emails but often come with excessive and sometimes questionable permissions.

Strengthening your OAuth defenses

You can significantly harden your environment against these attacks by configuring your consent policies within Azure.

Disable user consent

The most effective defense is to block users from consenting to applications themselves. In the Entra ID portal, under Enterprise applications > Consent and permissions, you can configure user consent settings to “Do not allow user consent.”
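
If you manage this setting through automation rather than the portal, the same change can be expressed as an update to the tenant's authorization policy. The Python sketch below only builds the request body and sends nothing. It assumes, to the best of our understanding of Microsoft Graph, that user consent is controlled by `ManagePermissionGrantsForSelf.*` entries in `defaultUserRolePermissions.permissionGrantPoliciesAssigned` on the `authorizationPolicy` resource; verify that against current Microsoft documentation before relying on it. The helper names and the `example-policy` ID are hypothetical.

```python
import json

# Assumption: policies with this prefix are the ones that allow users to consent for themselves.
SELF_CONSENT_PREFIX = "managepermissiongrantsforself."


def without_user_consent(assigned: list[str]) -> list[str]:
    """Drop self-consent policies, keeping any other assigned policies untouched."""
    return [p for p in assigned if not p.lower().startswith(SELF_CONSENT_PREFIX)]


def patch_body(assigned: list[str]) -> str:
    """Build the JSON body for updating the authorization policy (nothing is sent)."""
    return json.dumps(
        {
            "defaultUserRolePermissions": {
                "permissionGrantPoliciesAssigned": without_user_consent(assigned)
            }
        }
    )


# Current assignments as you might read them from the tenant; the second ID is made up.
current = [
    "ManagePermissionGrantsForSelf.microsoft-user-default-legacy",
    "ManagePermissionGrantsForOwnedResource.example-policy",
]
print(patch_body(current))
```

Filtering the existing list, instead of overwriting it with an empty one, avoids removing unrelated policies by accident.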

Implement an admin consent workflow

To avoid disrupting business operations, enable the admin consent request workflow. This allows users to request access to a new application, which is then routed to administrators for review. This maintains security oversight while still allowing employees to access the tools they need.

Audit existing apps

Before locking down consent, audit all applications that have already been granted permissions in your environment. Revoke access for any unused, over-permissioned, or suspicious apps. Keep in mind that as an administrator, you are responsible for any data these third-party applications can access.

Hunt proactively

Use threat hunting queries, modified to fit your environment, to find potentially risky applications.

If you have Defender for Cloud Apps deployed and you operate in an environment where user consent is allowed, the following Defender query can help administrators sniff out malicious OAuth applications:

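    // Apps with fewer consenting users than this are treated as low-adoption
    let Minimum_Users = 10;
    OAuthAppInfo
    | where AppStatus == "Enabled"
    | where AppOrigin == "External"
    // Consented to by at least one user, but fewer than Minimum_Users
    | where ConsentedUsersCount > 0 and ConsentedUsersCount < Minimum_Users
    // Granted by individual users rather than by an administrator
    | where IsAdminConsented == 0
    // One output row per permission so each can be reviewed on its own
    | mv-expand Permissions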
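
If you would rather reason about the query's logic outside of Defender, the same filters can be sketched in Python over exported rows. This is a rough analogue, not a replacement: it assumes each row is a dict keyed by the column names used in the query, that `Permissions` is a flat list of permission names, and that an `AppName` field is present. The sample rows and app names are hypothetical.

```python
MINIMUM_USERS = 10


def risky_app_permissions(rows, minimum_users=MINIMUM_USERS):
    """Yield one row per permission for enabled, external, user-consented, low-adoption apps."""
    for row in rows:
        if (
            row["AppStatus"] == "Enabled"
            and row["AppOrigin"] == "External"
            and 0 < row["ConsentedUsersCount"] < minimum_users
            and not row["IsAdminConsented"]
        ):
            # Rough equivalent of mv-expand: repeat the row once per permission.
            for permission in row["Permissions"]:
                yield {**row, "Permissions": permission}


rows = [
    {"AppName": "hypothetical-ai-helper", "AppStatus": "Enabled", "AppOrigin": "External",
     "ConsentedUsersCount": 1, "IsAdminConsented": False,
     "Permissions": ["Mail.Read", "Mail.Send"]},
    {"AppName": "hypothetical-crm", "AppStatus": "Enabled", "AppOrigin": "External",
     "ConsentedUsersCount": 250, "IsAdminConsented": True,
     "Permissions": ["User.Read"]},
]

for hit in risky_app_permissions(rows):
    print(hit["AppName"], hit["Permissions"])
```

Here only the first app matches, because the second was consented to by an administrator and is used by many more than ten users.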

By combining policy enforcement, proactive auditing, and user education, you can get a start on mitigating the risk of OAuth application attacks and ensure that convenience doesn’t come at the cost of your security.