In recent posts, we’ve gone behind the scenes with our detection engineering team to explain how we use detectors to improve the quality and efficiency of our threat detection operations. In this post, we’ll cover the creation of a detector: from the idea’s conception, to research and testing, to the moment it “comes to life” and is delivered into production.

First, a quick refresher…

What Is a Detector?

In its simplest form, a detector at Red Canary is a small chunk of Ruby code containing Boolean logic. As our engine ingests process execution metadata from Carbon Black Response or CrowdStrike Falcon, the metadata is matched against the Boolean terms to determine whether specific events are interesting enough to bring to an analyst’s attention. This little bit of logic is a powerful piece of Red Canary because any analyst can submit code to extend our detection capabilities based on adversarial techniques they’ve observed firsthand or through research.

So How Does a Detector Come to Life?

For illustrative purposes, we’ll walk through this process by following the implementation of Detector #1236, also known as WIN-PRINT-WRITE-PE. We developed this detector to identify when a built-in Windows binary named print.exe was being used to execute ATT&CK Technique T1105 (Remote File Copy) in order to download a payload to an endpoint.

Let the detector creation begin!

It Starts with a Spark…

Each detector starts as an idea posted as a GitHub issue from our detection team, incident handlers, or our trouble-making applied research team. Some of our analysts specifically work with threat intelligence to learn about new behaviors, but we also crowdsource within the organization to ensure we don’t miss out on ideas. Analysts submit ideas based on adversarial behaviors they’ve seen while working events at Red Canary or at a previous job; incident handlers submit for things they’ve observed at customer environments; and researchers look for new behaviors we can detect before adversaries start exploiting them.

In addition, Atomic Red Team contributions can help drive forward detector ideas. Casey Smith and other Atomic Red Team researchers submit interesting tests we haven’t seen in the wild. A good example of this is the contribution of the macOS Input Prompt test, which helped us improve our detection capabilities on macOS systems.

Finally, some detector ideas come from an unfortunate source: a detection miss. While this is rare, missing a malicious event is bound to eventually happen when processing terabytes of process execution metadata to look for adversary behavior that can change on a daily basis. When this does happen, it’s up to us to take responsibility for the miss and ensure it won’t happen again. These ideas teach us valuable lessons and lead to greater detection quality overall.

In the case of Detector #1236, the idea was submitted by one of our detection engineers after reading a Tweet from Oddvarmoe. The Tweet discussed print.exe, a binary included with Windows by default, and its ability to download items in a way that could evade detection. And thus, a detector idea was born.

Validating a Detector Idea

Once an idea has been documented as a GitHub issue, we embark on an adventure to determine whether forming a detection strategy around the idea will bring value to the detection engineering team. We guide this decision by looking at the likelihood and complexity of the adversary’s tactic combined with the data we can obtain from endpoint detection and response (EDR) sensors. Simple detector ideas like identifying Microsoft Word spawning PowerShell are easy wins because the tactic is so common and because we can easily detect these occurrences with EDR data. Sometimes this decision is also guided by limitations of EDR data. A good example of one such decision involved the use of cross-process communication to snoop for credentials in Linux. Unfortunately, we couldn’t obtain data to show this tactic happening and we needed to axe the idea. Some detector ideas require a lot more research to see how much they are used in the wild.

Our research often involves setting up a test lab to simulate adversarial behavior in a controlled environment. While you can spend thousands of dollars on setting up a lab environment for this purpose, I prefer to keep things simple: VirtualBox is free and easy enough to use. Some of our engineers prefer VMware Workstation or other virtualization technologies as well. More complicated detector ideas can involve setting up specialized lab machines including Active Directory domain controllers, SQL databases, or web servers. One detection project we recently undertook involved setting up numerous web server products on Linux and Windows to test how web shell behaviors appear in EDR data. Other specialized tests might also call for CrowdStrike Falcon or Sysmon sensors to test how telemetry differs between products.

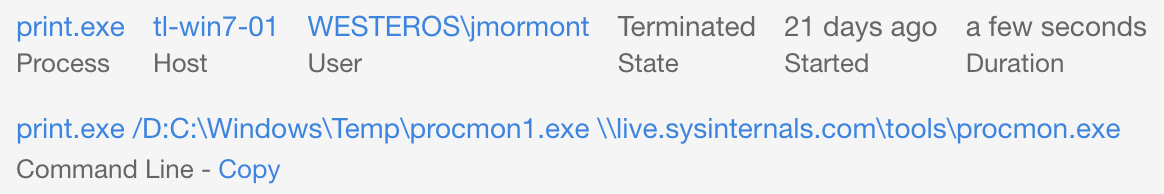

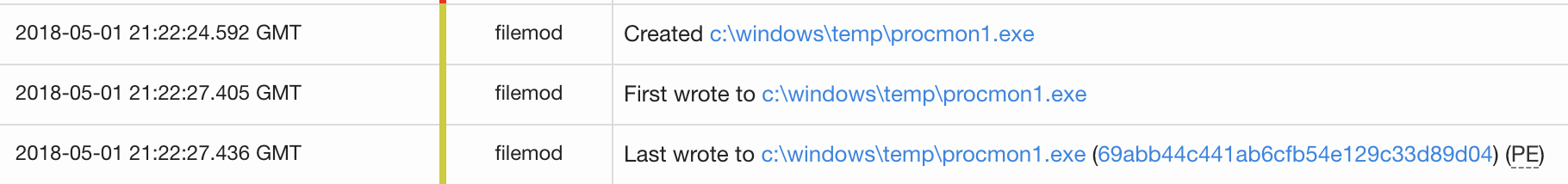

For this particular behavior of print.exe writing a binary to disk, we ran a simple lab experiment on a single Windows 7 machine with a Carbon Black (Cb) Response sensor. After reading through the original idea in the Tweet, we tested a similar command in the lab. Instead of setting up file shares to host files for download, we leveraged one of the tech world’s most famous WebDAV-enabled sites: live.sysinternals.com.

After print.exe executed, we dove into Cb Response telemetry to observe the artifacts left by the test. Interestingly, we observed a file write by print.exe but not a network connection. This is due to the nature of WebDAV downloads: Windows offloads their network connections to another service, so the connections are handled by a rundll32.exe process rather than by print.exe. In theory, a detection strategy for this tactic would work as long as it focused on file writes rather than network connections.

But Will It Scale?

You might be wondering why we focused on the delivery of binary files rather than others. After all, print.exe could potentially download any file presented in a WebDAV share.

The answer centers on the scaling of our detection systems. When we surveyed several customer environments, we discovered that print.exe was commonly used and that it often wrote temporary files to disk. If we only monitored a single customer (or if we were solely monitoring our own environment, like many organizations), we could probably tolerate the number of alerts produced by this process writing any file. But because Red Canary has scaled to monitor many different customers across numerous verticals, we discovered that a slightly wider net could cause thousands of false positive alerts that would send our analysts down unnecessary rabbit holes.

While we embrace false positives in an effort to catch evil, we also need to keep the false positive rate at a reasonable level to reduce alert fatigue among our detection analysts. Therefore, we hypothesized that print.exe delivering executable binaries would be more likely to be evil than print.exe writing other file types.

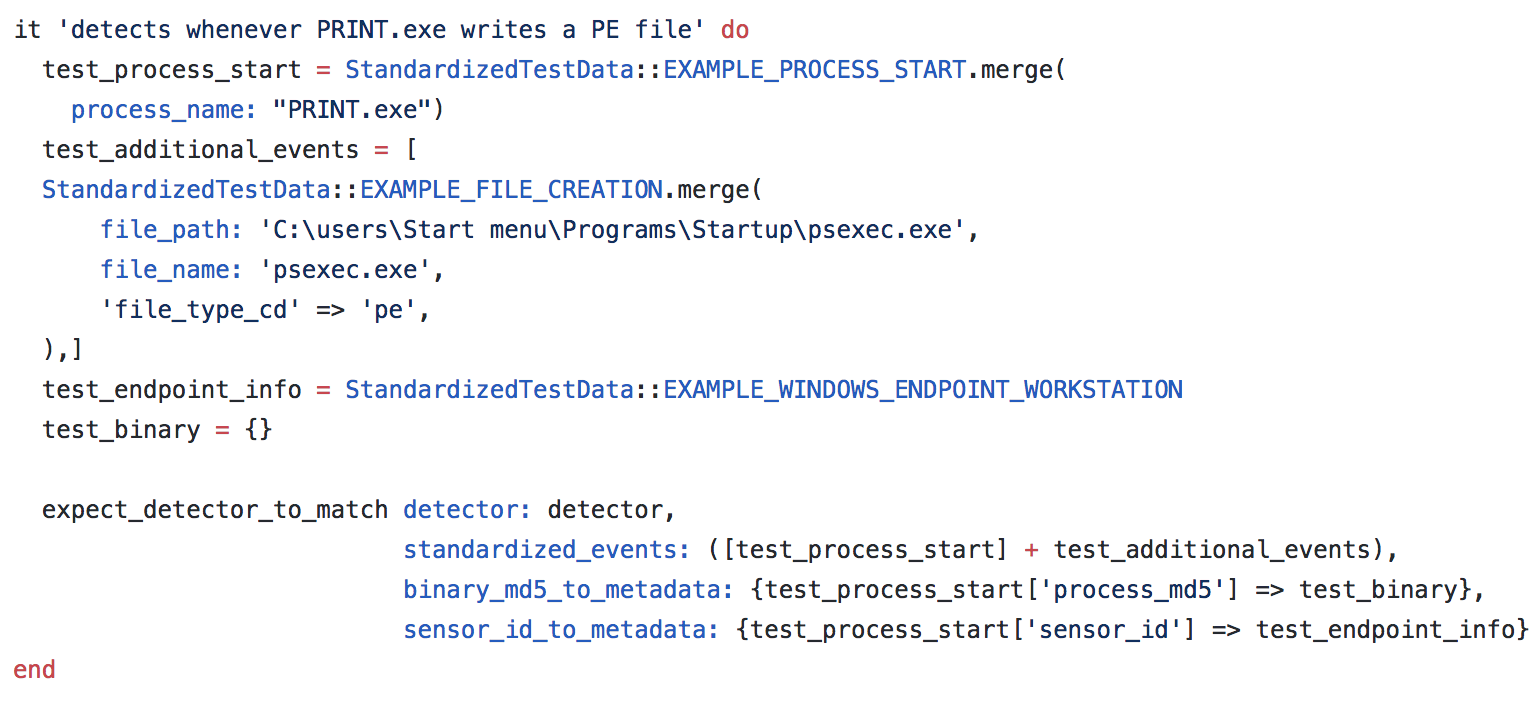

Test, Then Implement

Now that we’ve validated a detector idea and have a grasp of behaviors we deem interesting, we can start the process of writing a detector. In applying the concepts of engineering to detection, we start with creating a unit test for our final detector code to pass. We typically create conditions in each test to simulate true positive events as well as events we would expect to not trigger the detector.

In this case, our first set of conditions in the test looks for a true positive event: one where print.exe executed and wrote an executable file to disk. Our test code looked similar to this:

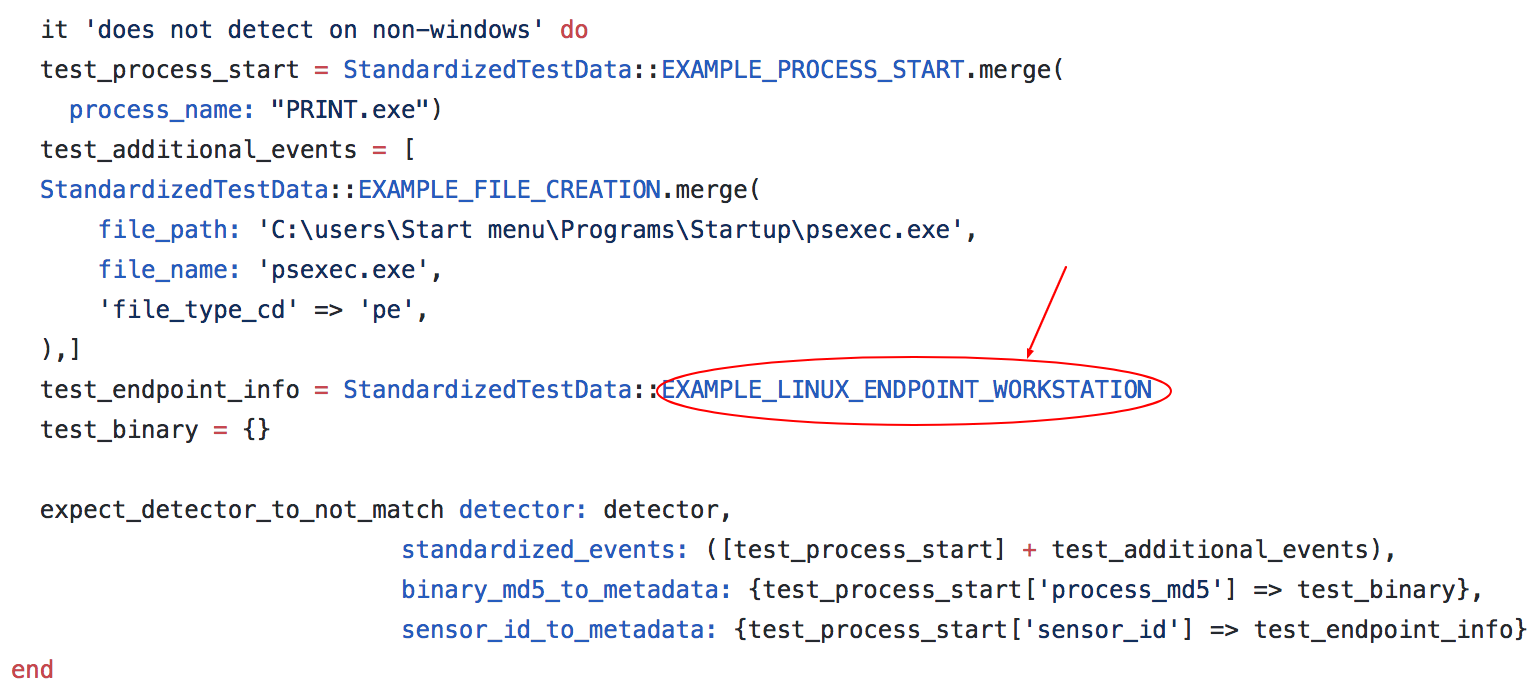

The second condition simulates an event where print.exe executed but had no executable file write. Any detector code written should not trigger on this condition. Finally, we included another condition to test whether an event on Linux would trigger the detector. As print.exe is a Windows binary, we only want to look for its execution on Windows. Any code written in a detector should not trigger on this condition either.
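The three conditions described above can be sketched in plain Ruby. This is not Red Canary’s actual test code: `SimulatedEvent`, its fields, and `failing_cases` are hypothetical names chosen for illustration, and the real engine’s event objects are not shown in this post. The sketch only shows the test-first idea, where the cases exist before any detector logic does.

```ruby
# Hypothetical event shape for illustration only; not the real engine's object.
SimulatedEvent = Struct.new(:platform, :process_name, :wrote_executable, keyword_init: true)

# Each case pairs a simulated event with whether the detector should fire.
CASES = {
  "print.exe writes an executable on Windows" =>
    [SimulatedEvent.new(platform: "windows", process_name: "print.exe", wrote_executable: true), true],
  "print.exe runs without an executable write" =>
    [SimulatedEvent.new(platform: "windows", process_name: "print.exe", wrote_executable: false), false],
  "same activity reported from Linux" =>
    [SimulatedEvent.new(platform: "linux", process_name: "print.exe", wrote_executable: true), false]
}.freeze

# Returns the names of the cases that a candidate detector (any callable) gets wrong.
def failing_cases(detector)
  CASES.reject { |_name, (event, expected)| detector.call(event) == expected }.keys
end

# Before real logic exists, a placeholder that never fires fails the true positive case.
placeholder = ->(_event) { false }
puts failing_cases(placeholder).inspect
```

A placeholder that never fires passes both negative cases but fails the true positive one, which is the signal that detector logic still needs to be written.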

Can We Write Code Now???

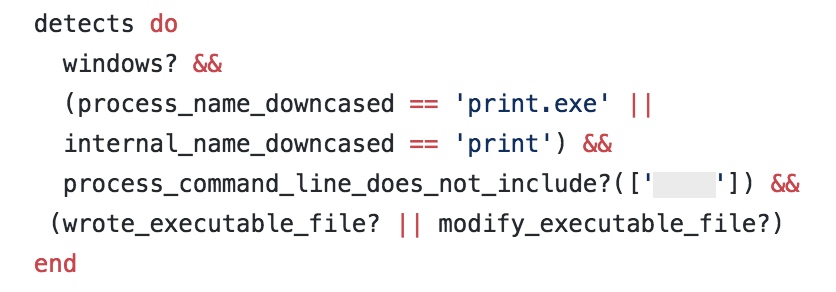

After investing time creating and validating a unit test, we can finally begin writing detector code! As mentioned in the earlier detection engineering post, we use a domain-specific language created in Ruby to represent pieces of data gathered from our partner EDR sensors and standardized into the Red Canary engine. There is a wide encyclopedia of terms we’ve created for detectors, but the ones we want to focus on here are:

- process_name_downcased

- internal_name_downcased

- wrote_executable_file?

- modified_executable_file?

We wrote this code for the detector to pass our pre-made test:

Walking through the code, we instruct the detector to look for events on Windows where a process or internal name is that of print.exe. Additionally, the detector looks for a file creation or modification of an executable file. We’ve learned through our experience with EDR sensors to look for both creation and modification of files, as Cb Response and CrowdStrike Falcon report file writes in different ways. Including both of these checks allows one detector to work for events from both sensors.

You may have also noticed an odd line of code: process_command_line_does_not_include. This line was included because another software package on Windows also includes a print.exe binary that is different from the one we wanted to identify. To exclude execution of the unwanted print.exe, we included this line of code. For any sharp-eyed penetration testers out there, we obscured the text in the image so we could talk about this code without giving advice for easy evasion!
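As a rough illustration of the logic just described, here is a minimal plain-Ruby sketch. It is not the actual detector or the real DSL: `ProcessEvent` is a hypothetical stand-in whose members borrow the term names listed earlier, the comparison of the internal name against "print.exe" is an assumption, and `EXCLUDED_COMMAND_LINE_TEXT` is an invented placeholder because the real exclusion string is deliberately withheld.

```ruby
# Hypothetical stand-in for an engine event; member names borrow the DSL terms
# from the post, but the real DSL objects are not shown there.
ProcessEvent = Struct.new(
  :platform, :process_name_downcased, :internal_name_downcased,
  :command_line, :wrote_executable_file, :modified_executable_file,
  keyword_init: true
) do
  def wrote_executable_file?
    wrote_executable_file == true
  end

  def modified_executable_file?
    modified_executable_file == true
  end

  # True when the command line lacks the given text (case-insensitive).
  def process_command_line_does_not_include(text)
    !command_line.to_s.downcase.include?(text.downcase)
  end
end

# Invented placeholder: the post does not reveal the real exclusion string.
EXCLUDED_COMMAND_LINE_TEXT = "placeholder-for-other-print-exe"

def win_print_write_pe?(event)
  return false unless event.platform == "windows"

  # Check both names so a renamed copy of the binary still matches (assumed values).
  named_print = [event.process_name_downcased, event.internal_name_downcased].include?("print.exe")
  # Sensors report file writes differently, so accept creation or modification.
  wrote_pe = event.wrote_executable_file? || event.modified_executable_file?

  named_print && wrote_pe &&
    event.process_command_line_does_not_include(EXCLUDED_COMMAND_LINE_TEXT)
end

renamed_copy = ProcessEvent.new(
  platform: "windows", process_name_downcased: "p.exe",
  internal_name_downcased: "print.exe", command_line: "p.exe <arguments>",
  modified_executable_file: true
)
puts win_print_write_pe?(renamed_copy)
```

Checking the internal name alongside the process name is what lets the sketch match the renamed copy in the usage example, and accepting either write predicate mirrors the point about sensors reporting file writes differently.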

Now that the detector code passed its unit test, we can move on to additional functionality surrounding the code. Each detector includes a public-facing description for customers and an internal notes section for our detection engineering team. The internal notes help the team learn about why a particular event was raised and how to investigate it. This helps us close the knowledge gap between the different team members while providing high-quality reports to customers.

It’s Alive!

We now have a functioning detector—so what’s next? We commit it into a version-controlled repository and request a peer review. Every detector at Red Canary is reviewed by at least one person other than its author. This process allows us to have reasonable checks and balances that prevent our team from making obvious coding mistakes or flooding the team with unnecessary events.

Once the detector passes peer review, it enters a tuning phase where we gather events triggered by the detector into Splunk to ensure it behaves as expected. After the initial tuning period, we make a decision to either move the detector out of tuning or revisit its code for adjustments. In some cases, like a detector for rundll32.exe application whitelisting attacks, we have to revisit the detector code and constantly tune it due to excessive false positives. In the case of Detector #1236, it fell below the reasonable false positive level and was pushed into production.
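The end-of-tuning decision can be pictured as a simple rate check. The sketch below is only an illustration under stated assumptions: the threshold value, the verdict labels, and the `tuning_decision` helper are all hypothetical, and the post does not say what rate Red Canary considers reasonable or how the decision is actually made.

```ruby
# Hypothetical threshold; the post does not state the actual acceptable rate.
MAX_FALSE_POSITIVE_RATE = 0.5

# verdicts: array of :true_positive / :false_positive symbols assigned by analysts
# while reviewing events raised during the tuning period (assumed labels).
def tuning_decision(verdicts, max_rate: MAX_FALSE_POSITIVE_RATE)
  return :keep_tuning if verdicts.empty? # nothing reviewed yet, so no basis to promote

  rate = verdicts.count(:false_positive).to_f / verdicts.size
  rate < max_rate ? :promote_to_production : :revisit_detector_code
end

puts tuning_decision([:true_positive, :true_positive, :false_positive])
```

With one false positive out of three reviewed events, the rate falls below the assumed threshold, so the sketch returns `:promote_to_production`.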

Improving the Future

Hopefully this post has given you good insight into the process we follow to extend our detection capabilities. I want to end with a call to arms. You (yes, YOU!) can help us improve detections, even if you’re not a Red Canary customer. We constantly consume articles and podcasts on adversarial techniques, so conduct research and publish your findings! If possible, submit tests to the Atomic Red Team on GitHub. Finally, if you’re a penetration tester or a red team for one of our customers, bring your A-game so we can learn and improve. Working together as a community, we can improve security for everyone.

