Red Canary has used artificial intelligence (AI) and machine learning (ML) for years at various stages of our detection engineering, alert enrichment, triage, and other security operations workflows. We’ve been experimenting with generative AI (GenAI) since early 2023, and we’re confident that, when applied discerningly, it will be more of a boon for defenders than for adversaries. In this blog post, I’ll discuss the foundations needed to realize security operations value from GenAI, a few ways we’re using GenAI to improve our security practitioners’ performance and job satisfaction, and where we’re going with the technology.

GenAI foundations: clear security goals, quality data, and experts in the loop

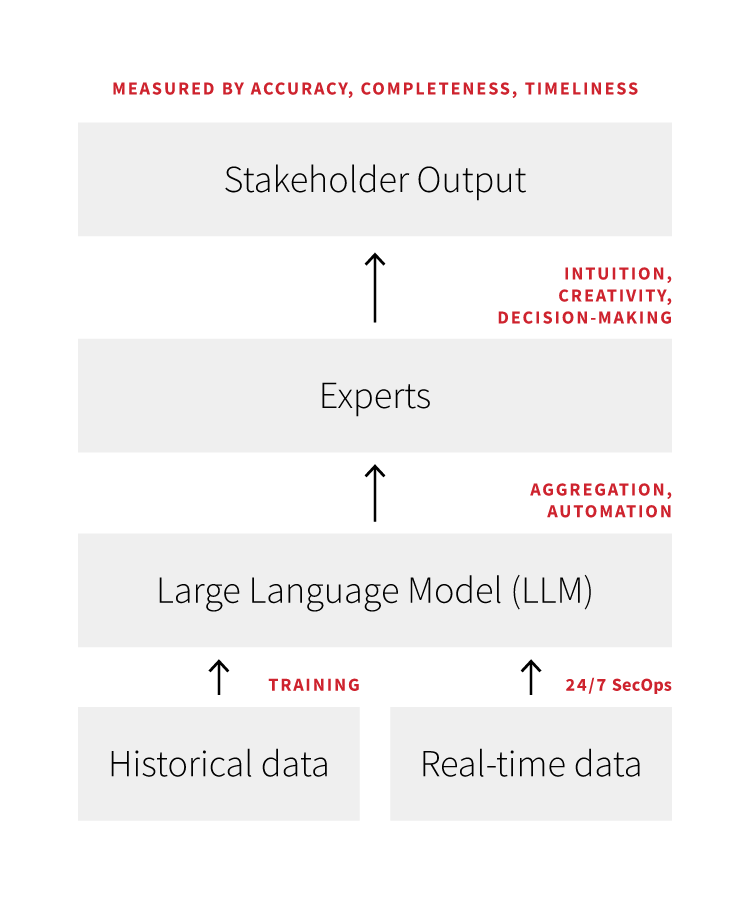

Our security operations center’s (SOC) primary goal is to provide accurate, complete, and timely threat information to customers so that we or they can remediate threats before they cause business impact. We continuously measure our threat accuracy, completeness, and timeliness (via internal telemetry and feedback we gather from customers through our platform) and work to improve all three over time. Because we’re clear about our goals, we know that any tool we introduce to our SOC must, at a minimum, not hamper our aggregate performance across those three variables, and that ideally it will yield measurable improvements to at least one of them.
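To make that decision rule concrete, here is a minimal Python sketch. The metric names, units, and the `evaluate_tool` function are hypothetical. The sketch reads "not hampering aggregate performance" strictly, as no regression on any single metric. A real evaluation would also need to account for noise between measurement periods.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SocMetrics:
    """Hypothetical aggregate SOC metrics for one evaluation period."""
    accuracy: float            # share of published threats confirmed as true positives
    completeness: float        # share of relevant context included in threat reports
    minutes_to_publish: float  # median time from alert to threat publication (lower is better)


def evaluate_tool(baseline: SocMetrics, candidate: SocMetrics) -> str:
    """Classify a candidate tool by comparing metrics without and with it.

    Returns "reject" if any metric regresses, "improves" if none regress and
    at least one gets better, and "neutral" otherwise.
    """
    regressed = (
        candidate.accuracy < baseline.accuracy
        or candidate.completeness < baseline.completeness
        or candidate.minutes_to_publish > baseline.minutes_to_publish
    )
    if regressed:
        return "reject"
    improved = (
        candidate.accuracy > baseline.accuracy
        or candidate.completeness > baseline.completeness
        or candidate.minutes_to_publish < baseline.minutes_to_publish
    )
    return "improves" if improved else "neutral"


baseline = SocMetrics(accuracy=0.99, completeness=0.90, minutes_to_publish=30.0)
with_agent = SocMetrics(accuracy=0.99, completeness=0.90, minutes_to_publish=20.0)
print(evaluate_tool(baseline, with_agent))  # prints "improves"
```

Returning three outcomes rather than a boolean mirrors the distinction above. A tool must not regress on any metric, and improving at least one is the ideal rather than a hard requirement.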

Feeding a high volume of trustworthy historical and real-time data to the large language models (LLMs) we use underpins our confidence in GenAI. In our case, over the past decade we’ve developed thousands of behavior-based detection analytics and mapped them to MITRE ATT&CK. We’ve recorded each analytic’s conversion rate to actual threats detected, along with those threats’ severity levels, and we’ve provided customers with response and remediation guidance for the threats we detected. We’ve also mapped hundreds of adversary and other threat profiles to relevant detection analytics where possible, and we update both our detection analytics and threat profiles continuously.

Training our LLMs on such a large volume of trusted historical data in turn gives us confidence that our use of GenAI will maintain or improve the 99+ percent threat accuracy standard we provide customers. Once trained on that historical data, our LLMs work with customer data in real time. We therefore consistently validate that our integration pipelines with customer security products are working properly, so that our LLMs engage with current and complete customer security information.
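The detection analytic records described above could be modeled along these lines: each analytic is mapped to ATT&CK techniques and tracks conversion rates and severities. This is a minimal sketch. The field names, severity labels, and example analytic name are hypothetical, and T1078.004 (Valid Accounts: Cloud Accounts) is used only as an illustrative technique ID.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass, field


@dataclass
class DetectionAnalytic:
    """Hypothetical record for one behavior-based detection analytic."""
    name: str
    attack_techniques: list[str]  # MITRE ATT&CK technique IDs
    alerts_fired: int = 0
    threats_confirmed: int = 0
    severities: Counter = field(default_factory=Counter)

    def record_outcome(self, is_threat: bool, severity: str | None = None) -> None:
        """Log one alert and, if it converted to a threat, that threat's severity."""
        self.alerts_fired += 1
        if is_threat:
            self.threats_confirmed += 1
            if severity is not None:
                self.severities[severity] += 1

    @property
    def conversion_rate(self) -> float:
        """Fraction of this analytic's alerts that became confirmed threats."""
        return self.threats_confirmed / self.alerts_fired if self.alerts_fired else 0.0


analytic = DetectionAnalytic("cloud_login_from_atypical_ip", ["T1078.004"])
analytic.record_outcome(is_threat=True, severity="high")
analytic.record_outcome(is_threat=False)
analytic.record_outcome(is_threat=False)
analytic.record_outcome(is_threat=True, severity="medium")
print(analytic.conversion_rate)  # prints 0.5
```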

Our security experts are the core of our security operations program; we provide LLM outputs to them as tools that make them better at their jobs. This is an evolution of the human-machine teaming approach Red Canary has historically employed, in which our SOC members write code and use technology to make themselves more efficient and consistent. The primary route our SOC takes to improve efficiency and consistency, and the primary way its members currently use GenAI, is automating boring and repetitive tasks.

Assign boring and repetitive tasks to GenAI agents, not people

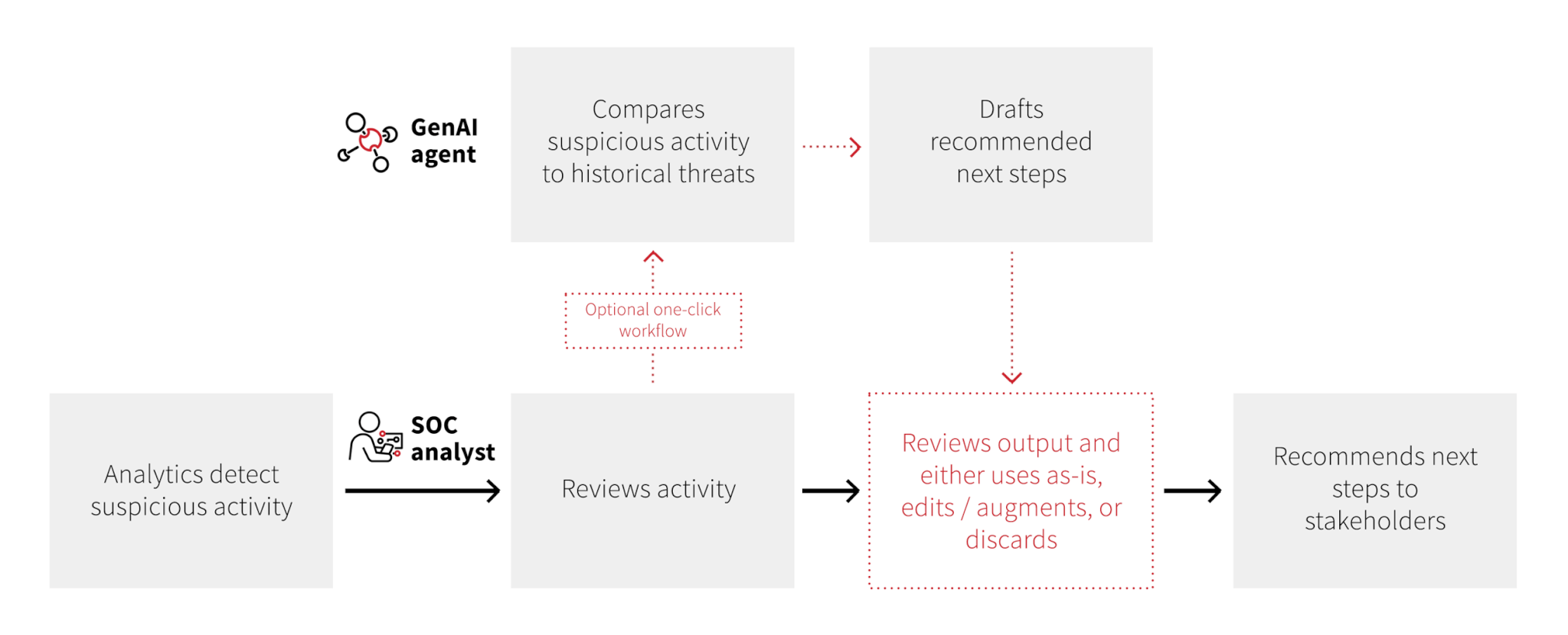

The fun part of working in our SOC is solving problems for our customers; spending lots of time doing repetitive manual tasks rains on the parade. Using GenAI agents to automate the repetitive tasks associated with detection, investigation, and response helps our SOC improve its timeliness without sacrificing accuracy or completeness.

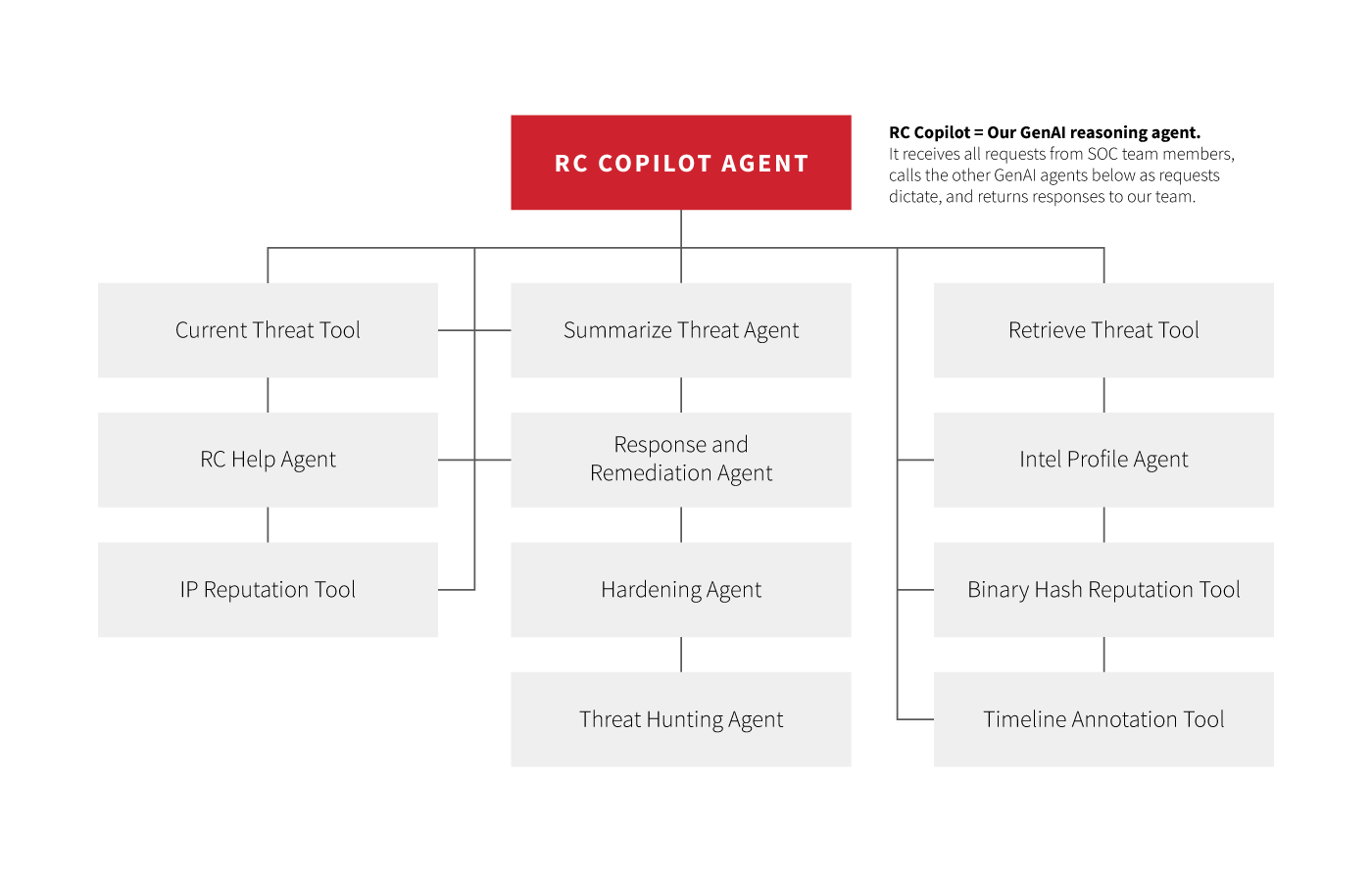

GenAI agents are essentially bots powered by LLMs. A GenAI agent receives a prompt, breaks complex tasks into sequenced subtasks, makes external API calls where needed to complete those subtasks, and then responds to the prompt. Qualitatively, GenAI agents help our experts get to the fun part (making a decision informed by security-relevant data) faster. They also help our experts spend a higher percentage of their day doing it.
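The agent pattern described above can be sketched as a short loop. This is a minimal, single-pass plan-then-execute version. It assumes the planning and response steps are LLM calls (stubbed here) and that each tool wraps an external API. Production agents typically loop instead, feeding each tool result back to the model before choosing the next step. All names, including `run_agent` and the tool names, are hypothetical.

```python
from __future__ import annotations

from typing import Callable

Tool = Callable[[str], str]
Planner = Callable[[str], list[tuple[str, str]]]


def run_agent(
    prompt: str,
    plan: Planner,
    tools: dict[str, Tool],
    respond: Callable[[str, list[str]], str],
) -> str:
    """Single-pass agent: plan subtasks, run each with a tool, then respond.

    `plan` and `respond` stand in for LLM calls; `tools` maps tool names to
    functions that would wrap external APIs.
    """
    observations = []
    for tool_name, tool_input in plan(prompt):
        tool = tools.get(tool_name)
        if tool is None:
            observations.append(f"{tool_name}: no such tool")
            continue
        observations.append(f"{tool_name}: {tool(tool_input)}")
    return respond(prompt, observations)


# Stubs in place of real LLM calls and external APIs (all hypothetical).
def stub_plan(prompt: str) -> list[tuple[str, str]]:
    return [("lookup_ip", "203.0.113.7"), ("find_user_hosts", "jbrown")]


stub_tools = {
    "lookup_ip": lambda ip: f"{ip} has no prior alerts",
    "find_user_hosts": lambda user: f"{user} active on 2 hosts",
}


def stub_respond(prompt: str, observations: list[str]) -> str:
    return "\n".join([prompt, *observations])


report = run_agent("Investigate alert 42", stub_plan, stub_tools, stub_respond)
print(report)
```

Keeping the planner, tools, and responder as plain callables makes each piece independently testable and replaceable, for example by swapping a stub for a real LLM client.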

When endpoint, cloud, identity, or other events trigger our detection analytics, our detection engineers investigate the suspicious information. Our goal is to have our platform automatically gather and present the full scope of relevant information to a detection engineer so that they don’t waste time gathering it themselves. GenAI agents are a step change for us in that specific use case. We have built an agent that, with one click, can do several things:

- parse JSON blobs and present the relevant parts in an easy-to-digest way
- enrich IP information with context from VirusTotal
- query our data lake or a customer’s Microsoft Sentinel instance for other affected endpoints or users
- and more

This one use case shortens the time from alert to disposition while removing mundane work from our detection engineers’ plates.
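The JSON-parsing and enrichment steps might look something like the minimal sketch below. The alert schema, field paths, action name, and `summarize_alert` are hypothetical. The IP lookup is passed in as a function so it can be swapped for a real service. VirusTotal, for example, exposes IP reports through its v3 API at `/api/v3/ip_addresses/{ip}`, but this sketch does not call it. Querying a data lake or Microsoft Sentinel is also left out.

```python
from __future__ import annotations

import json
from typing import Any, Callable

# Hypothetical dotted paths to the fields a detection engineer wants first.
RELEVANT_FIELDS = {
    "user": "actor.user_name",
    "source_ip": "network.source_ip",
    "action": "event.action",
    "resource_group": "target.resource_group",
}


def get_path(obj: Any, dotted: str) -> Any:
    """Walk nested dicts along a dotted path; return None if any key is missing."""
    for key in dotted.split("."):
        if not isinstance(obj, dict) or key not in obj:
            return None
        obj = obj[key]
    return obj


def summarize_alert(raw_json: str, enrich_ip: Callable[[str], dict[str, Any]]) -> dict[str, Any]:
    """Pull the relevant fields out of a raw alert and attach IP context."""
    event = json.loads(raw_json)
    summary = {label: get_path(event, path) for label, path in RELEVANT_FIELDS.items()}
    if summary["source_ip"]:
        summary["ip_context"] = enrich_ip(summary["source_ip"])
    return summary


raw = json.dumps({
    "actor": {"user_name": "jbrown"},
    "network": {"source_ip": "203.0.113.7"},
    "event": {"action": "UpdateNetworkSecurityRule"},
    "debug": {"trace": [1, 2, 3]},  # noise that the summary drops
})


def fake_enrich(ip: str) -> dict[str, Any]:
    # Stand-in for a threat intelligence lookup such as VirusTotal.
    return {"ip": ip, "malicious_votes": 0}


print(summarize_alert(raw, fake_enrich))
```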

Draft security recommendations with GenAI, validate and refine them with experts

As mentioned earlier, Red Canary has nearly 10 years’ worth of data on threats we’ve detected for customers: hundreds of thousands of threats, along with the corresponding response and remediation recommendations we provided. That gives us a large, trustworthy pool of data on which to train LLMs. From it, they can learn the response and remediation, threat hunting, and security hardening recommendations that Red Canary would likely provide to a customer, as well as the threat-specific context we would include.

Given this, we built one-click GenAI agents that can generate response and remediation, threat hunting, and overall hardening recommendations for a given threat that we detect. Our detection engineers and threat hunters have access to these agents; they can generate LLM outputs, refine them, further personalize them given a specific customer’s profile, or scrap them depending on how helpful the recommendations are.
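One common way to ground draft recommendations in historical data is to retrieve past threats with similar behaviors and include their recommendations in the prompt. The sketch below does this using Jaccard similarity over behavior labels. It is a swapped-in illustration rather than a description of Red Canary’s agents (the post describes training LLMs on historical data), and the final LLM call is omitted. All names and data are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class HistoricalThreat:
    """Hypothetical past threat with its observed behaviors and our guidance."""
    threat_id: str
    behaviors: frozenset[str]
    recommendations: str


def jaccard(a: frozenset[str], b: frozenset[str]) -> float:
    """Size of the intersection over size of the union (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def most_similar(current: frozenset[str], history: list[HistoricalThreat], k: int = 2) -> list[HistoricalThreat]:
    """Return up to k past threats sharing at least one behavior, most similar first."""
    scored = sorted(((jaccard(current, t.behaviors), t) for t in history),
                    key=lambda pair: pair[0], reverse=True)
    return [t for score, t in scored[:k] if score > 0]


def build_prompt(current: frozenset[str], examples: list[HistoricalThreat]) -> str:
    """Assemble a draft-recommendation prompt from current behaviors and past examples."""
    lines = ["Draft response and remediation recommendations for a threat with these behaviors:"]
    lines += [f"- {b}" for b in sorted(current)]
    for ex in examples:
        lines.append(f"\nPast threat {ex.threat_id} recommendations:\n{ex.recommendations}")
    return "\n".join(lines)


current = frozenset({"login from atypical IP", "permissive network rule change", "external user invited"})
history = [
    HistoricalThreat("H1", frozenset({"login from atypical IP", "external user invited"}),
                     "Reset credentials; remove external guests."),
    HistoricalThreat("H2", frozenset({"ransomware note dropped"}), "Isolate host."),
    HistoricalThreat("H3", frozenset({"login from atypical IP"}), "Reset credentials."),
]
examples = most_similar(current, history)
prompt = build_prompt(current, examples)
print(prompt)
```

Here H1 shares two of three behaviors (score 2/3) and H3 shares one (score 1/3), so both are retrieved. H2 shares none and is excluded.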

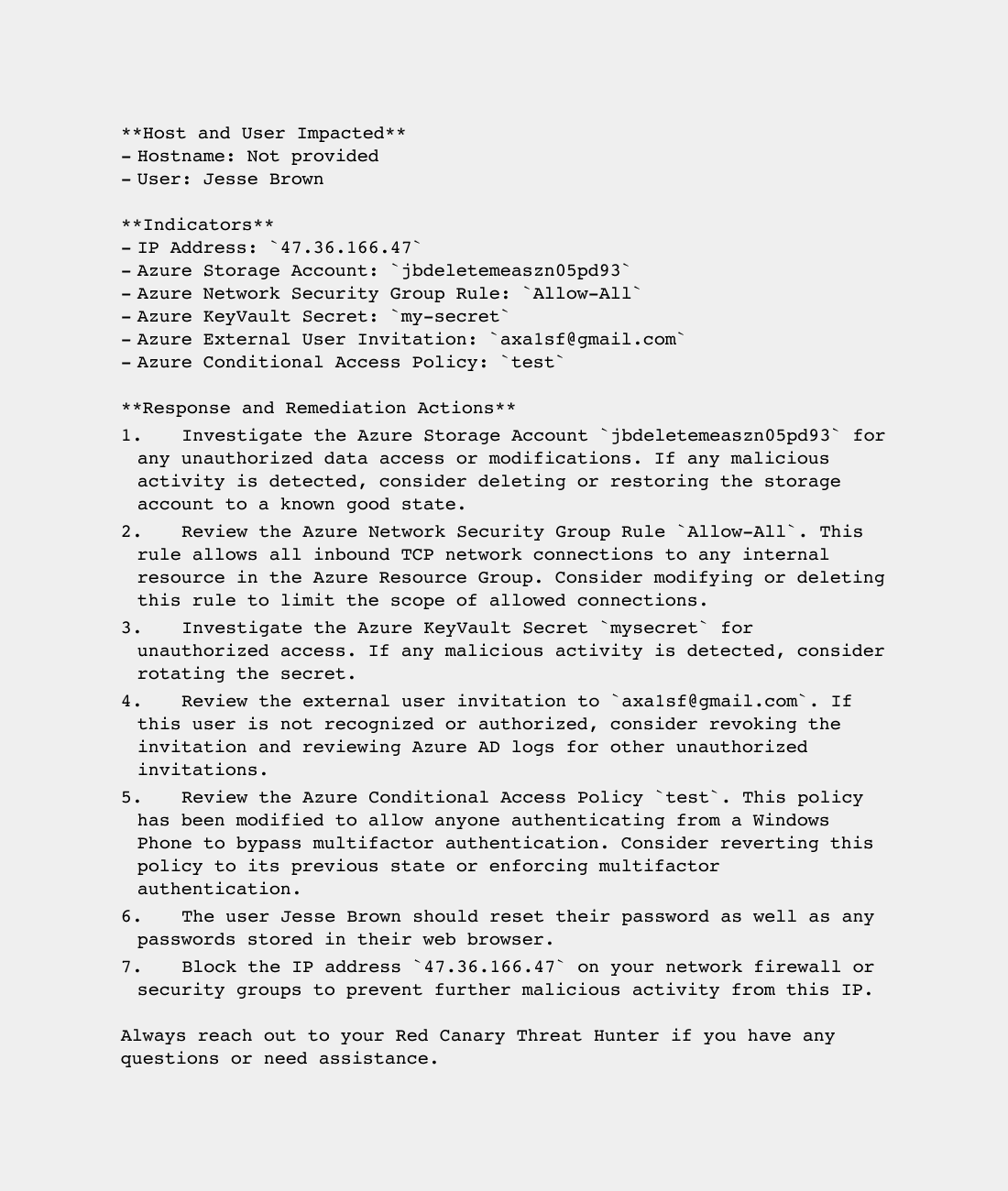

Here’s an illustrative example of how the process works. Let’s say Red Canary’s detection analytics capture behavior associated with cloud account compromise: specifically, a user named Jesse Brown logging in from an atypical IP address, making network security and authentication rules more permissive, and inviting external users to a cloud resource group.

Suspicious activity contributing to threat designation

We’ve seen many of the same TTPs that comprise this threat across the thousands of threats Red Canary has successfully detected, investigated, and responded to in the past, and those threats have helped train our LLMs. Knowing this, the detection engineer working this threat can use our GenAI agent to create a draft set of response and remediation recommendations for the customer:

Initial LLM output of response and remediation recommendations

Our detection engineer reviews this output: it’s very helpful but not perfect. Some of the recommendations are too narrow. For example, the customer should review the associated Azure Storage Account for any suspicious activity, not just unauthorized access. Others are insufficiently prescriptive: the customer should not ‘consider reverting’ the policy that allows Windows phones to bypass MFA; they need to revert it.

Ultimately, after reviewing, revising, and adding to the LLM output, our detection engineer finalizes their guidance and conveys it to our customer via a dedicated threat page and timeline in our portal. The detection engineer can also quickly rate the accuracy, clarity, and completeness of the LLM output in a survey, and that feedback feeds directly back into our model to improve its output over time.

Final response and remediation recommendations provided to customer

This output is informed by years’ worth of Red Canary data and removes a significant amount of tedious work from our detection engineer’s plate. The result for our customer is business-critical response and remediation guidance delivered much faster than it would be without GenAI in the process.
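This section mentions a feedback survey on accuracy, clarity, and completeness. The post doesn’t detail how that feedback reaches the model, but a plausible first step is to average ratings per dimension to see where drafts fall short. In this minimal sketch, the 1-5 scale, the 3.5 threshold, and all names are hypothetical. How low-scoring dimensions then inform prompts or training data is out of scope.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean

DIMENSIONS = ("accuracy", "clarity", "completeness")


@dataclass
class SurveyResponse:
    """One detection engineer's rating of one LLM output (hypothetical 1-5 scale)."""
    output_id: str
    scores: dict[str, int]


def average_scores(responses: list[SurveyResponse]) -> dict[str, float | None]:
    """Mean rating per dimension, or None if nobody rated that dimension."""
    averages: dict[str, float | None] = {}
    for dim in DIMENSIONS:
        values = [r.scores[dim] for r in responses if dim in r.scores]
        averages[dim] = mean(values) if values else None
    return averages


def weak_dimensions(averages: dict[str, float | None], threshold: float = 3.5) -> list[str]:
    """Dimensions whose mean rating falls below the threshold."""
    return [dim for dim, avg in averages.items() if avg is not None and avg < threshold]


responses = [
    SurveyResponse("draft-1", {"accuracy": 4, "clarity": 5, "completeness": 2}),
    SurveyResponse("draft-2", {"accuracy": 5, "clarity": 4, "completeness": 3}),
]
averages = average_scores(responses)
print(weak_dimensions(averages))  # prints ['completeness']
```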

Use GenAI to make your team more productive and happier

We’ve only scratched the surface of operationalizing GenAI as part of our security workflows. We’re confident that further use cases will emerge that help us improve the accuracy, completeness, and timeliness of the information we deliver to customers while also improving our SOC members’ on-the-job experiences. Additionally, we’re pursuing opportunities to expose GenAI capabilities to customers in our platform and to integrate with GenAI products our customers have already procured, e.g., Microsoft Copilot for Security. Doing so will not only improve the quality of security we provide but will also help educate our customers and give them an even more tailored, white-glove Red Canary experience.

If you’d like to learn more, I’ll be presenting our approach to using GenAI in more detail on this webinar in a few weeks. I hope to see you there.