Red Canary is primarily in the business of threat detection and response, and while we’ve written a whole lot about detection, we haven’t written as much about response. This makes perfect sense if you think holistically about what Red Canary does. Very simply put, we gather telemetry from endpoint detection and response (EDR) sensors and run it through an engine that uses behavioral analytics to parse the data and raise potentially malicious events to a team of detection engineers. When they aren’t writing detection rules, the detection engineers analyze these events to confirm malice.

Once a threat is confirmed and the detection is sent to a customer, that customer can use our product to enforce certain response actions (isolate an endpoint, ban a hash, etc.) either manually or automatically. That amounts to a lot of detection with a little response at the end, and it overlooks one of Red Canary’s most valuable assets: our incident handling team.

This blog is the first in a new series we’re calling Uncompromised, focusing on the stories of our incident handling team.

What is incident handling?

Oftentimes, when our Cyber Incident Response Team (CIRT) detects something really evil in a customer environment, the customer’s assigned (or on-call) incident handler will help initiate incident response plans, scoping and mitigating as necessary. However, our incident handlers aren’t merely reactive, and—unlike the Red Canary platform, which primarily analyzes EDR telemetry—the incident handling team continually works with customers to ensure that they’re getting the most out of their entire security stack.

If a customer asks, our incident handlers will examine alerts from other products, trying to validate maliciousness or determine if our own detections can provide corroboration. In the event of a significant incident, we assist by managing the incident, helping scope the compromise, and recommending remediation steps, whether we detected it or not.

We also provide tooling and configuration recommendations, customer references, and a wide variety of other technical guidance.

The outbreak

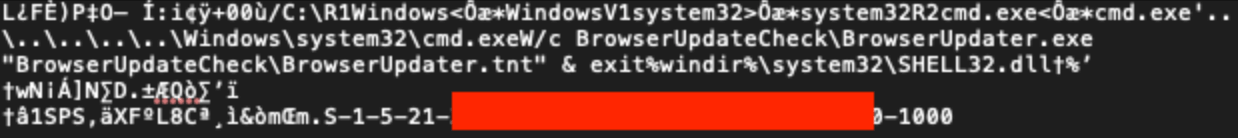

A few months back, a rogue version of AutoIT started working its way through a customer network. While it’s impossible for us to say for certain given visibility challenges in this particular environment, our best guess is that the initial infection vector was a USB device. Fortunately, the adversary never successfully delivered a payload. However, we did observe a connection to a command and control (C2) server.

In a reversal from the norm, it was the customer who informed us of this incident. We’ve since tuned some of our detection logic, and we should be able to detect similar activity moving forward. However, in this case, detection was complicated by a confluence of factors that included imperfect coverage on our end and a geographically distributed network on theirs—one where tooling hadn’t been uniformly provisioned across the entire environment.

Spotty EDR visibility also meant we couldn’t be 100 percent sure about how propagation unfolded, but we found references to LNK files that may have used AutoIT to worm around the network.

The containment

Once the customer had determined the scope of the incident, we worked with them to blacklist the hashes associated with the malicious version of AutoIT in their Carbon Black Response instance and in their Meraki firewall. We also worked with them to block all connections to the C2 domains in their Palo Alto and Meraki firewalls.
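Hash bans and domain blocks both come down to exact matching against a list of indicators. The Python sketch below illustrates that matching logic only; it is not how Carbon Black Response or the Palo Alto and Meraki firewalls implement blocking, and the `BANNED_SHA256` and `BLOCKED_DOMAINS` values are hypothetical placeholders, not indicators from this incident.

```python
import hashlib
from pathlib import Path

# Hypothetical placeholders; the real AutoIT hashes and C2 domains from this
# incident are not published in the post.
BANNED_SHA256 = {"0" * 64}
BLOCKED_DOMAINS = {"c2.example.invalid"}


def sha256_of(path: Path) -> str:
    """Hash a file in 64 KiB chunks so large binaries never sit fully in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_banned_file(path: Path) -> bool:
    # Exact match: any change to the file's bytes yields a different hash.
    return sha256_of(path) in BANNED_SHA256


def is_blocked_domain(hostname: str) -> bool:
    """Match a blocked domain exactly, or any subdomain of it."""
    host = hostname.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in BLOCKED_DOMAINS)
```

Because a SHA-256 hash identifies exact file contents, changing even one byte of an executable produces a new hash. That is one reason hash bans work best alongside behavioral detection rather than in place of it.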

The remediation

Once we were certain that the threat had been contained, we worked with the customer to create a PowerShell script to remove the LNK files from the environment. We also advised that they install antivirus on their network-attached storage devices, worked with them to ensure that Carbon Black sensors were installed and passing telemetry to Red Canary, and used their Meraki firewalls to examine the threat’s initial attempts to beacon out to the C2 domain. In the end, it wasn’t clear why their antivirus software hadn’t prevented the malicious versions of AutoIT from executing in the first place.
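The customer’s actual PowerShell script isn’t shared here. As a rough illustration of the same idea, the Python sketch below walks a directory tree, flags `.lnk` files whose raw bytes mention a hypothetical indicator string (`autoit`), and deletes them only when `dry_run` is turned off. Matching on raw bytes is an assumed heuristic; a production cleanup script would parse the shell link format properly and match on the link target.

```python
from pathlib import Path

# Hypothetical indicator strings; the post does not say how the customer's
# script identified the malicious LNK files.
INDICATORS = ("autoit",)


def lnk_mentions_indicator(path: Path, indicators=INDICATORS) -> bool:
    """Heuristic: search the raw .lnk bytes for indicator strings.

    Shell link files may store strings as ANSI or UTF-16LE, so both
    encodings are checked. bytes.lower() only folds ASCII letters.
    """
    data = path.read_bytes().lower()
    for word in indicators:
        w = word.lower()
        if w.encode("ascii") in data or w.encode("utf-16-le") in data:
            return True
    return False


def find_suspicious_lnks(root: Path) -> list[Path]:
    """Recursively collect .lnk files (any case) that match an indicator."""
    return [
        p for p in root.rglob("*")
        if p.is_file() and p.suffix.lower() == ".lnk" and lnk_mentions_indicator(p)
    ]


def remove_lnks(root: Path, dry_run: bool = True) -> list[Path]:
    """Report matches, and delete them only when dry_run is False."""
    hits = find_suspicious_lnks(root)
    for p in hits:
        if dry_run:
            print(f"would remove {p}")
        else:
            p.unlink()
    return hits
```

Defaulting to a dry run lets an administrator review the matches on a share before anything is deleted.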

Moving forward, the customer should be able to observe, and potentially block or detect, this and similar behaviors. For one, their firewalls are now tuned to detect incoming AutoIT executables. Further, they improved Carbon Black sensor deployment across their environment. Prior to this, an existing antivirus solution had only sporadic visibility into what was happening on USB devices, and it struggled to block banned hashes. Better Carbon Black coverage means they now have reliable visibility into file modifications on removable media and into executables propagating across their network, and banned hashes are consistently blocked. We also worked with the customer to explicitly distrust the certificate authority that had signed the malicious AutoIT executable that caused these issues in the first place.

On our end, we added one new detector that alerts our CIRT when AutoIT makes a network connection and another that triggers when AutoIT creates an unusually high number of LNK files, a common behavior of AutoIT worms. We also modified some existing detection logic to better identify renamed versions of AutoIT.
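Red Canary’s detectors aren’t public, so the following is only a hedged sketch of the three behaviors described above, written against a hypothetical event schema (`Event`, with `kind` values `netconn` and `filecreate`). It assumes the sensor reports each process’s PE version-info original filename, and the threshold of 10 LNK files is an arbitrary illustrative value, since the post only says "unusually high."

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical threshold; the post only says "unusually high".
LNK_THRESHOLD = 10


@dataclass
class Event:
    """Hypothetical, simplified EDR event; real sensor schemas differ."""
    process_id: int
    process_name: str       # image name on disk, e.g. "svchost.exe"
    original_filename: str  # PE version-info name, assumed to be reported
    kind: str               # "netconn" or "filecreate" in this sketch
    target: str = ""        # remote host or created file path


def _looks_like_autoit(name: str) -> bool:
    # Covers AutoIt3.exe and AutoIt3_x64.exe style names.
    return name.lower().startswith("autoit3")


def detect(events, lnk_threshold=LNK_THRESHOLD):
    """Return a set of (rule_name, process_id) alerts."""
    alerts = set()
    lnk_counts = Counter()
    for e in events:
        by_metadata = _looks_like_autoit(e.original_filename)
        by_name = _looks_like_autoit(e.process_name)
        if not (by_metadata or by_name):
            continue
        # Renamed AutoIT: metadata says AutoIT, but the on-disk name does not.
        if by_metadata and not by_name:
            alerts.add(("renamed_autoit", e.process_id))
        # Any network connection from AutoIT is worth a look.
        if e.kind == "netconn":
            alerts.add(("autoit_network_connection", e.process_id))
        # Count LNK files created per process; alert once past the threshold.
        elif e.kind == "filecreate" and e.target.lower().endswith(".lnk"):
            lnk_counts[e.process_id] += 1
            if lnk_counts[e.process_id] > lnk_threshold:
                alerts.add(("autoit_mass_lnk_creation", e.process_id))
    return alerts
```

Keying the rename check on the original filename rather than the on-disk name is what lets this sketch catch a copy of AutoIT renamed to something innocuous such as `svchost.exe`.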

The takeaway

We were never able to determine the ultimate goal of the attack, but open source research revealed that Trend Micro and ClearSky had previously linked the same C2 domain to attacks that leveraged an AutoIT worm called “Retadup” to target Israeli hospitals with an information-stealing trojan.

Regardless of the purpose or intent of the attack, this incident highlights some fundamentals that shouldn’t go overlooked:

- Security teams should try to make sure their tooling is consistently provisioned across their entire environment.

- Logs should be recorded in a centralized location.

- Administrators should be able to reliably configure remote systems.

More specifically, security teams will want to ensure that they have the visibility required to observe and detect threats on their organizations’ endpoints.

When all was said and done, the incident didn’t seem to have caused any lasting damage. It also presented an opportunity for Red Canary to improve our threat detection capabilities and for the customer to shore up their security architecture.