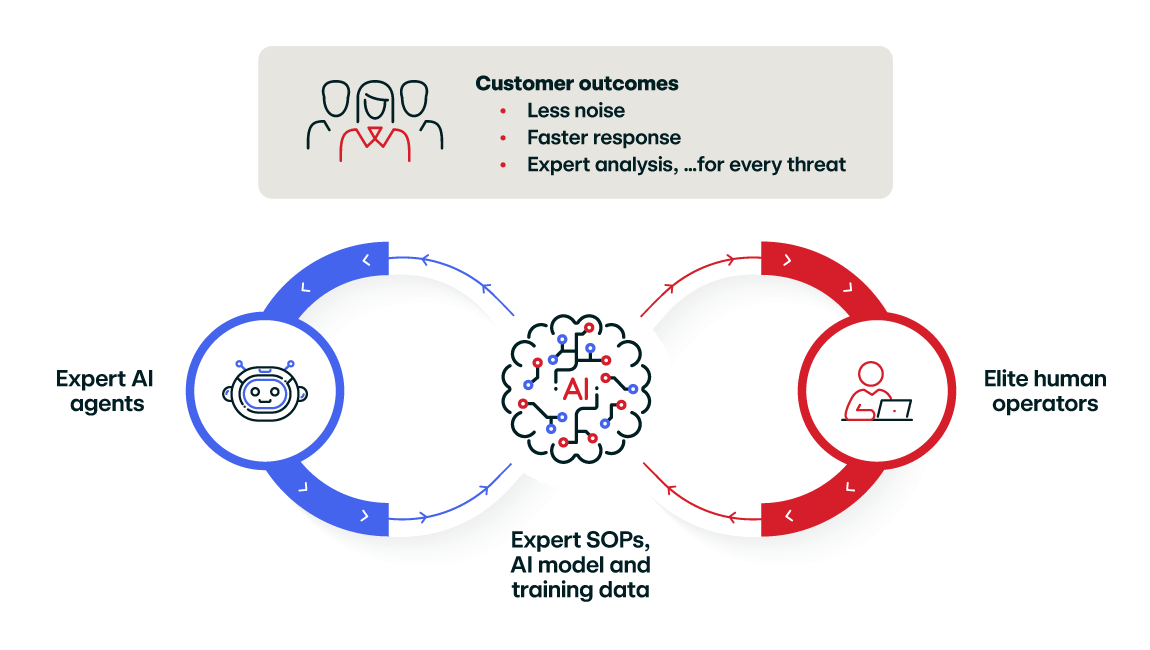

Today Red Canary unveiled a suite of expert-supervised AI agents, bringing a powerful new layer of automation to our threat detection, investigation, and response capabilities.

While every company under the sun is pushing some kind of artificial intelligence (AI) at the moment, this release is the result of years of experimentation and validation with various large language models (LLMs). Instead of chasing the hype, we’ve leveraged a long-standing foundation of unparalleled data, disciplined engineering, and deep human expertise to thoughtfully integrate these powerful new technologies.

Since implementing these AI agents, customers have seen investigation times drop from over 20 minutes to under 3 minutes, and we’ve maintained our unmatched 99.6% true positive rate.

Here’s how we got from tinkering around to leveraging AI agents in more than 2.5 million investigations, and counting.

How we got here: It all starts with data

Beginning in late 2022, the surge of interest in generative AI (GenAI), particularly LLM-based tools like ChatGPT, created an unprecedented wave of excitement and, often, hype across industries. Many organizations rushed to adopt these powerful new tools, driven by the promise of transformation. However, implementing GenAI effectively, especially within complex and high-stakes domains like cybersecurity, often proves far more challenging than the initial buzz suggests. Practical, valuable integration requires more than just access to the latest model; it demands a solid foundation.

When data scientists and AI engineers join Red Canary, they often express initial surprise. While we’re known as a leading managed detection and response (MDR) provider, the company’s core reveals something deeper: an operation fundamentally architected around a massive, highly sophisticated data processing engine. This realization shifts the perspective from simply providing a security service to understanding Red Canary as a data-centric organization.

This inherent structure is key to understanding Red Canary’s journey with GenAI. Our ability to navigate the recent AI boom wasn’t merely opportunistic or reactive. Instead, it stemmed from deliberate, long-term investments in foundational pillars: the collection and curation of high-quality, domain-specific data; the establishment of rigorous, disciplined engineering practices; and a culture that deeply values and integrates human expertise.

These elements created a unique foundation, allowing Red Canary to approach GenAI pragmatically. Red Canary has focused on integrating these technologies thoughtfully where they deliver tangible value rather than chasing trends, charting a course distinct from the mainstream rush and building on its inherent strengths.

Red Canary’s early AI/ML efforts (before the boom)

The data collected by Red Canary has always been rich and meticulously labeled by human experts, but it presented a challenge for direct AI consumption. The data structures, formats, and associated tooling were primarily optimized for human analysts conducting investigations (which is completely logical, as that’s who was consuming the data up until then). Making this data suitable for machine learning models, particularly at scale, required a significant and deliberate data engineering effort.

Internal teams worked extensively to transform, restructure, and prepare the data for AI and ML applications. This involved building new data pipelines, developing sophisticated feature engineering processes tailored to security telemetry, and ensuring the infrastructure could handle the scale required for training and inference.

This foundational data engineering was a non-trivial prerequisite, representing substantial investment in making the raw potential of Red Canary’s data accessible to AI systems. This highlights a crucial, often underestimated step: even high-quality data requires dedicated engineering to become truly AI-ready.

Pre-ChatGPT explorations (2022 & earlier)

Red Canary’s AI team was actively exploring concepts related to generative AI before the technology captured mainstream attention in late 2022. Our Senior Director of AI Platform and Data Science, Jimmy Astle, walked through Red Canary’s early AI exploration on EM360’s The Next Phase of Cybersecurity podcast. This proactive engagement demonstrated a forward-looking approach to leveraging AI for security.

Specific initiatives during this period included:

- experimenting with training language models from scratch

- fine-tuning existing transformer-based models like GPT-2 and the BERT family

- developing custom multi-modal neural network architectures for specific security tasks

- creating embedding models to represent security entities and behaviors numerically for downstream applications

While these earlier models may have had limitations compared to later iterations, working with them provided invaluable hands-on experience and gave our data science team a head start in understanding the tooling surrounding these models before they were “cool.”

Right place, right time

The combination of AI-ready data pipelines and the practical experience gained from these early AI initiatives meant Red Canary was exceptionally well-positioned when more powerful models like GPT-3 became accessible through easy cloud integrations such as Azure’s OpenAI Service. The team wasn’t starting from scratch: they possessed prepared data assets, a codebase incorporating ML workflows, and, importantly, the internal expertise built through experimenting with earlier language models.

This accumulated knowledge encompassed data preparation for NLP tasks, model training and fine-tuning techniques, evaluation methodologies, and an understanding of the nuances and limitations of language models. Consequently, when OpenAI and others began offering advanced LLM capabilities, the Red Canary team was ready to engage immediately, rapidly prototyping and integrating these new tools into their research and development efforts.

The groundwork ensured that both the data and the team were prepared to leverage the next wave of AI innovation.

What we learned from those early AI experiments

With a solid foundation and early experience, Red Canary embarked on a pragmatic exploration of generative AI’s potential within its cybersecurity context. This journey involved experimentation, learning from both successes and failures, and adapting the approach based on real-world performance, cost, and scalability constraints.

Initial explorations focused on seemingly obvious applications, yielding important lessons:

LLMs for classification

Early attempts involved using LLMs for various data classification tasks. However, this approach proved unreliable in practice. The models exhibited extreme sensitivity to subtle variations in input wording, leading to inconsistent classifications even for semantically identical inputs.

This finding aligns with broader research highlighting LLM sensitivity to prompt phrasing and structure. The unreliability made this approach unsuitable for critical security decisions, a conclusion shared by some of Red Canary’s external partners facing similar challenges.
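One way to make this kind of prompt sensitivity measurable is to feed a classifier several paraphrases of the same event and check how often the labels agree. The sketch below is illustrative only: `agreement_rate`, `toy_classifier`, and the sample paraphrases are hypothetical, and the keyword-based classifier merely stands in for an LLM call. It is not a description of Red Canary’s evaluation tooling.

```python
from collections import Counter
from typing import Callable, Iterable


def agreement_rate(classify: Callable[[str], str], paraphrases: Iterable[str]) -> float:
    """Return the fraction of paraphrases that receive the most common label."""
    labels = [classify(text) for text in paraphrases]
    if not labels:
        raise ValueError("need at least one paraphrase")
    most_common_count = Counter(labels).most_common(1)[0][1]
    return most_common_count / len(labels)


def toy_classifier(text: str) -> str:
    """Hypothetical stand-in for an LLM call: a deliberately brittle keyword match."""
    return "suspicious" if "powershell" in text.lower() else "benign"


# Three wordings of the same underlying behavior (made-up examples).
paraphrases = [
    "PowerShell launched with an encoded command",
    "An encoded command was run via powershell.exe",
    "pwsh executed a base64-encoded script",
]

rate = agreement_rate(toy_classifier, paraphrases)
print(f"agreement across paraphrases: {rate:.2f}")
```

A rate well below 1.0 on semantically identical inputs is the kind of signal that would argue against using the classifier for critical decisions.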

Chatbots

The team also explored chatbot interfaces, a popular application of LLMs. While potentially useful, significant challenges emerged, particularly for customer-facing security applications in 2023. Managing conversation context effectively, implementing reliable retrieval-augmented generation (RAG) for grounding responses in specific data, ensuring consistent memory across interactions, and, most critically, mitigating the risk of hallucinations (generating plausible but incorrect information) and inconsistencies proved difficult.

These limitations made chatbots a risky proposition for external use cases demanding high accuracy and reliability. However, chatbot interfaces found utility in various internal tools, where the context is more controlled and the tolerance for occasional errors may be different.

AI agents

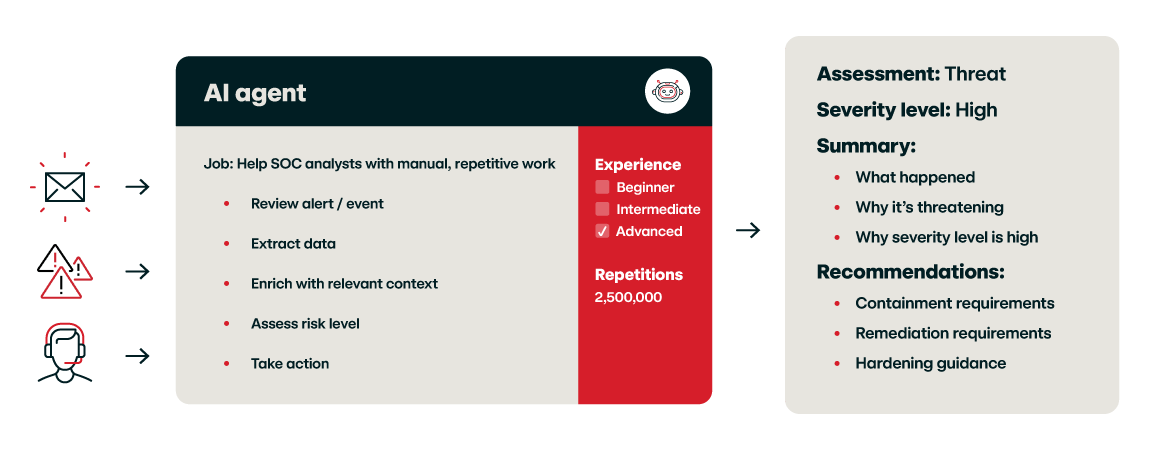

Red Canary has been developing AI agents since early 2023, exploring diverse levels of autonomy and the integration of various tools. Our initial forays provided valuable insights into the potential of agentic systems. Currently, AI agents are actively employed across several internal tools and customer-facing features.

We developed a unique approach to agentic AI, prioritizing robustness and consistency by integrating optimized workflows for certain reasoning capabilities. With the advent of more advanced reasoning models and improved cost-effectiveness, we are strategically reintroducing greater autonomy to our AI agents.

One of the key superpowers of our AI agents is performing much of the “heavy lifting” that our detection engineering staff does when analyzing threats.

Our data processing pipeline sifts through petabytes of data to surface investigative leads for our analysts, who then need to determine whether each lead is an actual threat or just noise. Our AI agents can now perform much of this work on behalf of the analysts, enabling them to move through the remaining investigative leads significantly faster and with higher quality than before.

Note on terminology: We are aware of the confusion regarding different terms, such as AI agents, agentic AI, and agentic workflows. There is no current industry standard, and terminology is likely to keep evolving. Therefore, understanding the underlying concepts and how they are applied is more important than adhering strictly to specific terms.

Fine-tuning LLMs: Meet Frank-GPT

Learning from these initial experiments, the focus shifted towards fine-tuning, an area where Red Canary possessed a distinct advantage: its vast repository of high-quality, expert-labeled cybersecurity data. The team had already gained experience with fine-tuning techniques on earlier models like GPT-2 and other transformer variants, such as BERT.

A compelling demonstration of this approach was the “Frank-GPT” experiment. The team fine-tuned a large language model (GPT-3.5) specifically on the corpus of investigation comments and annotations produced by Frank McClain, one of Red Canary’s most seasoned and respected detection engineers. The results were striking: the fine-tuned model generated new threat analysis comments that mirrored Frank’s unique writing style, technical depth, and analytical substance.

This experiment was a powerful illustration of how leveraging specific, high-quality, expert-generated data could create custom LLM behavior for specialized tasks, effectively capturing and replicating valuable human expertise. This experiment made the abstract value of Red Canary’s labeled data concrete, showing a direct path from years of human effort to tangible AI capability.

Evolving agentic approaches

The concept of autonomous AI agents capable of reasoning, planning, and using tools to accomplish goals generated considerable excitement. Inspired by early frameworks like BabyAGI and AutoGPT, and by the potential for more autonomous operations, the team actively explored autonomous approaches, including multi-agent systems with numerous agent-focused tools.

Early efforts showed promise. Open source models were fine-tuned to decompose high-level instructions into sequences of well-defined tasks, a key component of agent execution capabilities. Agents were empowered with “tools” enabling them to perform actions like retrieving security telemetry, querying user activity logs, and enriching data using external intelligence APIs. In controlled experiments, this led to some amazing outcomes, showcasing the potential for agents to automate complex investigation steps.

However, scaling these autonomous agentic systems for Red Canary’s high-volume, real-time MDR workload revealed significant practical limitations, primarily around cost and latency. Agents heavily reliant on LLM calls for every decision step proved slow and expensive.

An agent might iterate multiple times:

- Call an LLM to decide the next step

- Use a tool to retrieve data

- Call the LLM again to analyze the data

- Call the LLM to decide on enrichment

- Use another tool

- Call the LLM to synthesize

- And so on

While observing the intermediate “thought” processes of these autonomous agents was fascinating, the cumulative latency and computational cost made this approach economically unviable and too slow for production-scale security response. These observations align with broader findings in the AI research community regarding the challenges of agent scalability, cost, and latency.
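The loop listed above can be sketched to show how LLM calls and latency pile up with each iteration. This is a minimal illustration, not Red Canary’s agent code: the function names are hypothetical, the LLM and tool calls are stubs, and the per-call latency figures are assumptions chosen only to make the arithmetic visible.

```python
from dataclasses import dataclass

# Assumed per-call latencies in seconds (illustrative numbers, not measurements).
LLM_LATENCY_S = 2.0
TOOL_LATENCY_S = 0.5


@dataclass
class Budget:
    """Running totals for one agent run."""
    llm_calls: int = 0
    tool_calls: int = 0
    latency_s: float = 0.0


def call_llm(budget: Budget, purpose: str) -> str:
    """Stub for an LLM call; only records its cost."""
    budget.llm_calls += 1
    budget.latency_s += LLM_LATENCY_S
    return purpose


def call_tool(budget: Budget, name: str) -> str:
    """Stub for a tool call; only records its cost."""
    budget.tool_calls += 1
    budget.latency_s += TOOL_LATENCY_S
    return name


def run_autonomous_agent(iterations: int) -> Budget:
    """Run the decide / retrieve / analyze / enrich / synthesize loop."""
    budget = Budget()
    for _ in range(iterations):
        call_llm(budget, "decide the next step")
        call_tool(budget, "retrieve data")
        call_llm(budget, "analyze the data")
        call_llm(budget, "decide on enrichment")
        call_tool(budget, "enrich data")
        call_llm(budget, "synthesize")
    return budget


budget = run_autonomous_agent(iterations=3)
print(budget)
```

Under these assumed figures, every iteration adds four LLM calls, so cost and latency grow linearly with the number of times the agent loops on a single investigation.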

Validating our successes

Underpinning all these applications is a rigorous commitment to extensive testing and validation of LLM outputs. Given the potential for inconsistencies or hallucinations, all GenAI-powered features undergo thorough evaluation to ensure they meet Red Canary’s high standards for accuracy and reliability, maintaining the 99 percent+ threat accuracy customers expect. This iterative process of development, testing, and refinement, always with human expertise in the loop, defines Red Canary’s practical and effective approach to GenAI.

The end product: Pragmatic, optimized agentic workflows

Faced with the scalability challenges of purely LLM-driven agents, Red Canary adopted a more pragmatic, hybrid approach. This involved strategically replacing parts of the agent’s decision-making logic, particularly the orchestration and simpler conditional steps, with traditional deterministic code. In these cases, LLMs were reserved for sub-tasks where their unique capabilities, like natural language understanding, summarization, and handling complex, unstructured data for entity and feature extraction, provided the most value.
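A minimal sketch of this hybrid pattern follows, assuming a hypothetical `investigate` function, an assumed severity cutoff, and stubbed telemetry and summarization helpers. Orchestration and the simple conditional steps are ordinary code, and the model is called only for the summarization sub-task. None of these names come from Red Canary’s platform.

```python
from typing import Callable, List

# Assumed cutoff for the deterministic gate (illustrative only).
SEVERITY_THRESHOLD = 3


def investigate(
    lead: dict,
    fetch_telemetry: Callable[[str], List[str]],
    summarize: Callable[[List[str]], str],
) -> dict:
    """Deterministic orchestration; the LLM is used only to summarize events."""
    # Simple conditional step handled in code, with no LLM call.
    if lead["severity"] < SEVERITY_THRESHOLD:
        return {"verdict": "closed", "summary": None}

    # Tool call chosen by code rather than by the model.
    events = fetch_telemetry(lead["host"])
    if not events:
        return {"verdict": "closed", "summary": None}

    # The single step reserved for the LLM: summarizing unstructured events.
    return {"verdict": "needs_review", "summary": summarize(events)}


def fake_fetch(host: str) -> List[str]:
    """Stub telemetry lookup returning made-up events."""
    return [f"{host}: encoded command executed", f"{host}: connection to rare domain"]


llm_calls: List[List[str]] = []


def fake_summarize(events: List[str]) -> str:
    """Stub for an LLM summarization call; records that it was invoked."""
    llm_calls.append(events)
    return f"{len(events)} related events observed"


low = investigate({"host": "ws-01", "severity": 1}, fake_fetch, fake_summarize)
high = investigate({"host": "ws-02", "severity": 4}, fake_fetch, fake_summarize)
print(low, high, len(llm_calls))
```

In this sketch the low-severity lead is closed without any model call, and the high-severity lead triggers exactly one, in contrast to the several calls per iteration in a fully LLM-driven loop.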

We still use various fully autonomous agents in smaller-scale applications, for both internal and customer-facing outcomes, but the large-scale ones rely on our optimized approach.

We’ve now released the following AI agents, with more on the way:

- SOC Analyst and Detection Engineering agents: A suite of endpoint, cloud, SIEM, and identity-focused AI agents that automate Tier 1/Tier 2 investigation and detection workflows for a specific system (e.g., Microsoft Defender for Endpoint, CrowdStrike Falcon Identity Protection platform, AWS GuardDuty, and Microsoft Sentinel), delivering high-quality root cause analysis and remediation.

- Response & Remediation agents: Provide specific, actionable response and remediation tactics alongside hardening steps to reduce future risk.

- Threat Intelligence agents: Compare batches of threats against known intelligence profiles and surface emerging trends with supporting analysis to speed intelligence operations.

- User Baselining & Analysis agents: Identify user-related risks by comparing real-time user behavior to historical patterns and proactively escalating suspicious anomalies.

Visit our AI agent resource hub to see the full list of agents in production.

What’s next?

As our AI platform evolves, we have been successfully experimenting with Model Context Protocol (MCP) servers to replace the dozens of AI agent-enabled tools we have developed. With more powerful reasoning models now available, we are also gradually and carefully increasing the autonomy of our agents for specific use cases. In addition, we are developing agents that analyze and interpret customer feedback and convert it into actionable items to improve and personalize the customer experience.

Why Red Canary was ready for the AI wave

Red Canary’s readiness for the GenAI era can be traced back to fundamental aspects of the design and operation of our core product, established long before the recent surge in AI interest. These foundational elements created an environment uniquely suited for leveraging advanced AI techniques effectively.

Red Canary’s journey with GenAI underscores a crucial point: sustainable success in applying cutting-edge technology often relies more on solid foundations than on chasing the latest spectacle. Our ability to effectively integrate GenAI was not a reaction to the hype cycle but rather a direct consequence of years spent building a robust foundation. This foundation comprises several key elements:

- the meticulous curation of vast amounts of high-quality expert-labeled cybersecurity data

- the development of sophisticated, scalable data pipelines

- the cultivation of a culture blending engineering excellence with dedicated innovation and deep human expertise

The path involved exploration and learning. Ultimately, Red Canary is integrating GenAI thoughtfully by building on its unique strengths: its data, its processes, and its people. The focus remains on practical applications that solve real security problems and deliver measurable value, prioritizing substance over the allure of technological spectacle.

Our journey with AI is ongoing; the landscape continues to evolve rapidly. Red Canary remains committed to exploring and leveraging these powerful tools responsibly and effectively, always guided by its core mission to improve security outcomes for customers and maintain the highest standards of quality, accuracy, and trustworthiness.