In the first installment of The Validated Canary series, we discussed the importance of scalable, threat-focused, end-to-end testing at Red Canary. We test our systems in two ways: by verifying how they process data (i.e., how reliably we observe activity at scale across our customers’ endpoints, identities, SaaS apps, and cloud systems) and by functionally validating how effectively we use that data to detect malicious, suspicious, or unwanted activity. For functional detection testing, we need endpoints with defensive tooling and a way to simulate adversary behaviors and generate telemetry.

Put simply, we need a way to emulate a threat or technique and validate that our systems observe or detect it, and we have to do this in a way that is repeatable and measurable. So we developed a set of tools that let us do this at scale, across different combinations of operating systems, EDR agents, and other security tools. The Coalmine project started as a way to take the pain out of creating and instrumenting virtual machines (VMs).

Meet Coalmine

We consume and standardize many data sources, adding our own detection analytics to provide broad, deep, and confident coverage that is enriched by our unique threat research and intelligence. Coalmine automates the creation and instrumentation of VMs so that we can continually test multiple EDR sensors in parallel. We can run Coalmine manually or on an automated schedule, which lets us discover unexpected behavior in our partners’ EDR sensors or Red Canary engine components and provides historical data and trends for future analysis.

We use several existing tools for detection validation, including the well-known Atomic Red Team for reproducing TTPs tied to the ATT&CK framework, Atomic Test Harnesses for reproducing many variations of a technique, and Vuvuzela, our internal tool and test oracle for validating discrete activities, event types, detections, and data flow across numerous EDR sensors and cloud systems. With this in mind, Coalmine had to be easily composable with these tools so that adding new operating system versions, EDR sensors, or test suites would be as close to plug-and-play as possible.

Luckily, we were able to stand on the shoulders of giants and learn from existing projects such as Detection Lab and Splunk Attack Range. Both projects simplify the construction of lab environments for deep-dive analysis of endpoint telemetry across a static set of target virtual machines. That approach supports threat research, but our system needed to work more like end-to-end continuous integration (CI) for detection systems, with support for multiple proprietary EDR sensors. Thus, Coalmine was born out of the need for a more dynamic approach to building ephemeral VMs that support multiple EDR sensors.

The name was derived from the “canary in the coalmine” idiom. Before electronic detectors existed, miners used canaries as an early indicator of carbon monoxide and other toxic gases. Here at Red Canary, Coalmine serves to create early warnings that enable us to improve our systems.

The system

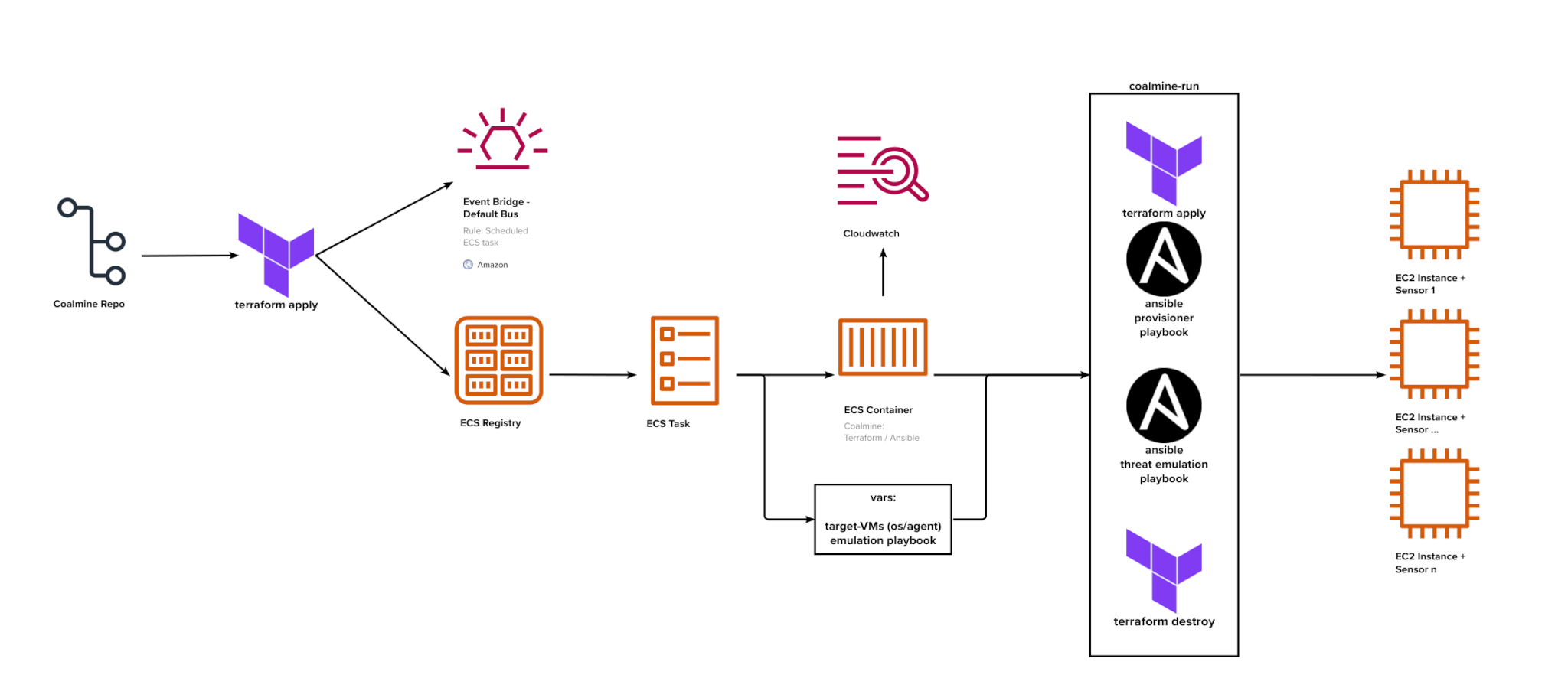

AWS and standard DevOps tools have given us the flexibility to create and package VMs with sensors and test them at scale. Terraform is an Infrastructure as Code (IaC) tool that provisions and manages cloud components. We used Terraform to provision all of our AWS components, including the target VMs we used for testing.

Once built with Terraform, we use Ansible to both configure the target environments and execute the tests. Ansible is a DevOps tool for automation and agentless configuration management, which allows us to wrap tests or test-frameworks and deliver them to targets.

For a given test, we define a “target set” of VMs and sensors. For example, we may want to run tests against all sensors available for Windows Server 2022, run one sensor on multiple versions of Windows, or run another sensor on different distributions of Linux.

We define these combinations through Terraform variables. When Terraform builds the VMs, it calls an ansible-playbook (a set of tasks) to provision each VM with its required sensor.
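To make the idea of a target set concrete, here is a minimal Python sketch of expanding operating systems and sensors into one VM per supported combination. It is not Coalmine's code: the OS names, sensor names, and the `SUPPORTED` matrix are hypothetical placeholders. In Terraform itself, a similar expansion can be expressed with the `setproduct` function feeding a `for_each`.

```python
from itertools import product
from typing import NamedTuple


class Target(NamedTuple):
    """One VM to build: an operating system image paired with one EDR sensor."""
    os: str
    sensor: str


# Hypothetical support matrix: which sensors can be installed on which OS.
SUPPORTED = {
    "windows-server-2022": {"sensor_a", "sensor_b", "sensor_c"},
    "windows-10": {"sensor_a", "sensor_b"},
    "ubuntu-22.04": {"sensor_a", "sensor_c"},
}


def target_set(oses: list[str], sensors: list[str]) -> list[Target]:
    """Expand every OS/sensor pair, dropping combinations that are unsupported."""
    return [
        Target(os_name, sensor)
        for os_name, sensor in product(oses, sensors)
        if sensor in SUPPORTED.get(os_name, set())
    ]


# "All sensors on Windows Server 2022" yields three targets;
# "sensor_b across Windows 10 and Ubuntu" yields only the Windows 10 VM.
print(target_set(["windows-server-2022"], ["sensor_a", "sensor_b", "sensor_c"]))
print(target_set(["windows-10", "ubuntu-22.04"], ["sensor_b"]))
```

Filtering against a support matrix keeps one list of requested OSes and sensors reusable even when not every sensor runs on every platform.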

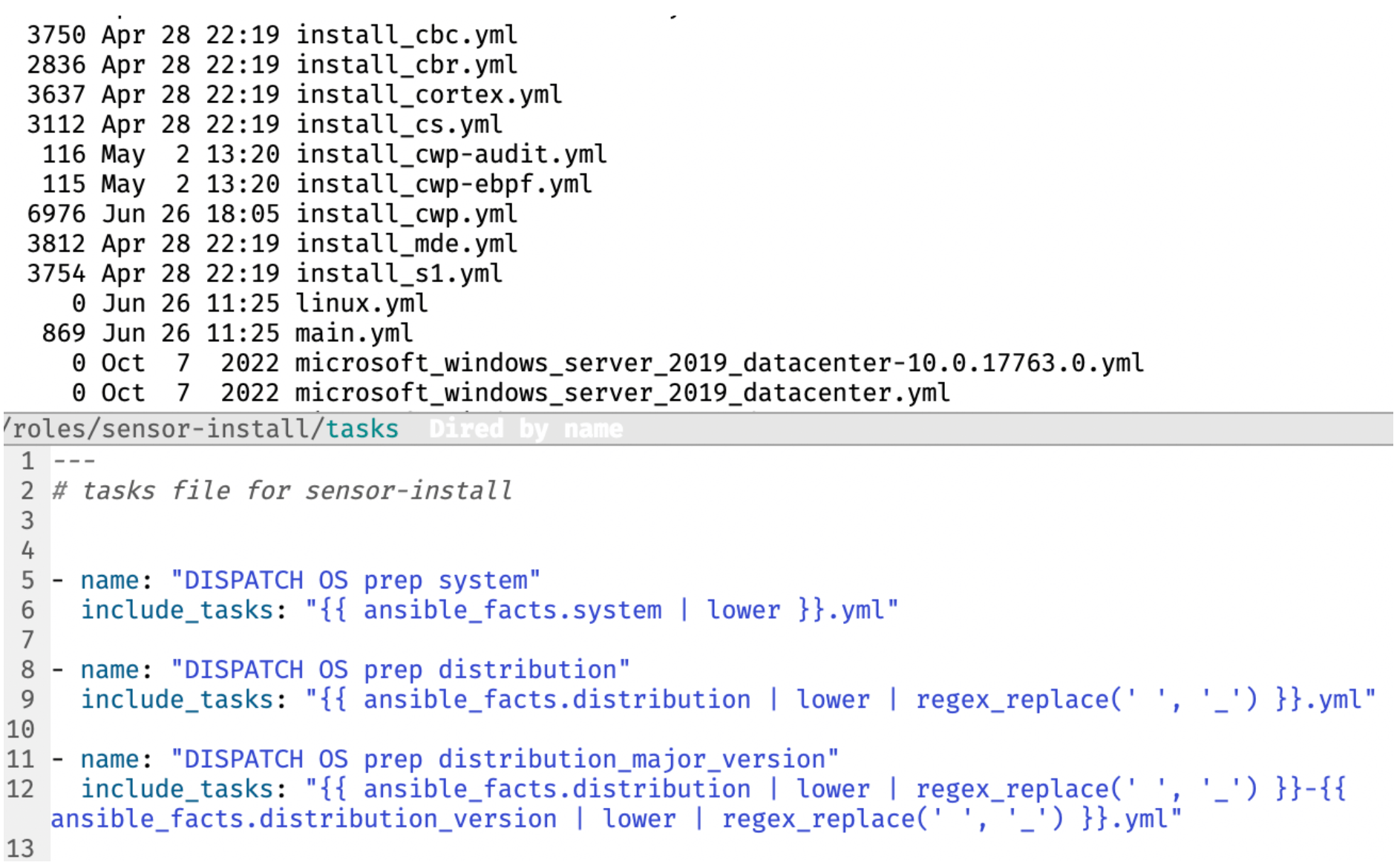

The provisioner then uses an Ansible role containing install tasks for each EDR. The role selects the right tasks by “dispatching” on the ansible_facts that describe the VM and on variables that set the target EDR, then installs the EDR with a non-blocking policy for testing.

Giving the EDR agent a permissive policy during testing allows the tests to run to completion while still providing visibility and feedback on what the system detects.
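The dispatch step can be pictured as a lookup keyed on the VM's facts and the requested EDR. The Python sketch below is illustrative only: the sensor names and task file names are hypothetical, and in an actual Ansible role this would more likely be an `include_tasks` with a templated file name. The `os_family` values (`Windows`, `Debian`, `RedHat`) are ones Ansible reports in `ansible_facts`.

```python
# Hypothetical mapping from (OS family reported by Ansible, target EDR) to the
# task file that installs that EDR with a non-blocking (detect-only) policy.
INSTALL_TASKS = {
    ("Windows", "sensor_a"): "sensor_a_windows.yml",
    ("Debian", "sensor_a"): "sensor_a_linux.yml",
    ("RedHat", "sensor_a"): "sensor_a_linux.yml",
    ("Windows", "sensor_b"): "sensor_b_windows.yml",
}


def select_install_tasks(ansible_facts: dict, target_edr: str) -> str:
    """Pick the install task file for this VM, failing loudly if unsupported."""
    os_family = ansible_facts.get("os_family")
    try:
        return INSTALL_TASKS[(os_family, target_edr)]
    except KeyError:
        raise ValueError(f"no install tasks for {target_edr} on {os_family}") from None


print(select_install_tasks({"os_family": "Debian"}, "sensor_a"))  # sensor_a_linux.yml
```

Failing on an unknown combination, rather than silently skipping it, keeps a misconfigured target set from producing a VM that has no sensor installed.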

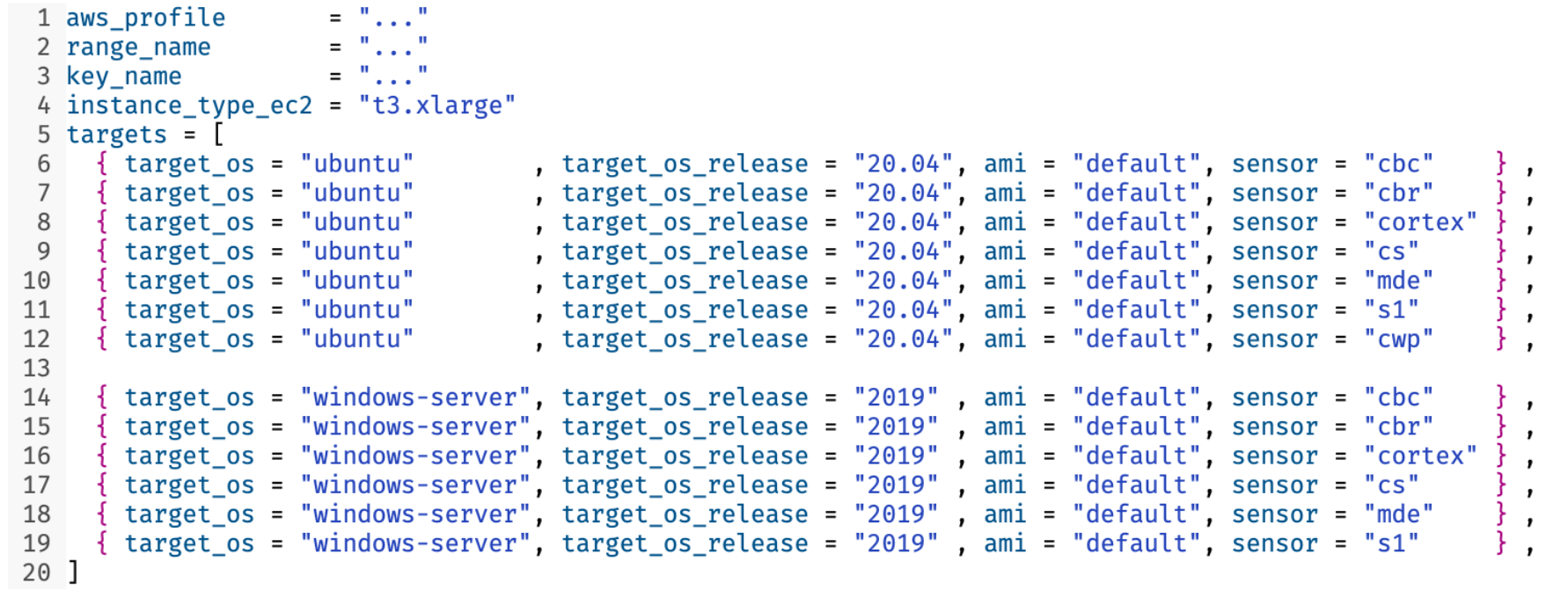

Next, we provide a tfvars file to Terraform containing a list of targets and other settings.

tfvars with a list of potential targets for Terraform builds. Actual subsets of this list are templated by a shell script as needed for specific tests.

Terraform iterates through the targets, calling its modules to build one VM for each operating system and sensor combination. Once the build completes, Terraform creates ansible_inventory files that use the aws_ec2 Ansible inventory plugin to give Ansible information about the target VMs. This lets every ansible-playbook run see the newly created VMs and access the keys, usernames, and credentials required for each target.

Templating the Ansible inventory for each run proved to be a powerful tool for automation. It lets us execute tests on different sets of target EC2 instances simultaneously while ensuring that the tests and VMs from one set do not interfere with those from another. It also lets us automate building target VMs and executing tests independently, then compose the two. Anything we can wrap in an ansible-playbook, we can now deliver to a target set of VMs and EDRs.
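As a rough illustration of per-run templating, the Python sketch below stands in for the shell script and the inventory step: it writes only the requested subset of targets to a `*.tfvars.json` file (a format Terraform accepts via `-var-file`) and renders an aws_ec2 inventory config that only sees instances tagged with the current run. The target entries, the `run_id` variable, and the `RunId`/`Sensor` instance tags are assumptions, not Coalmine's actual names.

```python
import json

# Hypothetical master list of potential targets, mirroring the full tfvars file.
ALL_TARGETS = [
    {"name": "win2022-sensor-a", "os": "windows-server-2022", "sensor": "sensor_a"},
    {"name": "win2022-sensor-b", "os": "windows-server-2022", "sensor": "sensor_b"},
    {"name": "ubuntu2204-sensor-a", "os": "ubuntu-22.04", "sensor": "sensor_a"},
]


def render_tfvars(run_id: str, os_filter: set[str], sensor_filter: set[str]) -> str:
    """Render a *.tfvars.json document holding only the targets for this run."""
    subset = [
        t for t in ALL_TARGETS
        if t["os"] in os_filter and t["sensor"] in sensor_filter
    ]
    return json.dumps({"run_id": run_id, "targets": subset}, indent=2)


def render_inventory(run_id: str, region: str) -> str:
    """Render an aws_ec2 inventory config scoped to this run's instances.

    JSON is valid YAML, so the result can be saved as e.g. run-1.aws_ec2.yml
    (the plugin expects the file name to end in aws_ec2.yml or aws_ec2.yaml).
    """
    config = {
        "plugin": "amazon.aws.aws_ec2",
        "regions": [region],
        # Assumes Terraform tags every instance it builds with a RunId tag.
        "filters": {"tag:RunId": run_id, "instance-state-name": "running"},
        # Group hosts by an assumed Sensor tag so a playbook can target one EDR.
        "keyed_groups": [{"key": "tags.Sensor", "prefix": "sensor"}],
    }
    return json.dumps(config, indent=2)


print(render_tfvars("run-1", {"windows-server-2022"}, {"sensor_a", "sensor_b"}))
print(render_inventory("run-1", "us-east-1"))
```

Scoping the inventory by a run tag is one way to get the isolation described above: two runs can share an AWS account without either playbook seeing the other's VMs.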

Behavior emulation

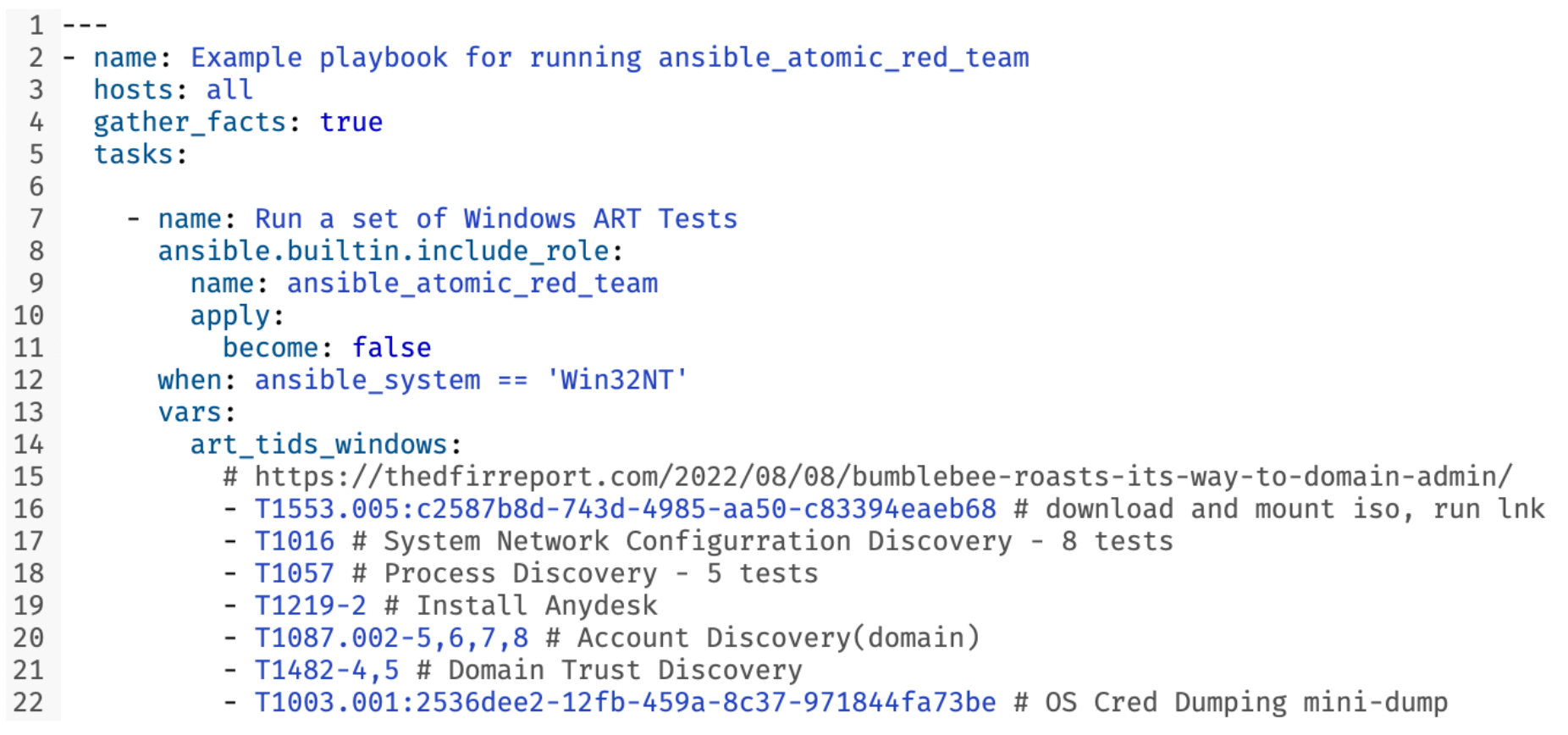

Ansible also makes it easy to bundle prerequisites and configuration with specific tests or testing frameworks. The first ansible-playbook we wrote leveraged Atomic Red Team. The playbook uses an Ansible role, ansible-atomic-red-team, to install Invoke-Atomic along with other prerequisites; on Ubuntu, CentOS, and Amazon Linux, for instance, it installs PowerShell Core. The role then runs a subset of technique IDs (TIDs) specified in the playbook, minus a denylist of TIDs that aren’t conducive to repeat testing (e.g., T1070.004-8, Delete Filesystem). For each TID, the role calls Invoke-AtomicTest to run the prerequisite install, the test, and the cleanup functions.
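The selection logic described above (a subset of tests minus a denylist, then prerequisites, test, and cleanup for each) can be sketched in Python as a command plan. This is not the role's implementation: the `TID-N` selection format and the example IDs are assumptions for illustration. The `Invoke-AtomicTest` parameters used (`-TestNumbers`, `-GetPrereqs`, `-Cleanup`) are part of Invoke-AtomicRedTeam.

```python
# Hypothetical selection format: "TID-N" means atomic test number N of technique TID.
SELECTED = ["T1059.001-1", "T1070.004-8", "T1082-1"]
DENYLIST = {"T1070.004-8"}  # Delete Filesystem: not safe to run repeatedly


def plan_atomic_runs(selected: list[str], denylist: set[str]) -> list[str]:
    """Build Invoke-AtomicTest commands: prereqs, test, then cleanup, per test."""
    commands = []
    for entry in selected:
        if entry in denylist:
            continue  # skip tests that would damage the VM or break re-runs
        tid, _, number = entry.rpartition("-")
        base = f"Invoke-AtomicTest {tid} -TestNumbers {number}"
        commands += [f"{base} -GetPrereqs", base, f"{base} -Cleanup"]
    return commands


for cmd in plan_atomic_runs(SELECTED, DENYLIST):
    print(cmd)
```

Always pairing each test with its cleanup step is what lets the same VM, or the same schedule, run the suite again without accumulating leftover artifacts.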

Following the success of that initial playbook, we added more playbooks that reuse our Atomic Red Team role to run subsets of TTPs. These generate an “interesting test set” of data, including some filemods, regmods, and similar events, that is guaranteed to trigger our detections.

atomic_red_team role to run Atomic Red Team TTPs related to BumbleBee.

These playbooks allowed us to generate these event types dynamically across EDRs to test our telemetry collection and schema normalization. For example, we recently tested the new SentinelOne Cloud Funnel 2.0 data source and its integration into our platform. We compared the results against our Cloud Funnel 1.0 integration to ensure we weren’t losing coverage before switching customers over to Cloud Funnel 2.0.
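A coverage comparison like the Cloud Funnel one can be reduced to a set difference per test. The Python sketch below assumes each integration's results are summarized as a mapping from test ID to the event types observed; that data shape and the event-type names are hypothetical, not how our results are actually stored.

```python
def lost_coverage(
    baseline: dict[str, set[str]], candidate: dict[str, set[str]]
) -> dict[str, set[str]]:
    """Return, per test, event types seen in the baseline but missing in the candidate."""
    lost = {}
    for test, seen in baseline.items():
        missing = seen - candidate.get(test, set())
        if missing:
            lost[test] = missing
    return lost


# Hypothetical results: the candidate integration misses one network event.
baseline = {"T1059.001-1": {"process_start", "network_connection"}, "T1082-1": {"process_start"}}
candidate = {"T1059.001-1": {"process_start"}, "T1082-1": {"process_start", "filemod"}}
print(lost_coverage(baseline, candidate))  # {'T1059.001-1': {'network_connection'}}
```

Only regressions are reported here; event types that appear solely in the candidate (like the extra `filemod`) are new coverage and would not block a migration.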

To dive deeper into event-level testing, we added a Vuvuzela playbook. The Vuvuzela system combines record-level telemetry generation, expected-value capture, AWS Lambda functions for analysis, and Splunk dashboards for presentation. We will describe it in detail in a future blog post, but daily runs of Vuvuzela tests executed via Coalmine have already given us early warning of issues such as:

- regressions in new integrations or in the regular development cycle, before they are released to customers

- additional data sources that should be integrated into our detection engine to enhance threat coverage

- gaps in alert correlation to EDR telemetry
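The general idea of a test oracle that pairs generated telemetry with expected values can be sketched as follows. This is not Vuvuzela's design, which is left for the future post: the `Expected` record, its `key` field (for instance, a unique command line planted by the test), and the event-type names are all hypothetical. The sketch scores found versus expected records per event type, the kind of summary a drilldown dashboard could display.

```python
from collections import Counter
from typing import NamedTuple


class Expected(NamedTuple):
    """A record the emulation step expects the pipeline to produce (hypothetical)."""
    event_type: str
    key: str  # e.g., a unique command line or file path planted by the test


def score_by_event_type(
    expected: list[Expected], observed: set[tuple[str, str]]
) -> dict[str, tuple[int, int]]:
    """Return {event_type: (found, expected)} for a per-event-type drilldown."""
    totals = Counter(e.event_type for e in expected)
    found = Counter(e.event_type for e in expected if (e.event_type, e.key) in observed)
    return {etype: (found[etype], totals[etype]) for etype in totals}


expected = [
    Expected("process_start", "cmd-1"),
    Expected("process_start", "cmd-2"),
    Expected("filemod", "/tmp/x"),
]
observed = {("process_start", "cmd-1"), ("filemod", "/tmp/x"), ("regmod", "k")}
print(score_by_event_type(expected, observed))  # {'process_start': (1, 2), 'filemod': (1, 1)}
```

Observed records that were never expected (the `regmod` above) are ignored by this score; a fuller oracle might report them separately as noise or new coverage.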

Automation

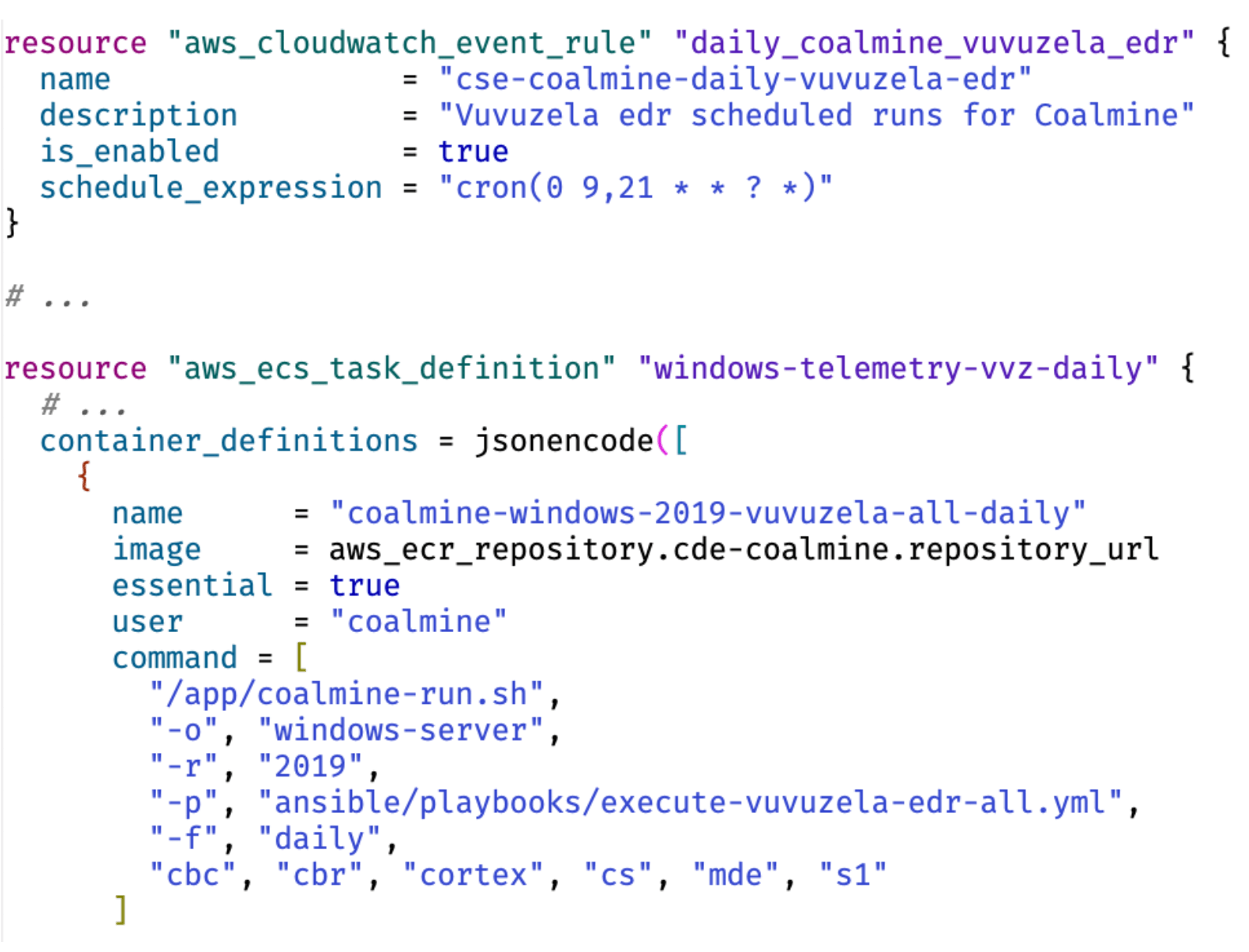

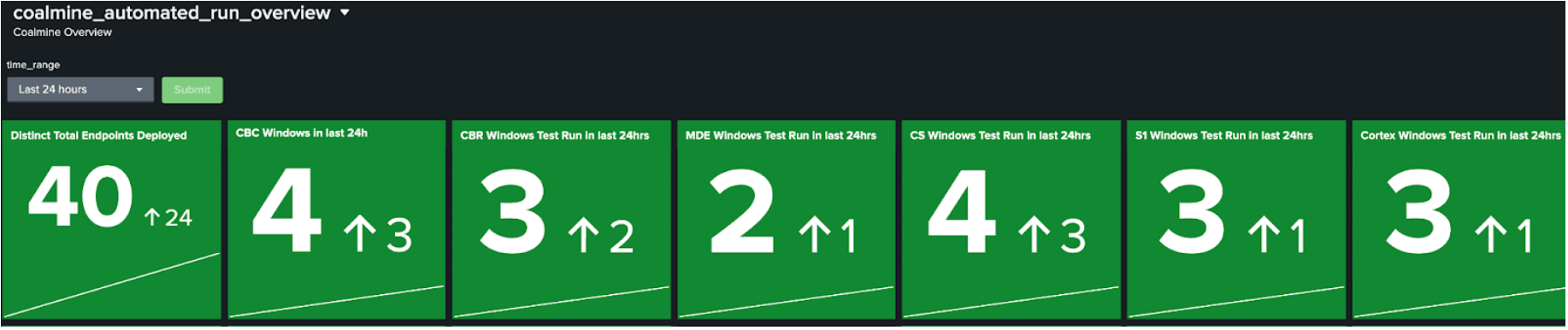

We run the Terraform and Ansible workflow described above on AWS, which also handles scheduling and logging. ECS Fargate provides serverless containers on AWS without requiring us to manage the underlying container host infrastructure. ECS uses task definitions, which allow the container to run with different arguments and environment variables. These containers can be started on a schedule defined with Amazon EventBridge Scheduler. We use Terraform to define these components, which launch Coalmine on a “cron” schedule. Currently, these include daily runs of the “vuvuzela” and “telemetry-gen” tests. The “telemetry-gen” tests provide a quick “is this thing on?” check of expected detections from a short list of Atomic Red Team tests.
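To show how a scheduled Fargate launch fits together, the Python sketch below builds a request for the EventBridge Scheduler `CreateSchedule` API. The suite names, ARNs, subnet IDs, and the choice of one task definition per suite are hypothetical; we define these components in Terraform, whose `aws_scheduler_schedule` resource exposes equivalent settings.

```python
def schedule_request(suite: str, cron: str, cluster_arn: str, task_def_arn: str,
                     role_arn: str, subnets: list[str]) -> dict:
    """Build a CreateSchedule request that launches one Coalmine suite on Fargate."""
    return {
        "Name": f"coalmine-{suite}",
        # Scheduler cron fields: minutes hours day-of-month month day-of-week year
        "ScheduleExpression": f"cron({cron})",
        "FlexibleTimeWindow": {"Mode": "OFF"},
        "Target": {
            "Arn": cluster_arn,  # for ECS targets, the target ARN is the cluster
            "RoleArn": role_arn,  # role the scheduler assumes to call ecs:RunTask
            "EcsParameters": {
                # A per-suite task definition carries that suite's arguments/env.
                "TaskDefinitionArn": task_def_arn,
                "LaunchType": "FARGATE",
                "TaskCount": 1,
                "NetworkConfiguration": {
                    "awsvpcConfiguration": {"Subnets": subnets, "AssignPublicIp": "DISABLED"}
                },
            },
        },
    }


request = schedule_request(
    "vuvuzela",
    "0 6 * * ? *",  # daily at 06:00 UTC
    "arn:aws:ecs:us-east-1:111111111111:cluster/coalmine",
    "arn:aws:ecs:us-east-1:111111111111:task-definition/coalmine-vuvuzela:1",
    "arn:aws:iam::111111111111:role/coalmine-scheduler",
    ["subnet-0123456789abcdef0"],
)
print(request["Name"], request["ScheduleExpression"])
```

With boto3 installed, the request could be submitted as `boto3.client("scheduler").create_schedule(**request)`.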

Along with benign threat emulation that triggers specific internal detectors and threat intelligence IOCs, Vuvuzela tests provide a drilldown dashboard by event type. These tests execute separately against all Windows and Linux EDRs. Additionally, we run targeted testing for Red Canary’s own Linux EDR.

Example of how Coalmine is used within AWS ECS Fargate containers. coalmine-run templates the tfvars, builds the VMs with Terraform, runs the emulation playbook against the VMs, then terminates the VMs and performs other cleanup tasks. Amazon CloudWatch captures logs from each step.
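The core of a run like this is build, test, and always tear down. Here is a minimal Python sketch of that sequence, assuming the tfvars file and inventory config have already been templated; the function and file names are hypothetical and this is not the actual coalmine-run script. The `run` parameter is injectable so the sequence can be exercised without invoking Terraform or Ansible.

```python
import subprocess
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], None]


def default_runner(cmd: Sequence[str]) -> None:
    """Run a command, raising CalledProcessError on a non-zero exit."""
    subprocess.run(list(cmd), check=True)


def coalmine_run(var_file: str, inventory: str, playbook: str,
                 run: Runner = default_runner) -> None:
    """Build the VMs, run one emulation playbook against them, and always tear down."""
    run(["terraform", "init", "-input=false"])
    try:
        run(["terraform", "apply", "-auto-approve", "-input=false", f"-var-file={var_file}"])
        run(["ansible-playbook", "-i", inventory, playbook])
    finally:
        # Destroy even if the build or the playbook failed, so no VMs keep running.
        run(["terraform", "destroy", "-auto-approve", "-input=false", f"-var-file={var_file}"])


# Dry run: record the commands instead of executing them.
calls: list = []
coalmine_run("run-1.tfvars.json", "run-1.aws_ec2.yml", "vuvuzela.yml", run=calls.append)
for cmd in calls:
    print(" ".join(cmd))
```

Putting the destroy step in a `finally` block is the important design choice: ephemeral VMs are only ephemeral if a failed test cannot skip cleanup.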

Conclusion

Coalmine is one of many tools that help Red Canary protect its customers. We’re glad to have refined Coalmine toward the “Unix philosophy”: a simple component that does one thing well, which here means letting us stage behaviors against sets of EDR and VM targets for dynamic testing. This process helps us “show our work” from end to end and increases our confidence in our ability to defend our customers against cyber attacks.

We’ve also seen early potential for Coalmine in threat research. Much like the previously mentioned Splunk Attack Range and Detection Lab, we wanted to give our detection engineers a simple way to provision test machines without having to configure the VMs themselves. As the project matured, we scoped out this idea and built a separate internal system, dubbed Collider, that provides a cloud-hosted web interface for preconfigured threat research targets.

We’re sharing the Ansible role ansible-atomic-red-team, which facilitates delivering a set of atomic tests to a group of VMs. In the next blog post in our Validated Canary series, you’ll learn more about Vuvuzela, and how it helps us deep-dive into EDR telemetry data.