Even mature security teams sometimes make mistakes. This series of blog posts addresses common mistakes based on real-world engagements with teams of all sizes and maturity levels. The author, Phil Hagen, is a long-time information security strategist, digital forensics practitioner, and SANS Certified Instructor. Part of Phil’s role at Red Canary is to educate organizations about ways to solve problems and improve their security posture.

Other posts in this series include:

- Common Security Mistake #2: Focusing on the Perimeter

- Common Security Mistake #3: Aimless Use of Threat Intelligence

We simply cannot improve what isn’t measured; this is a hard-learned lesson as old as time. By the same token, we cannot expect to secure what we cannot see. Visibility is a perpetual shortcoming in many information security and incident response engagements. Without it, the IR and broader security teams can’t expect to see an attacker’s activities, scope the compromise to determine affected endpoints, or validate post-remediation controls to ensure they are working.

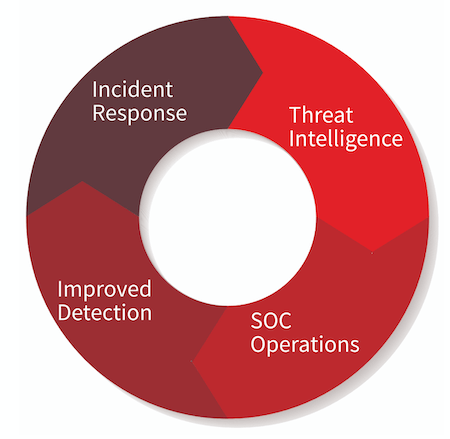

Deploying proactive visibility solutions is a technological step that supports multiple functions in the broader security process; it helps security teams to feed detection and to baseline their environment. As the IR process generates threat intelligence, the security operations process consumes that intelligence to improve detection.

This is a continuous cycle, where each party depends on the products of the other.

So how does this translate to the real world?

As with many information security topics, it depends on the environment—but there are a few common themes we can explore. We’ll take a brief look at each of these in turn, as well as some ways to achieve visibility in the enterprise.

There are two primary ways most organizations seek to improve their visibility:

1: Network Visibility

This is perhaps the first place many organizations look to increase their awareness of the environment, and for good reason. Network collection solutions are plentiful across the spectrum of pricing, and the visibility they provide can directly support IT’s operational charters. Data such as port/protocol usage, highest- and lowest-volume IP addresses and networks, common peer ASNs, most frequently queried DNS hostnames, and the like all feed the network operations team’s need to provide reliable and fast service. Fortunately, network observations such as these support security-related objectives as well. Newly observed peers or queried domains could suggest a spike in suspicious activity that warrants further investigation. An IP address sending an excessive volume of traffic out of the environment could suggest a data exfiltration event.
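As a rough illustration of the two signals described above, the following Python sketch flags newly observed domains and hosts with unusually high outbound volume. The function names, the sample data, and the 1 GB threshold are all assumptions made for this example; real inputs would come from whatever DNS logs and flow records the environment already collects.

```python
from collections import defaultdict


def newly_observed_domains(baseline_domains, todays_queries):
    """Return domains queried today that do not appear in the baseline set."""
    return sorted(set(todays_queries) - set(baseline_domains))


def heavy_outbound_hosts(flows, threshold_bytes):
    """Sum outbound bytes per source IP and keep those above the threshold."""
    totals = defaultdict(int)
    for src_ip, bytes_out in flows:
        totals[src_ip] += bytes_out
    return {ip: total for ip, total in totals.items() if total > threshold_bytes}


# Hypothetical sample data standing in for DNS logs and flow records.
baseline = {"example.com", "intranet.example.com"}
queries = ["example.com", "update.example.net", "example.com"]
flows = [
    ("10.0.0.5", 4_000_000),
    ("10.0.0.9", 900_000_000),
    ("10.0.0.9", 700_000_000),
]

new_domains = newly_observed_domains(baseline, queries)
heavy = heavy_outbound_hosts(flows, threshold_bytes=1_000_000_000)
print(new_domains)
print(heavy)
```

Neither result is proof of compromise on its own. Both are simply leads that an analyst would want to review against what is normal for the environment.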

Numerous platforms can provide this critical visibility, including:

- NetFlow collection architecture

- Web proxies

- Passive DNS collectors

- Network security monitors such as Bro (since renamed Zeek) or Security Onion

- Full-packet capture solutions such as NetWitness or Moloch (since renamed Arkime)

2: Host-Based Visibility

Establishing visibility at the host level can be more challenging than at the network level because of the scale involved. While collecting network data from a few dozen points of presence/observation is a relatively straightforward process, pulling similar data from 1,000-10,000+ endpoints is a completely different challenge. Fortunately, a growing set of solutions addresses it. These are generally in the commercial space, given that scaling to this size is a significant engineering task.

Host-based visibility solutions include:

- Carbon Black, which can provide deep endpoint visibility such as process-centric awareness of modules loaded, network sockets attempted, and filesystem and registry modifications

- CrowdStrike’s Falcon Host product

- Basic solutions such as antivirus scanners that can provide wide-reaching visibility into behaviors on the endpoint level

However, as we’ll explore next, some means of centralizing these observations is needed. Some of these platforms can generate upwards of 1M events per system per day, so while their collection capabilities are scalable, the analysis capabilities must scale accordingly.

Scaling Requires Log Aggregation

Perhaps the most formidable hurdle for many organizations seeking visibility across the environment is managing the massive volume of data that ends up being collected. This is commonly addressed with a SIEM or similar log aggregation solution. These platforms collect data from across the environment, then present the data to the analyst or operator in some usable format. Often this involves some form of data enrichment, filtering, or similar tweaking of the input data to maximize its value to later processes.
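To make the enrichment and filtering step concrete, here is a minimal Python sketch of what such a stage might do before events reach the aggregation platform. The event fields (`type`, `dest_ip`), the `heartbeat` event type, and the internal network range are hypothetical and chosen only for illustration; they do not reflect any particular SIEM's schema.

```python
import ipaddress

# Assumed values for this example only.
INTERNAL_NETS = [ipaddress.ip_network("10.0.0.0/8")]
NOISY_EVENT_TYPES = {"heartbeat"}


def is_internal(ip):
    """Return True if the address falls inside an assumed internal range."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in INTERNAL_NETS)


def prepare(event):
    """Filter out low-value events and enrich the rest with a direction flag."""
    if event["type"] in NOISY_EVENT_TYPES:
        return None  # filtered: not forwarded to the aggregation platform
    enriched = dict(event)  # copy so the raw event is left unchanged
    enriched["dest_internal"] = is_internal(event["dest_ip"])
    return enriched


raw_events = [
    {"type": "heartbeat", "dest_ip": "10.1.2.3"},
    {"type": "netconn", "dest_ip": "10.1.2.3"},
    {"type": "netconn", "dest_ip": "203.0.113.7"},
]

prepared = [e for e in map(prepare, raw_events) if e is not None]
for event in prepared:
    print(event)
```

Doing this work before ingestion means the analyst sees fewer, more informative records, and the decision about what counts as noise is written down in one place rather than rediscovered during each investigation.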

However, all too often, organizations take the simple approach of “just collecting everything” before quickly realizing they’ve exceeded their SIEM’s consumption license limit, available storage volume, or both. It is therefore important to gradually add visibility to the overall security posture.
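A quick back-of-the-envelope calculation shows how fast "collect everything" can outrun a license. The Python sketch below uses the figure of roughly 1M events per system per day cited earlier; the 500-byte average event size and the 100 GB/day license are assumptions picked purely for illustration, so substitute measured values from your own environment.

```python
def daily_ingest_gb(endpoints, events_per_endpoint, avg_event_bytes):
    """Estimate total daily ingest in gigabytes (1 GB = 1e9 bytes)."""
    return endpoints * events_per_endpoint * avg_event_bytes / 1e9


def max_endpoints_within_license(license_gb_per_day, events_per_endpoint, avg_event_bytes):
    """Estimate how many endpoints fit under a daily ingest license."""
    per_endpoint_gb = events_per_endpoint * avg_event_bytes / 1e9
    return int(license_gb_per_day // per_endpoint_gb)


# Assumed inputs: 1,000 endpoints, 1M events each per day, 500 bytes per event.
estimate = daily_ingest_gb(
    endpoints=1_000, events_per_endpoint=1_000_000, avg_event_bytes=500
)

# Assumed license: 100 GB of ingest per day.
fit = max_endpoints_within_license(
    license_gb_per_day=100, events_per_endpoint=1_000_000, avg_event_bytes=500
)

print(f"Estimated ingest: {estimate:.0f} GB/day")
print(f"Endpoints that fit under the license: {fit}")
```

Under these assumed numbers, a 1,000-endpoint rollout would need about five times the licensed volume, which is the kind of gap that argues for adding data sources in stages.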

Available SIEM Solutions

Countless solutions provide this functionality. The SIEM market is quite competitive, and most SOC teams have their preferred (or strongly non-preferred) commercial vendor such as LogRhythm, Splunk, or QRadar. However, a great deal of innovation is taking place here as well. For example, the open-source ELK stack (Elasticsearch, Logstash, and Kibana) has captured the attention of the information security community along with the broader data analytics space. Graylog is another open-source platform that is focused specifically on log aggregation and analytics.

Regardless of the platform, having all monitoring-related data in a central location enables quick incident response. In turn, the threat intelligence generated during the IR process feeds back to the security operations team, which can then leverage that intelligence for detection processes.

Long-term Data Retention Speeds Response and Threat Hunting

Finally, consider a significant non-technical hurdle to achieving effective visibility—longevity. With attackers’ dwell times hovering at a median of 99 days globally, data retention for logs and related evidence remains an important policy for any security program. To put it bluntly, you can have the greatest visibility platforms in the known universe, but if their collected data is aged out before you know to start chasing the attacker, that visibility is for naught.

Data retention periods must extend beyond the organization’s expected time to discover and investigate an incident. Idealism is not a friend in this process. Remember that 99 days is the median, so half of all breaches take longer than that to reach first detection. Numerous factors could push the dwell time of an individual breach past a year.
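The point about the median can be checked with a small Python sketch. The dwell times below are invented sample values, not real breach data, and the `coverage` helper is a hypothetical name; the example only shows that a retention window set equal to the median dwell time leaves a large share of incidents without their earliest evidence.

```python
from statistics import median


def coverage(dwell_days, retention_days):
    """Fraction of incidents whose full dwell period fits inside the retention window."""
    covered = sum(1 for days in dwell_days if days <= retention_days)
    return covered / len(dwell_days)


# Hypothetical dwell times in days, chosen so that the median is 99.
sample_dwell = [12, 45, 80, 99, 150, 240, 400]

mid = median(sample_dwell)
at_median = coverage(sample_dwell, retention_days=mid)
at_one_year = coverage(sample_dwell, retention_days=365)

print(f"Median dwell time: {mid} days")
print(f"Coverage with retention equal to the median: {at_median:.0%}")
print(f"Coverage with one year of retention: {at_one_year:.0%}")
```

Even a full year of retention does not cover every incident in this made-up sample, which is why the retention decision benefits from looking at the tail of the distribution rather than its midpoint.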

What to Consider When Establishing Data Retention Policies

While log retention policy is more a legal and management issue than a technical one, the security interest should be represented in the decision-making process. In many cases, the strongest argument is a financial one. The cost of investigating a breach, especially in a regulated environment, is significantly higher without the benefit of the visibility products highlighted above and others important to the organization’s security processes. This historical data retention can also feed directly into hunt team operations (using existing data collections with new intelligence), and serve as a foundation for building rolling baselines of activity within the environment. Not having sufficient visibility will result in far more expensive investigations, and in the worst case, incidents that evade detection until massive damage has been inflicted.

Key Takeaways

If you lack visibility, achieving it should be a priority. There are many ways to establish good visibility practices within the environment, using numerous potential solutions at various price points. Make sure to approach it strategically; you need to collect usable data, have a way to access it, and store it for future use. Each organization must identify which specific visibility types will most directly address its investigative requirements and budget.
