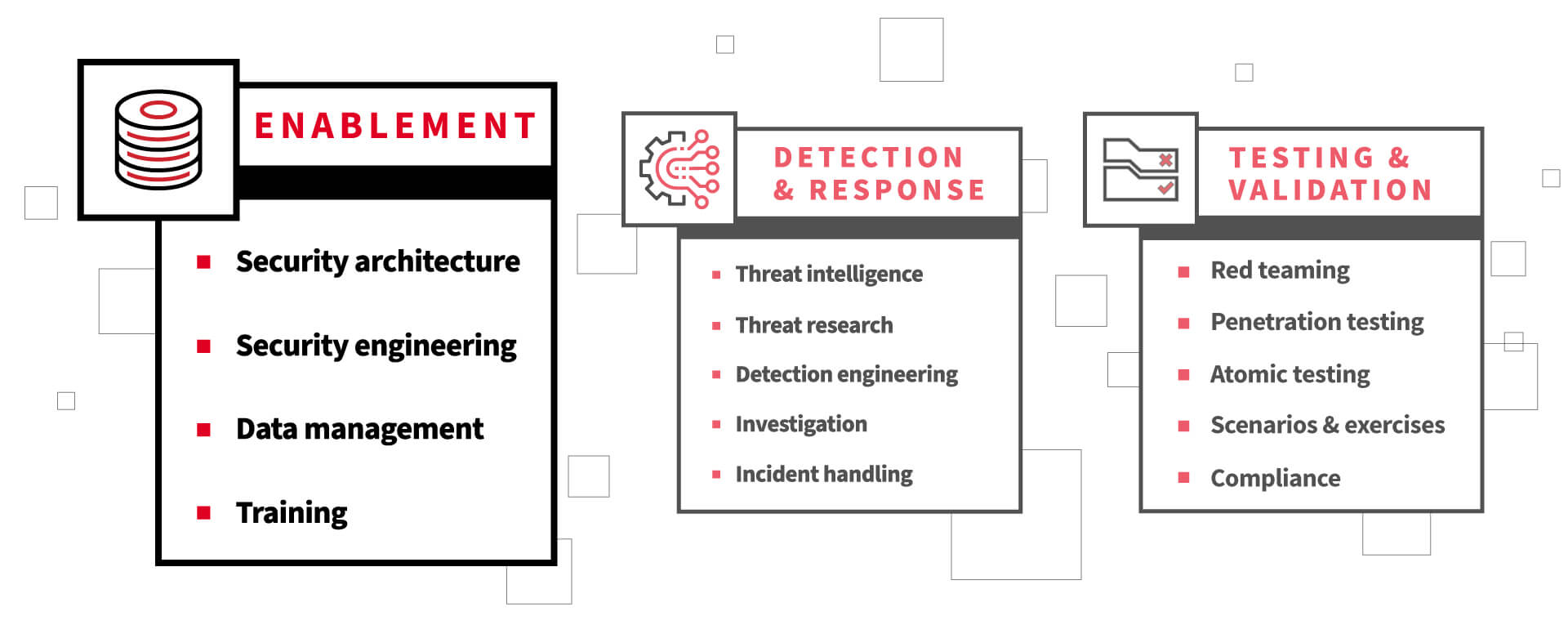

When building a security operations center (SOC), many organizations focus first on putting in place the core detection and response functions, which we covered in the first post of our three-part series. But we still have much ground to cover on our journey to define the components of a modern, efficient SOC. We must not overlook key enablement functions.

Enablement functions exist to put the core detection and response functions in a position to do what they need to do: detect and respond to threats. And if detection and response are expected to maintain quality at scale, enablement functions are a necessity. As shown above, the key enablement functions we’ll be discussing are:

- Security architecture

- Security engineering

- Data management

- Training

Security architecture

The security architect role is unique in that it requires technology, information security, and business expertise. Architects need to understand:

- The detection and response teams’ use cases, ranging from visibility to live response

- The technology in use by the business, including constraints related to cost, access, utilization, and change management

- The diverse and ever-changing ecosystem of security products and solutions

Security architects use the above knowledge to formulate a strategy aimed at providing the other functional teams with the right data and the right tools at the right time. Given that very few organizations have a security architecture function at the outset, let’s look at this through the lens of a common and practical scenario.

Even if they don’t have a formal information security team, most organizations have a small number of very common security controls in place:

- Endpoint antivirus or antimalware

- Network firewalls

- Multi-factor authentication

As the detection and response functions mature, a security architecture function may be added to respond to requirements like this one from intelligence or detection engineering:

“As an organization, we need access to more detailed endpoint activity, so that we can improve our detection coverage to include at least the 20 most commonly leveraged MITRE ATT&CK techniques.”

This directive isn’t overly prescriptive, and that’s the point. It’s a high-level requirement, and there may be many others like this one related to network, website activity, or specific applications that the team is charged with protecting.

The architecture team takes all of these requirements and works with the intelligence team to prioritize them based on the organization’s threat model. For the purposes of this scenario, we’ll assume that the endpoint-based use case is the top priority, and it now becomes a project. The focus shifts to identifying the details:

- What types of endpoint telemetry are required, specifically? And if there are many, how should they be prioritized?

- What systems or processes will need access to this data? Will it be fed into a SIEM or another analytics platform for regular use? Or does it simply need to be logged and available, for less frequent use?

- What platforms or operating systems may be affected by these threats? (This is important so that single- or cross-platform solutions can be considered.)

Given this information, the architecture and engineering teams can identify potential solutions, obtain access to these solutions on a trial basis, design test cases and success criteria, and ultimately add the right solution to the organization’s portfolio.
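The prioritization questions above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the telemetry type names (`process_start`, `command_line`, and so on) and the mapping from techniques to telemetry are hypothetical, and the short technique list is a stand-in for whatever ranking the intelligence team actually maintains. The technique IDs are real ATT&CK identifiers, but their selection here implies nothing about prevalence.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Technique:
    technique_id: str              # ATT&CK technique ID, e.g. "T1059"
    required_telemetry: frozenset  # hypothetical telemetry types needed to detect it


def coverage(priority_techniques, available_telemetry):
    """Split techniques into covered ones and gaps (id -> missing telemetry)."""
    covered, gaps = [], {}
    for technique in priority_techniques:
        missing = technique.required_telemetry - available_telemetry
        if missing:
            gaps[technique.technique_id] = sorted(missing)
        else:
            covered.append(technique.technique_id)
    return covered, gaps


def telemetry_priority(gaps):
    """Rank missing telemetry types by how many techniques each one blocks."""
    counts = {}
    for missing in gaps.values():
        for telemetry in missing:
            counts[telemetry] = counts.get(telemetry, 0) + 1
    # Most-blocking first; ties broken alphabetically for stable output.
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


# Illustrative inputs only; replace with the organization's own threat model.
PRIORITY = [
    Technique("T1059", frozenset({"process_start", "command_line"})),
    Technique("T1055", frozenset({"process_start", "cross_process_access"})),
    Technique("T1003", frozenset({"process_start", "cross_process_access"})),
    Technique("T1105", frozenset({"process_start", "network_connection"})),
]
AVAILABLE = frozenset({"process_start", "command_line"})

covered, gaps = coverage(PRIORITY, AVAILABLE)
ranked = telemetry_priority(gaps)
```

With these inputs, only one technique is fully covered, and `ranked` places `cross_process_access` ahead of `network_connection` because it unblocks two techniques rather than one. A result like this gives the architecture team a concrete basis for success criteria when trialing candidate solutions.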

Security engineering

Complementary to the architecture function, security engineering is responsible for implementing the organization’s strategy from a technical perspective. Initiatives for this team might include:

- Delivery of the specific defensive telemetry or data sources required for investigation, primarily by getting this data from the source to the destination where it can be used for detection and response

- Enablement of broad use cases, such as device identification or endpoint protection

- Integration of various controls and systems, via APIs or other interfaces

- Use cases for automating detective, investigative, or response processes

On the control side, careful hardware and software selection, methodical implementation, and good change management are requisites. Making the right moves in these areas will help guard against unintended consequences, such as disruption of business systems or processes.

It’s equally important to have sound, testable implementations of tools like the log aggregation pipeline, SIEM, SOAR, and other foundational technology upon which security teams rely. Ongoing testing (unit testing of software that the team creates and functional testing of the system end-to-end) will expose issues with data processing pipelines, unmanaged assets, and misconfigurations early so that they can be remediated.
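To make the distinction between unit and functional testing concrete, here is a small sketch in Python. Everything in it is an assumption for illustration: the three-field schema, the `normalize_event` and `run_pipeline` names, and the JSON-lines input format are hypothetical and do not describe any particular product's pipeline.

```python
import json

# Hypothetical minimum schema; a real pipeline would define its own.
REQUIRED_FIELDS = ("timestamp", "host", "event_type")


def normalize_event(raw_line):
    """Parse one JSON log line and return a normalized record.

    Raises ValueError for malformed JSON, non-object payloads,
    or records missing a required field.
    """
    record = json.loads(raw_line)  # json.JSONDecodeError subclasses ValueError
    if not isinstance(record, dict):
        raise ValueError("expected a JSON object")
    missing = [f for f in REQUIRED_FIELDS if f not in record]
    if missing:
        raise ValueError(f"missing required fields: {missing}")
    return {
        "timestamp": record["timestamp"],
        "host": str(record["host"]).lower(),
        "event_type": record["event_type"],
    }


def run_pipeline(raw_lines):
    """Normalize every line, keeping rejects so failures stay visible."""
    accepted, rejected = [], []
    for line in raw_lines:
        try:
            accepted.append(normalize_event(line))
        except ValueError:
            rejected.append(line)
    return accepted, rejected


# Unit test: one function, one behavior.
def test_hostname_is_lowercased():
    event = normalize_event(
        '{"timestamp": "2021-01-01T00:00:00Z", "host": "WS-01", "event_type": "logon"}'
    )
    assert event["host"] == "ws-01"


# Functional test: push a mixed batch through the whole path.
def test_pipeline_accounts_for_every_line():
    batch = [
        '{"timestamp": "2021-01-01T00:00:00Z", "host": "WS-01", "event_type": "logon"}',
        '{"host": "WS-02"}',
        "not json",
    ]
    accepted, rejected = run_pipeline(batch)
    assert len(accepted) == 1
    assert len(rejected) == 2
    assert len(accepted) + len(rejected) == len(batch)
```

The test functions use plain `assert` statements, so they can be called directly or collected by a test runner such as pytest. The design choice worth noting is that rejected lines are returned rather than discarded: a pipeline that silently drops malformed records is exactly the kind of issue that end-to-end testing is meant to surface early.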

Of all the enablement functions, security engineering is frequently the first one that an organization will implement. And oftentimes, this function exists well before it is formally recognized as a security engineering team with distinct roles. Like any enterprise technology, systems need to be deployed, configured, and made to talk to other systems for a variety of reasons. With this in mind, just about every organization is already doing security engineering out of necessity. And as the security operations program matures, so should the engineering function and the quality of its interactions with other teams.

Data management

Every functional area and every individual security operations function depends heavily on data, and that reliance is deepening every day. At the end of 2018, an Okta study found that large organizations (2,000+ employees) relied on an average of 129 business applications, and this was prior to the accelerated adoption of cloud-based solutions we saw in 2020. Just collecting telemetry such as logs and alerts from a fraction of these applications is a challenge unto itself. And this is to say nothing of the volume of data available from modern security instrumentation such as Endpoint Detection & Response, network security monitoring, and other such sources.

Couple the proliferation of applications and data sources with the decreasing cost of data storage, and it’s easy to find yourself overwhelmed with even basic inventory and organization. Enter data management, an increasingly important and valuable practice for any team.

This definition of information and data management from MITRE clearly articulates the scope and key activities:

“Information and data management (IDM) forms policies, procedures, and best practices to ensure that data is understandable, trusted, visible, accessible, optimized for use, and interoperable. IDM includes processes for strategy, planning, modeling, security, access control, visualization, data analytics, and quality.”

In the context of the SOC, good data management practices can complement the architecture and engineering functions and help spot inefficiencies, discrepancies, and waste.
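As a rough illustration of what spotting inefficiencies, discrepancies, and waste might look like in practice, the sketch below compares a data source inventory against the sources that detections actually depend on. The `DataSource` fields, the source names, the volumes, and the detection-to-source mapping are all hypothetical; a real inventory would likely live in a catalog or CMDB rather than in code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DataSource:
    name: str
    daily_gb: float        # approximate ingest volume per day
    owner: Optional[str]   # team accountable for the source, if any


def review_inventory(sources, detection_requirements):
    """Compare collected sources against what detections actually need.

    detection_requirements maps a detection name to the set of
    source names it depends on.
    """
    inventoried = {s.name for s in sources}
    needed = set().union(*detection_requirements.values())
    unused = [s for s in sources if s.name not in needed]
    return {
        # Waste: collected and stored, but no detection consumes it.
        "unused": sorted(s.name for s in unused),
        "unused_daily_gb": sum(s.daily_gb for s in unused),
        # Inefficiency: nobody is accountable for quality or access.
        "unowned": sorted(s.name for s in sources if s.owner is None),
        # Discrepancy: a detection expects data that is not inventoried.
        "missing": sorted(needed - inventoried),
    }


# Illustrative inventory only.
SOURCES = [
    DataSource("edr_process", 120.0, "secops"),
    DataSource("firewall", 300.0, "netops"),
    DataSource("legacy_proxy", 80.0, None),
]
DETECTIONS = {
    "suspicious_powershell": {"edr_process"},
    "dns_tunneling": {"dns_query"},
}

findings = review_inventory(SOURCES, DETECTIONS)
```

An unused source is not automatically waste, since some data is retained for investigation rather than detection, so findings like these are better treated as prompts for a conversation with the architecture and engineering teams than as a list of things to delete.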

Training

Like most of the enablement functions, training isn’t unique to security. Just as any high-functioning technology organization will include architects and engineers, any highly effective organization will invest in ongoing training and education.

In its most basic form, training can look like documentation that you ask or require the team to read. And in one of the more common forms, training may be as simple as assigning team members a mentor or partner with whom they will work over-the-shoulder for a period of time. Eventually, you may implement formalized classes and programs so that training can be scaled to meet demands for compliance, quality, or growth.

In any of these forms, training fosters a culture of continuous teaching and learning. And ultimately, training will help to drive consistency and quality in seemingly simple areas that scale poorly when left as institutional knowledge:

- Terminology—importantly, the specific terms or labels applied to system and data primitives upon which larger systems and workflows are built

- Processes—including everything from basic change management to incident management, handling, and response

- Principles and guidelines—collected and documented by the team over time, to help understand not just what decisions have been made, but why and how they have been made, decentralizing decision-making and increasing autonomy

Lastly, specifics aside, investing in training signals to your team that you understand the work, systems, and processes that will be your collective foundation.

You can read or re-read the first article in this series here, and stay tuned for the next article, which will explore the various components of the testing and validation function of security operations. Taken together, these articles will present a detailed look at all the different functions of a modern SOC and how all these functions connect.