We are in an age of complex networks. Today, it’s common for enterprises to operate out of multiple office locations with bring-your-own-device (BYOD) policies, 24/7 access requirements, flexible work schedules, insider threats, and much more muddying the already complicated world of information security. Potentially unwanted programs (PUPs) might seem like a low priority because of the uncertainty that surrounds them, but let’s take a closer look.

As a security manager, you have a lot to worry about, but above all you want to avoid a breach. With this in mind, you might have programs that cover the following (among other things):

- perimeter security (e.g., firewalls)

- endpoint security

- intrusion detection and prevention systems

- an incident response plan

In theory, you know the controls you have in place should detect suspicious activity and malware, but do they detect PUPs? By extension, who exactly determines what software is authorized on your network: your security administrators or individual employees?

A lot of people don’t care about PUPs, and we understand how PUP policy and detection can become an afterthought amid everything else security teams need to think about. Forced to choose between tackling Ryuk ransomware and Caffeine, any responsible security team would prioritize the former. However, we ran the numbers across our detection dataset and found that environments with more PUP detections could see up to five times as many malicious or suspicious detections as environments with fewer PUP detections.

Consider this article our manifesto against PUPs (and our excuse to share some adorable puppy pics). First let’s take a step back and figure out what we mean by “potentially unwanted.”

Starting with “potentially”

This implies that the program may or may not have a legitimate purpose in the environment. Virtual private network (VPN) software and browser plugins are good examples, especially when weighed against an organization’s policies on them.

Scenario 1

Bob in accounting sits at a desk in a corporate office building, using a computer protected by a segmented network and an entire suite of other security tools. Bob’s role does not require him to use a VPN (especially not one he downloaded himself from the Internet), but he’s downloaded and installed one anyway. Bob might simply be security- and privacy-conscious, but he might also be using the VPN to send and receive things he shouldn’t. Given the potential that Bob is engaging in some light insider threat activity with his VPN, we believe security teams should be able to detect unsanctioned VPN software in their environment.

Scenario 2

Alice in IT is sick and tired of her computer going to sleep and dropping Wi-Fi while her local scripts run updates on servers. However, she also values her sleep and sanity, so she’s not going to stay up all night moving her mouse periodically to keep her computer awake during the server maintenance window. Since corporate group policy objects (GPOs) prevent her from changing her power settings, she installs a browser plugin that keeps her computer from going to sleep, letting her updates run overnight without her being there. Unfortunately, Alice has no idea who created this plugin or whether that person cares about security. The extension may seem innocuous, but it could pose a risk. We think security teams should have visibility into the plugins users are running in their environment.

Then we have “unwanted”

Malware is, by definition, “unwanted.” Malware may install itself with a user’s interaction, but certainly not with their express permission. PUPs, on the other hand, require the user’s consent to download and install. The “unwanted” label usually stems from the additional software bundled with these programs, which is typically disclosed only in the fine print of a user agreement that almost no one reads. Beyond bundling sketchy software with seemingly benign software, “unwanted” can also describe software that a user wants to run but that the security team certainly doesn’t want them to run. Either way, most users probably have no idea what a given piece of software is actually doing to their computer.

Why should you care?

If PUPs are running freely in your environment, that usually signals broader problems:

- The organization is not practicing application or browser whitelisting.

- If it isn’t whitelisting applications and browser extensions, it’s less likely to be whitelisting IP addresses, domains, email senders, and so on.

- If whitelisting is missing, we can assume there are security gaps elsewhere.

Avoid the time suck

Also consider the response effort required of security analysts in an environment with loosely enforced PUP policies. Investigating unwanted software can take up a large share, if not the majority, of an analyst’s day-to-day work. Untrusted software generates a lot of noise on sensors and antivirus, along with a plethora of data to sift through. Moreover, many programs classified as PUPs are so poorly written that their behavior looks like malware, even when it isn’t.

Part of a security analyst’s job is to conduct open-source research and weigh the rest of the security community’s input on a particular artifact or tactic, technique, and procedure (TTP). This includes checking binaries against the verdicts of AV vendors. This is where PUPs make things tricky: if 10 vendors classify a file as “adware” and 10 others classify the same file as a “trojan,” which is it? The analyst may be forced down a rabbit hole of deeper analysis, which ends up being a time suck. Imagine the time saved by automating PUP detection so that your analysts can focus on definitely unwanted programs.
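To illustrate why split vendor verdicts are hard to act on, here is a minimal sketch that buckets per-vendor detection names into “PUP” or “malware” by keyword and declares a winner only when one bucket clearly dominates. The keyword lists, the `margin` threshold, and the `bucket`/`triage` functions are hypothetical assumptions for illustration; real vendor naming schemes vary widely, and this is not how Red Canary or any AV aggregator actually scores files.

```python
from collections import Counter

# Hypothetical keyword lists; real vendor detection names are far less uniform.
PUP_KEYWORDS = ("adware", "pup", "pua", "riskware", "unwanted", "p2p")
MALWARE_KEYWORDS = ("trojan", "ransom", "backdoor", "worm", "virus")


def bucket(label: str) -> str:
    """Map one vendor's detection name to a coarse bucket.

    PUP keywords are checked first, so a name matching both lists counts as PUP.
    """
    name = label.lower()
    if any(k in name for k in PUP_KEYWORDS):
        return "pup"
    if any(k in name for k in MALWARE_KEYWORDS):
        return "malware"
    return "other"


def triage(verdicts: dict[str, str], margin: float = 0.2) -> str:
    """Return 'pup', 'malware', or 'needs-review' from {vendor: detection name}.

    A bucket wins only if its share of classified verdicts exceeds the other
    bucket's share by more than `margin` (a hypothetical threshold).
    """
    counts = Counter(bucket(label) for label in verdicts.values())
    classified = counts["pup"] + counts["malware"]
    if classified == 0:
        return "needs-review"
    pup_share = counts["pup"] / classified
    malware_share = counts["malware"] / classified
    if pup_share - malware_share > margin:
        return "pup"
    if malware_share - pup_share > margin:
        return "malware"
    return "needs-review"
```

With the 10-versus-10 split described above, both shares are 0.5, so `triage` returns `"needs-review"`: exactly the case that still lands on an analyst’s desk.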

Detecting PUPs

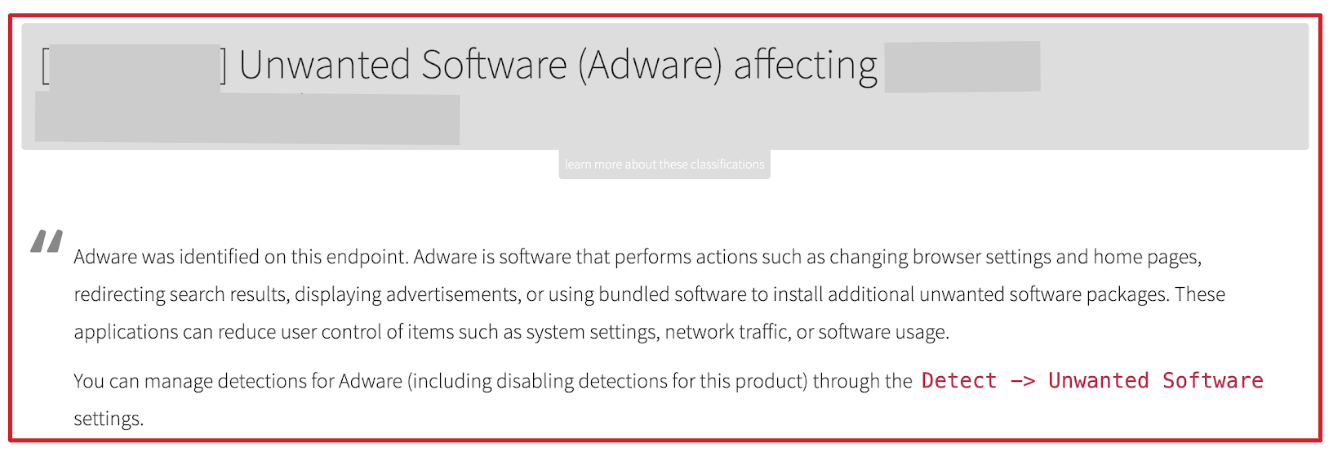

Red Canary has three main types of detections: malicious, suspicious, and unwanted (aka PUP). Most PUP detections are initially surfaced by our detectors that alert on malware. Again, PUPs tend to behave like malware; once we determine that a program isn’t malware, we assign it to one of three subcategories: adware, riskware, or P2P.

From here, the analyst team evaluates the potential threat posed by the software’s functionality. If the software is deemed a potential threat in our customer environments, we create a product detector for that specific program. We also fully understand that some of these programs are allowed, or even required, depending on the customer environment. The CIRT makes it easy for our customers to manage their unwanted detections, with options to mute detections for a program across the board or only on particular endpoints.
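To make the two muting options concrete, here is a minimal sketch of a suppression check that distinguishes muting a program everywhere from muting it only on specific endpoints. The `MuteRule` class, the `is_muted` function, and the program and host names are hypothetical illustrations, not Red Canary’s actual data model or API.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class MuteRule:
    """Hypothetical suppression rule for one unwanted program.

    endpoints=None mutes the program on every endpoint; otherwise the rule
    applies only to the listed hosts.
    """
    program: str
    endpoints: Optional[FrozenSet[str]] = None


def is_muted(program: str, endpoint: str, rules: list[MuteRule]) -> bool:
    """Return True if any rule suppresses this program on this endpoint."""
    for rule in rules:
        if rule.program.lower() != program.lower():
            continue
        if rule.endpoints is None or endpoint in rule.endpoints:
            return True
    return False


# Example: mute Caffeine everywhere, but a (hypothetical) VPN only on one IT host.
rules = [
    MuteRule("Caffeine"),
    MuteRule("ExampleVPN", frozenset({"it-admin-01"})),
]
```

Keeping the environment-wide and per-endpoint cases in one rule type means an organization that sanctions a tool for a single team doesn’t have to silence it for everyone else.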

Here’s what a PUP detection looks like for Red Canary users:

Conclusion

Endpoints with PUP detections are more likely to have malicious detections. Of the endpoints that received both PUP and malicious or suspicious detections in 2019, 67 percent received a PUP detection first, followed by one or more malicious or suspicious detections.

- Environments in the top quartile, ranked by percentage of endpoints with PUP detections, had on average 3.75 percent of their endpoints with malicious or suspicious detections.

- Environments in the bottom quartile had less than 1 percent of their endpoints affected by malicious or suspicious detections.
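The two metrics above can be expressed as simple computations over detection data. The sketch below assumes hypothetical record shapes (a per-endpoint list of timestamped detections, and per-environment endpoint flags) because the article does not describe the underlying dataset. It also reads “received a PUP detection first” as the earliest PUP detection preceding the earliest malicious or suspicious one, and defines quartiles simply as the top and bottom quarter of environments after sorting. It shows the method only and reproduces none of the figures above.

```python
from statistics import mean

# Hypothetical shape: endpoint_id -> list of (timestamp, kind),
# where kind is "pup", "malicious", or "suspicious".
Detections = dict[str, list[tuple[int, str]]]
# Hypothetical shape: env_id -> list of (has_pup, has_malicious_or_suspicious) per endpoint.
Environments = dict[str, list[tuple[bool, bool]]]


def pup_first_share(detections: Detections) -> float:
    """Among endpoints with both PUP and malicious/suspicious detections,
    return the fraction whose earliest PUP detection came first."""
    both = pup_first = 0
    for events in detections.values():
        pup_times = [t for t, kind in events if kind == "pup"]
        bad_times = [t for t, kind in events if kind in ("malicious", "suspicious")]
        if not pup_times or not bad_times:
            continue  # endpoint lacks one of the two detection types
        both += 1
        if min(pup_times) < min(bad_times):
            pup_first += 1
    return pup_first / both if both else 0.0


def quartile_comparison(environments: Environments) -> tuple[float, float]:
    """Rank environments by % of endpoints with PUP detections and return the
    mean % of endpoints with malicious/suspicious detections for the
    (top quartile, bottom quartile)."""
    rows = []
    for endpoints in environments.values():
        if not endpoints:
            continue
        pup_pct = 100 * sum(p for p, _ in endpoints) / len(endpoints)
        bad_pct = 100 * sum(b for _, b in endpoints) / len(endpoints)
        rows.append((pup_pct, bad_pct))
    if not rows:
        raise ValueError("no environments with endpoints")
    rows.sort(key=lambda row: row[0])  # ascending by PUP percentage
    q = max(1, len(rows) // 4)  # size of one quartile, at least one environment
    bottom = mean(bad for _, bad in rows[:q])
    top = mean(bad for _, bad in rows[-q:])
    return top, bottom
```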

This is not to say that ALL third-party utilities, browser extensions, P2P clients, and VPNs are bad. Some organizations require these types of programs, but those that do should perform due diligence when researching them and select them from reputable, enterprise-class vendors.

Beyond that, it helps to maintain an up-to-date software inventory and an organizational policy on PUPs. Security departments should also consider developing software review and approval processes, application whitelists, and remediation playbooks for PUPs, to name a few controls.
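As a starting point for pairing a software inventory with a whitelist, here is a minimal sketch that flags every inventoried program or browser extension not on an approved list, grouped by endpoint. The `InstalledSoftware` record, the `find_unapproved` function, and the host and product names are hypothetical; a production control would match on stronger identifiers than display names.

```python
from collections.abc import Iterable
from dataclasses import dataclass


@dataclass(frozen=True)
class InstalledSoftware:
    """Hypothetical inventory entry: a program or browser extension on an endpoint."""
    endpoint: str
    name: str


def find_unapproved(
    inventory: Iterable[InstalledSoftware], allowlist: Iterable[str]
) -> dict[str, list[str]]:
    """Group inventory entries that are not on the allowlist by endpoint."""
    # Case-insensitive match on display name only; real tooling would also
    # check publisher, code signature, or file hash.
    approved = {name.casefold() for name in allowlist}
    findings: dict[str, list[str]] = {}
    for item in inventory:
        if item.name.casefold() not in approved:
            findings.setdefault(item.endpoint, []).append(item.name)
    return findings


# Example inventory echoing the Bob and Alice scenarios (all names hypothetical).
inventory = [
    InstalledSoftware("acct-bob-07", "ExampleVPN"),
    InstalledSoftware("acct-bob-07", "Microsoft Excel"),
    InstalledSoftware("it-alice-02", "Keep Awake Extension"),
]
```

Running `find_unapproved(inventory, ["Microsoft Excel"])` would surface Bob’s VPN and Alice’s plugin for the review and approval process rather than silently allowing them.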